Azure Data Factory / Synapse Analytics: Validate Files and Folders Before Using Them!

In real-world projects, almost every pipeline deals with Azure Storage or Azure SQL Database, whether that means blobs, folders/directories, or files. Validating a file, folder, or table before actually using it becomes a crucial step.

A few use cases:

- Suppose we have to load a file named SalesData.csv from a folder that gets created every day in the format yyyy/MM/dd. Before we use this file in a Copy Data activity or a Data Flow activity, we first have to validate whether the folder exists.

- If the folder exists, we might want to validate the size of the file. This is important because sometimes the files bring no data, i.e. a 0 KB file.

- Another use case is validating a table structure or file structure to make sure it complies with what we expect.
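For the daily-folder use case, ADF typically builds the path with dynamic content, for example `@concat(formatDateTime(utcnow(), 'yyyy/MM/dd'), '/SalesData.csv')`. The Python sketch below reproduces the same date formatting locally. It can help sanity-check which folder a given run date should resolve to. The helper name `daily_file_path` is hypothetical.

```python
from datetime import date


def daily_file_path(run_date: date, file_name: str = "SalesData.csv") -> str:
    """Build the yyyy/MM/dd/<file_name> path for a given run date (hypothetical helper)."""
    # %Y/%m/%d gives a 4-digit year and zero-padded month/day, matching yyyy/MM/dd
    folder = run_date.strftime("%Y/%m/%d")
    return f"{folder}/{file_name}"


print(daily_file_path(date(2024, 3, 5)))  # 2024/03/05/SalesData.csv
```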

In ADF/Synapse, we can use the Validation activity and/or the Get Metadata activity to validate files, folders, and tables.

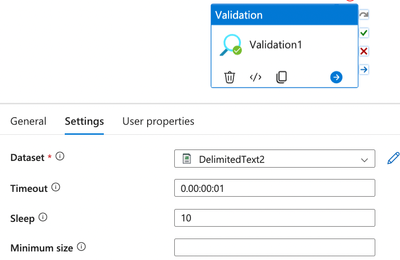

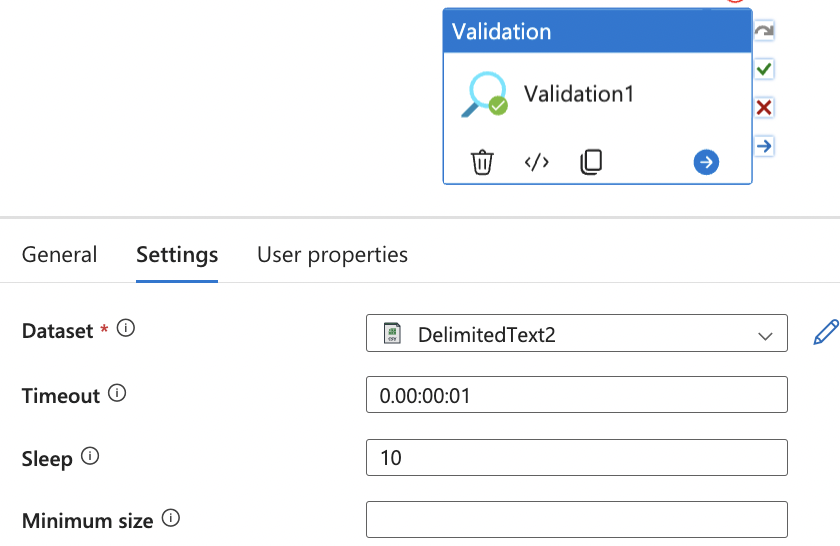

The Validation activity settings are described below.

In the above image, we have referenced a dataset called DelimitedText2, which could point to a folder or file in Azure Blob Storage, or to a table in an Azure SQL DB. The remaining settings are:

- Timeout: how long the activity keeps validating before it times out (note that it won't fail). For instance, if the Validation activity is meant to check for the presence of a file/folder/table and it doesn't find the item, execution stops and the activity times out once the timeout period has elapsed.
- Sleep: the number of seconds the activity waits before revalidating (or timing out).
- Minimum size: the minimum size of a file in bytes (not applicable to a table-based dataset).
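In the pipeline's JSON view, these settings map to the `dataset`, `timeout` (a `d.hh:mm:ss` string), `sleep` and `minimumSize` properties of a `Validation` activity. The sketch below builds such a definition as a Python dict, assuming that property layout. The activity name `ValidateSalesFile` is hypothetical. Setting `minimumSize` to 1 byte is one way to reject the 0 KB files mentioned earlier.

```python
import json


def format_adf_timespan(total_seconds: int) -> str:
    """Format a number of seconds as the d.hh:mm:ss string used by the timeout property."""
    days, rem = divmod(total_seconds, 86400)
    hours, rem = divmod(rem, 3600)
    minutes, seconds = divmod(rem, 60)
    return f"{days}.{hours:02d}:{minutes:02d}:{seconds:02d}"


def validation_activity(name, dataset_name, timeout_seconds, sleep_seconds, minimum_size_bytes=None):
    """Build a minimal Validation activity definition (assumed property layout)."""
    type_props = {
        "dataset": {"referenceName": dataset_name, "type": "DatasetReference"},
        "timeout": format_adf_timespan(timeout_seconds),
        "sleep": sleep_seconds,  # seconds between validation attempts
    }
    if minimum_size_bytes is not None:
        type_props["minimumSize"] = minimum_size_bytes  # file datasets only
    return {"name": name, "type": "Validation", "typeProperties": type_props}


# Wait up to 1 hour, re-checking every 60 seconds, and require at least 1 byte
activity = validation_activity("ValidateSalesFile", "DelimitedText2",
                               timeout_seconds=3600, sleep_seconds=60, minimum_size_bytes=1)
print(json.dumps(activity, indent=2))
```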

When the Validation activity completes or times out, we can inspect its output JSON to see the validation results.
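The Validation activity's polling runs inside the service, so the sketch below is only a local Python analogy of how timeout, sleep and minimum size interact. It re-checks a local file until the file exists with enough bytes or the timeout elapses. The function `wait_for_file` and the keys of its result dict are hypothetical. They do not reproduce the activity's actual output JSON.

```python
import os
import tempfile
import time


def wait_for_file(path, timeout_s, sleep_s, minimum_size=0):
    """Re-check `path` until it exists with at least `minimum_size` bytes or the timeout elapses."""
    deadline = time.monotonic() + timeout_s
    while True:
        exists = os.path.isfile(path)
        size = os.path.getsize(path) if exists else None
        if exists and size >= minimum_size:
            return {"exists": True, "size": size, "timed_out": False}
        if time.monotonic() >= deadline:
            # A timeout is reported in the result rather than raised as an error
            return {"exists": exists, "size": size, "timed_out": True}
        time.sleep(sleep_s)  # wait between attempts (the "sleep" setting)


with tempfile.TemporaryDirectory() as tmp:
    target = os.path.join(tmp, "SalesData.csv")
    open(target, "w").close()  # a 0-byte file, like the "0 KB" case
    empty_result = wait_for_file(target, timeout_s=0.05, sleep_s=0.01, minimum_size=1)
    with open(target, "w") as f:
        f.write("OrderId,Amount\n1,10\n")
    ready_result = wait_for_file(target, timeout_s=0.05, sleep_s=0.01, minimum_size=1)

print(empty_result)  # timed_out is True: the file exists but has 0 bytes
print(ready_result)  # timed_out is False: the file now has content
```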

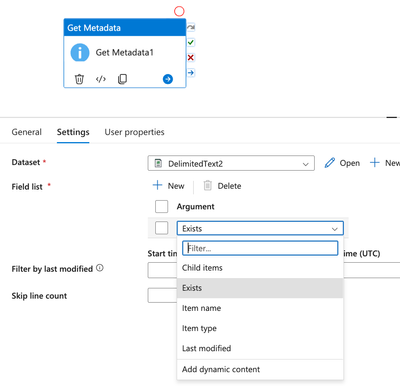

Let us look at the GetMetadata activity and its settings.

As with the Validation activity, the Get Metadata activity has a few properties that help us validate a file, folder, or table in ADF/Synapse. The available properties (the field list) differ depending on whether the dataset points to a folder, a file, or a table.

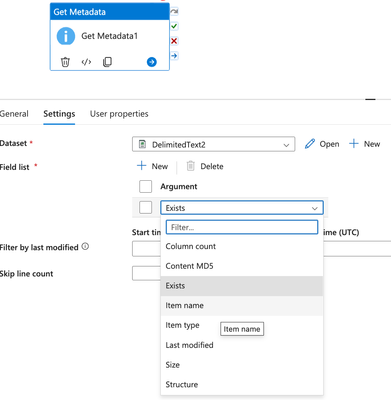

The above image shows the field list for a folder in ADLS. When the dataset points to a file or a table instead, a few additional properties become available, such as Column Count, Size, and Structure.
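In the pipeline JSON, the fields selected for a Get Metadata activity end up in its `fieldList` array. The sketch below builds such a definition in Python. It rejects field names outside the set documented for the activity (`itemName`, `itemType`, `size`, `created`, `lastModified`, `childItems`, `contentMD5`, `structure`, `columnCount`, `exists`). It does not check which fields apply to a folder, file, or table. Real definitions usually carry additional store/format settings as well. The activity name `GetSalesFileMetadata` is hypothetical.

```python
import json

# fieldList values documented for the Get Metadata activity; which ones a given
# dataset supports (folder vs. file vs. table) is not checked here.
SUPPORTED_FIELDS = {
    "itemName", "itemType", "size", "created", "lastModified",
    "childItems", "contentMD5", "structure", "columnCount", "exists",
}


def get_metadata_activity(name, dataset_name, fields):
    """Build a minimal GetMetadata activity definition with the requested fieldList."""
    unknown = [f for f in fields if f not in SUPPORTED_FIELDS]
    if unknown:
        raise ValueError(f"Unsupported fieldList entries: {unknown}")
    return {
        "name": name,
        "type": "GetMetadata",
        "typeProperties": {
            "dataset": {"referenceName": dataset_name, "type": "DatasetReference"},
            "fieldList": list(fields),
        },
    }


# Hypothetical file-level check: existence, size, column count, and structure
file_check = get_metadata_activity(
    "GetSalesFileMetadata", "DelimitedText2",
    ["exists", "size", "columnCount", "structure"],
)
print(json.dumps(file_check, indent=2))
```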

Having seen the individual properties (field lists) of both activities, it's time to compare their similarities and differences.

The table below compares the capabilities of the Validation activity and the Get Metadata activity.

| Check | Validation activity | Get Metadata activity | Property used |
|---|---|---|---|
| Validate file | Yes | Yes | Exists (returns boolean) |
| Validate folder | Yes | Yes | Exists (returns boolean) |
| Validate file size | Yes | Yes | Get Metadata: use the Size property in the field list. Validation: use the Minimum size field in the Settings tab. |
| Validate file structure | No | Yes | Get Metadata: use the Structure field. Then use another activity, such as If Condition, to validate the structure against the expected one. |
| Validate file column count | No | Yes | Get Metadata: use the Column Count field. Then use another activity, such as If Condition, to validate the count against the expected one. |
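For the structure and column count checks, the If Condition expression can reference the Get Metadata output directly, for example `@equals(activity('GetSalesFileMetadata').output.columnCount, 2)`, where `GetSalesFileMetadata` is a hypothetical activity name. The Python sketch below performs the equivalent comparisons on a dict shaped like that output. It assumes `structure` is a list of `name`/`type` entries. The sample output and expected schema are made up for illustration.

```python
def check_file_metadata(output, expected_structure, min_size=1):
    """Return a list of problems found in a Get Metadata-style output; empty means all checks passed."""
    if not output.get("exists", False):
        return ["file does not exist"]
    problems = []
    if output.get("size", 0) < min_size:
        problems.append(f"size {output.get('size')} is below {min_size} bytes")
    if output.get("columnCount") != len(expected_structure):
        problems.append(f"expected {len(expected_structure)} columns, got {output.get('columnCount')}")
    # Compare column names and types in order
    actual = [(c["name"], c["type"]) for c in output.get("structure", [])]
    expected = [(c["name"], c["type"]) for c in expected_structure]
    if actual != expected:
        problems.append(f"structure mismatch: {actual} != {expected}")
    return problems


expected = [{"name": "OrderId", "type": "String"}, {"name": "Amount", "type": "String"}]
# Hypothetical output, shaped like a Get Metadata result for a delimited file
sample_output = {"exists": True, "size": 2048, "columnCount": 2, "structure": expected}
print(check_file_metadata(sample_output, expected))  # []
```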

In a nutshell, ADF/Synapse comes with a variety of activities, some with similar characteristics or capabilities. When it comes to validation, the use case decides whether we use the Validation activity, the Get Metadata activity, or both.
