KEDA (Kubernetes event-driven autoscaling) in AKS and identities

Plenty of blogs and videos already explain how KEDA works. This post instead looks more closely at identities when KEDA is used with external resources, which is a concern for some customers. We will review a few basics first and then introduce how identities are used with KEDA.

KEDA 101

As the name of the project implies, KEDA autoscales Kubernetes workloads/apps based on events or other criteria. KEDA augments the existing HPA (Horizontal Pod Autoscaler) with new autoscaling capabilities. It adds capacity proactively, scaling a workload out or in based on the data the application has to process rather than on its CPU/memory consumption. KEDA also makes more efficient use of Kubernetes compute resources by supporting ‘scale to 0’. Overall, KEDA improves application performance by scaling proactively and improves resource utilization. If you want to learn more about KEDA, visit keda.sh.

Internal metrics and HPA

Once the metrics server is installed, a Kubernetes cluster supports horizontal pod autoscaling (also known as HPA), which adds or removes replicas. To do this, HPA compares observed metrics against a target metric value. These can be internal cluster metrics such as the CPU/memory usage of the app (pod), custom metrics, or even external metrics. Internal cluster metrics, sometimes called core metrics, are served by the ‘Metrics server’ inside the cluster. The metrics server serves only internal metrics, and its whole purpose is to support critical capabilities such as autoscaling inside the cluster.
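To make the idea of a target metric value concrete, the sketch below reproduces the replica calculation described in the Kubernetes HPA documentation, desiredReplicas = ceil(currentReplicas × currentMetricValue / desiredMetricValue), using integer shell arithmetic. The function name hpa_desired_replicas and the sample numbers are illustrative only, and the real controller also applies a tolerance and min/max bounds that are left out here.

```shell
# Simplified model of the HPA replica calculation (not the controller's code).
# Usage: hpa_desired_replicas CURRENT_REPLICAS CURRENT_METRIC TARGET_METRIC
hpa_desired_replicas() {
  current_replicas=$1
  current_metric=$2
  target_metric=$3
  # Ceiling division with integers: ceil(a / b) == (a + b - 1) / b
  echo $(( (current_replicas * current_metric + target_metric - 1) / target_metric ))
}

# 4 pods averaging 90% CPU against a 60% target
hpa_desired_replicas 4 90 60   # prints 6
```

With 4 pods running at 90% against a 60% target, the calculation asks for 6 pods so that the average falls back to roughly the target.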

External metrics and KEDA

To serve external metrics to the cluster, we need a component that can interact with various external services and expose the relevant metrics to the Kubernetes cluster. HPA can then monitor these external metrics and scale appropriately. Installing KEDA deploys an operator in the Kubernetes cluster, and this operator contains the code (known as scalers) that interacts with the various external services.

Limitations of HPA without KEDA

Among the capabilities that KEDA provides, scale to 0 stands out. From a consolidation and resource utilization standpoint, scaling down to 0 is very important. On its own, HPA scales an app down to a minimum of 1 replica. That might be just one pod for one application, but when you have tens or hundreds of applications and a considerable portion of them don’t need a pod running all the time, scaling them down to 0 frees up an enormous amount of CPU and memory in the cluster, which in turn lets the cluster autoscaler reduce the number of nodes. This results in significant savings. Double this saving if you are running them in two different regions for redundancy! Also, in certain use cases, monitoring CPU or memory does not help in scaling deployments. For example, if there are thousands of messages/blobs to be processed, HPA is not aware of them and cannot add pods to process the messages concurrently. KEDA fills this gap.
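The difference can be illustrated with a small model of queue-driven scaling. This is only a sketch under simplifying assumptions: the function name queue_desired_replicas, the target of 5 messages per pod and the cap of 20 pods are all hypothetical, and it is not KEDA's actual implementation. It only shows the shape of the decision: no pods for an empty queue, otherwise enough pods to keep roughly the target number of messages per pod.

```shell
# Simplified model of queue-driven scaling with scale to 0 (not KEDA's code).
# Usage: queue_desired_replicas QUEUE_LENGTH TARGET_PER_REPLICA MAX_REPLICAS
queue_desired_replicas() {
  queue_length=$1
  target_per_replica=$2
  max_replicas=$3
  # Empty queue: nothing to process, so no pods are needed.
  if [ "$queue_length" -le 0 ]; then
    echo 0
    return
  fi
  # Otherwise aim for about target_per_replica messages per pod (ceiling division).
  desired=$(( (queue_length + target_per_replica - 1) / target_per_replica ))
  # Never exceed the configured upper bound.
  if [ "$desired" -gt "$max_replicas" ]; then
    desired=$max_replicas
  fi
  echo "$desired"
}

queue_desired_replicas 0 5 20      # prints 0
queue_desired_replicas 42 5 20     # prints 9
queue_desired_replicas 5000 5 20   # prints 20
```

A CPU-based HPA has no equivalent of the first case, because a pod must already be running for there to be any CPU usage to measure.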

KEDA, its components and authentication

A scaler is configured through a CRD in Kubernetes. When KEDA is installed, two components are deployed in the KEDA namespace. One is a metrics server that serves external metrics; the other is the KEDA operator, whose job is to interact with the various resources and to support scale to 0. Both run with the service account keda-operator.

The KEDA operator watches these CRDs and takes action. KEDA uses the ScaledObject CRD to understand ‘what’ workload to scale and based on ‘what’ external resource (such as Kafka, Azure Event Hubs, etc.). KEDA needs to be authenticated and authorized to scale resources such as deployments and jobs inside the cluster, and it also needs to be authenticated and authorized for external resources. For authorization inside the cluster, KEDA is given something close to super-user privileges. You can view this by listing the clusterrole for keda-operator. The following is what I see with KEDA 2.7.1.

With this privilege, KEDA has full access to the scale subresource within the cluster. While the clusterrole takes care of that, we still need to understand how KEDA is authenticated and authorized for external resources.

Please refer to KEDA Authentication to understand the various authentication options. In this blog I compare the secret and pod identity methods. With the secret method, you define a Kubernetes secret within your namespace and KEDA reads it to authenticate to the resource. Remember that KEDA has a cluster role that grants get, list and watch on secrets. While this might be acceptable since KEDA is a system-type component that helps with autoscaling, you can avoid using and managing secrets altogether by leveraging managed identity. Managed identity is a well-established pattern in Azure, and AKS leverages it through its own feature called pod identity. Pod identity is currently being upgraded (or revamped) to v2, also known as workload identity. There are several examples of the secret method on keda.sh and in other blogs, so let’s focus on how to implement a pattern with pod identity and KEDA that is acceptable from a security point of view. Let’s understand this with an example.

Let’s assume that we have a namespace called queuezone. We want to scale an object (a deployment) called queue-processor. The external resource that we would like to watch is an Azure Storage Account Queue (we will refer to this as Azure Queue going forward). As explained above, the KEDA operator is already fully authorized to scale the queue-processor deployment, so nothing additional needs to be defined for authentication/authorization inside the cluster. However, we need to authorize KEDA to interact with Azure Queue. Let’s focus on how to use a ‘user-assigned managed identity’ (referred to as UAMI from here on). In this example the cluster is named aksdemo and its resource group is aksdemorg.

First, we need to create the UAMI. This is just an identity in Azure AD.

az identity create --name aaduami4keda --resource-group MC_aksdemorg_aksdemo_centralus

It’s better to place this identity in the MC resource group (the cluster’s node resource group), as it’s an identity tied to this cluster.

Next, we need to map this UAMI to a pod identity. Pod identity is what configures the AKS cluster to assign the UAMI to a pod in a namespace. In our case, the namespace is keda. We also need to give the pod identity a name, ‘podidentity4autoscaling’. We are using ‘managed mode’ with pod identity. For more information about managed mode, see the following page.

Pod Identity in Managed Mode | Azure Active Directory Pod Identity for Kubernetes

az aks pod-identity add --cluster-name aksdemo --resource-group aksdemorg --namespace keda --name podidentity4autoscaling --identity-resource-id "copy-paste-resource-id-of-uami-here"

Once this is defined, you can check the configuration in two places: at the AKS config level (the AKS resource) and at the Kubernetes level.

AKS config level:

az aks show -n aksdemo -g aksdemorg

Check ‘userAssignedIdentities’ section.

K8S level:

kubectl get azureidentity -n keda

kubectl get azureidentitybinding -n keda

As stated in the managed mode documentation for pod identity, you can also see this identity assigned to all VMSSes in the cluster.

So, we have met the requirements of defining a pod identity and linking it to a namespace in AKS.

The next step is to leverage (assume) this pod identity in the KEDA operator. One method is to set this parameter while installing KEDA.

helm install keda kedacore/keda --set podIdentity.activeDirectory.identity=podidentity4autoscaling --namespace keda

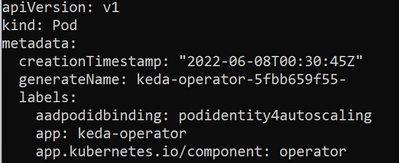

You can verify this by checking the KEDA operator pod in the keda namespace. You should see the aadpodidbinding label set to the pod identity name.

We’ve just completed the plumbing work all the way from the KEDA operator to the UAMI in Azure AD. We still haven’t authorized this UAMI to access Azure Queue. This can be done with az role assignment or in the Azure Portal. You only need to grant the limited access required to read the information you need (e.g. you don’t need to grant contributor or queue contributor). You can reserve privileged access to this resource (the queue), such as processing messages from the queue, for your scaled object. In this case, our deployment runs in the queuezone namespace, and a different pod identity with more privilege could be assigned to that namespace. This way, we follow separation of roles and the principle of least privilege. The example below uses the ‘Storage Queue Data Reader’ and ‘Storage Blob Data Reader’ roles for demonstration purposes, but you could use a more restrictive role to derive metrics.
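As a sketch of the az role assignment step, the script below builds a role assignment scoped to a single storage account and prints the resulting command for review instead of running it. The subscription ID, principal ID, storage account name and the storage account's resource group are placeholders I made up for illustration; only the UAMI name and the ‘Storage Queue Data Reader’ role come from this post.

```shell
# Hypothetical values: replace with your own subscription, resource group and storage account.
SUBSCRIPTION_ID="00000000-0000-0000-0000-000000000000"
STORAGE_RG="aksdemorg"
STORAGE_ACCOUNT="queuezonestorage"

# Principal ID of the UAMI (placeholder). One way to look it up, assuming you are logged in:
#   az identity show --name aaduami4keda \
#     --resource-group MC_aksdemorg_aksdemo_centralus --query principalId -o tsv
PRINCIPAL_ID="11111111-1111-1111-1111-111111111111"

# Scope the assignment to the one storage account rather than the whole subscription.
SCOPE="/subscriptions/${SUBSCRIPTION_ID}/resourceGroups/${STORAGE_RG}/providers/Microsoft.Storage/storageAccounts/${STORAGE_ACCOUNT}"

# Build the command as text so it can be reviewed before it is run.
CMD=$(printf 'az role assignment create --assignee %s --role "%s" --scope %s' \
  "$PRINCIPAL_ID" "Storage Queue Data Reader" "$SCOPE")
echo "$CMD"
```

Keeping the scope at the storage account level, or narrower, is what limits the KEDA identity to reading queue data in that one account.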

KEDA usually supports more than one deployment in the cluster. Do you really need to create another identity for each one? One approach is to grant the read-type role on each resource to the same user-assigned managed identity used by the KEDA operator. It’s like using a system account (podidentity4autoscaling) to scrape (interact with) various resources. KEDA might offer different support in the future, but for now this is the better approach and could be an acceptable method.

Here is what the TriggerAuthentication and ScaledObject look like. The queue-processor deployment is not shown. The queue-processor deployment could read from the queue with a service account that has more privilege than the aaduami4keda UAMI.
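As a minimal sketch of such a pair, the script below writes a TriggerAuthentication that uses the azure pod identity provider and a ScaledObject with an azure-queue trigger to a local file. The object names, the queue name orders, the storage account name queuezonestorage, the queue length target and the replica bounds are assumptions for illustration; only the queuezone namespace and the queue-processor deployment come from this post.

```shell
# Write a hypothetical TriggerAuthentication and ScaledObject to a file for review.
cat > keda-queue-scaling.yaml <<'EOF'
apiVersion: keda.sh/v1alpha1
kind: TriggerAuthentication
metadata:
  name: azure-queue-auth        # hypothetical name
  namespace: queuezone
spec:
  podIdentity:
    provider: azure             # use the pod identity bound to the KEDA operator
---
apiVersion: keda.sh/v1alpha1
kind: ScaledObject
metadata:
  name: queue-processor-scaler  # hypothetical name
  namespace: queuezone
spec:
  scaleTargetRef:
    name: queue-processor       # the deployment to scale
  minReplicaCount: 0            # allow scale to 0
  maxReplicaCount: 20           # hypothetical upper bound
  triggers:
    - type: azure-queue
      metadata:
        queueName: orders                # hypothetical queue name
        accountName: queuezonestorage    # hypothetical storage account
        queueLength: "5"                 # target messages per replica
      authenticationRef:
        name: azure-queue-auth
EOF

# Apply it once the values match your environment:
#   kubectl apply -f keda-queue-scaling.yaml
```

No secret or connection string appears in either object: the trigger names the storage account and queue, and the TriggerAuthentication tells KEDA to authenticate with the pod identity assigned to the operator.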

I would like to thank Tom Kerkhove for reviewing this blog.
