Azure Data Factory: How to split a file into multiple output files with Bicep

Introduction

In this article we will see how to split a CSV file located in an Azure Storage Account using an Azure Data Factory Data Flow.

We will do it with Azure Bicep in order to demonstrate the benefits of Infrastructure as Code (IaC), including:

- Reviewing the planned infrastructure changes before deployment through the what-if feature.

- Reproducible and testable infrastructure through templated deployments.

A complete procedure to deploy the following resources is available here: https://github.com/JamesDLD/bicep-data-factory-data-flow-split-file

- Azure Storage Account

- Uploading a test file that will be split

- Azure Data Factory

- Azure Data Factory Linked Service to connect Data Factory to our Storage Account

- Azure Data Factory Data Flow that will split our file

- Azure Data Factory Pipeline to trigger our Data Flow

- Bonus: Azure Data Factory Pipeline to clean up the output container for your demos

Bicep code to create our linked service

The following Bicep code demonstrates how to create a Storage Account Linked Service through Azure Bicep.

The following limitations may not concern everyone, but to keep this demo accessible to all readers we will create the Storage Account linked service with a connection string instead of a managed identity, even though a managed identity is what I usually recommend.

If your blob account enables soft delete, system-assigned/user-assigned managed identity authentication isn’t supported in Data Flow.

If you access the blob storage through a private endpoint using Data Flow, note that when system-assigned/user-assigned managed identity authentication is used, Data Flow connects to the ADLS Gen2 endpoint instead of the Blob endpoint. Make sure you create the corresponding private endpoint in ADF to enable access.

Source: Data Flow/User-assigned managed identity authentication

Let’s have a look at the Bicep code!

The only trick here is to reference an existing storage account and build its connection string in Bicep at deployment time, so that no secret is written in your code.
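As an illustration of that trick, here is a minimal sketch, not necessarily the exact code from the repository. It assumes the Data Factory and the Storage Account already exist in the target resource group. The parameter names and the linked service name `StorageLinkedService` are hypothetical.

```bicep
// Hypothetical parameter names: adjust them to your own deployment.
param dataFactoryName string
param storageAccountName string

// Reference resources that already exist instead of redeploying them.
resource dataFactory 'Microsoft.DataFactory/factories@2018-06-01' existing = {
  name: dataFactoryName
}

resource storageAccount 'Microsoft.Storage/storageAccounts@2022-09-01' existing = {
  name: storageAccountName
}

// The account key is read with listKeys() at deployment time,
// so it never appears in the template or in source control.
resource storageLinkedService 'Microsoft.DataFactory/factories/linkedservices@2018-06-01' = {
  parent: dataFactory
  name: 'StorageLinkedService' // hypothetical name
  properties: {
    type: 'AzureBlobStorage'
    typeProperties: {
      connectionString: 'DefaultEndpointsProtocol=https;AccountName=${storageAccount.name};AccountKey=${storageAccount.listKeys().keys[0].value};EndpointSuffix=${environment().suffixes.storage}'
    }
  }
}
```

Using `environment().suffixes.storage` rather than a hard-coded endpoint suffix keeps the template usable in other Azure clouds.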

Bicep code to create our Azure Data Factory Data Flow

Based on the reference “Microsoft.DataFactory factories/dataflows”, we will create the Azure Data Factory Data Flow that will split our file into multiple files.

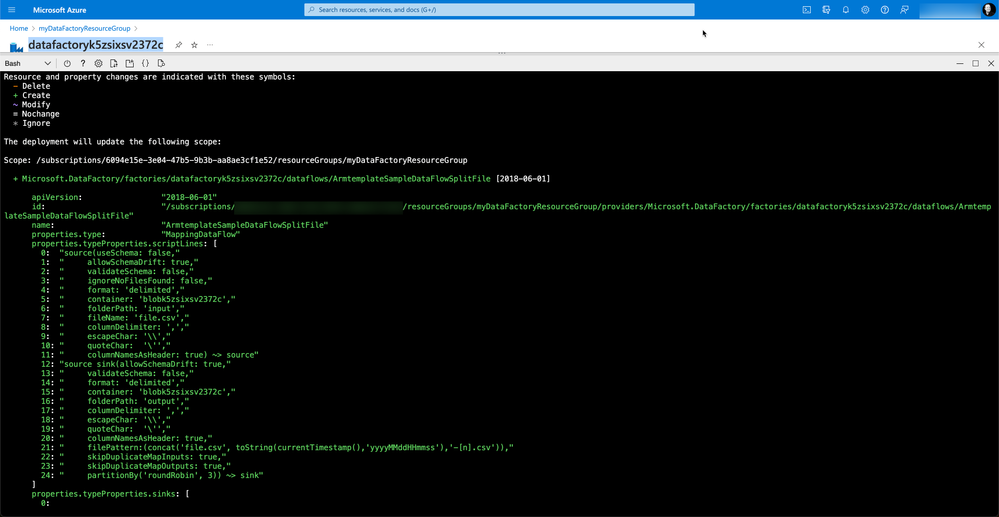

When using the az deployment what-if option we can see the following changes. This is a convenient way to review the requested changes before applying them.

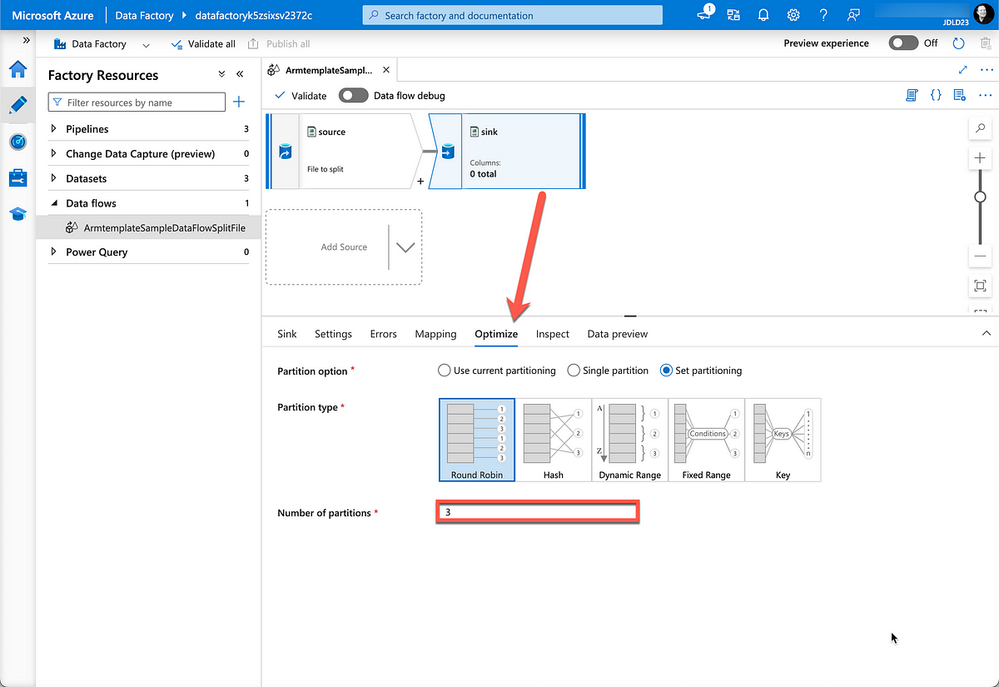

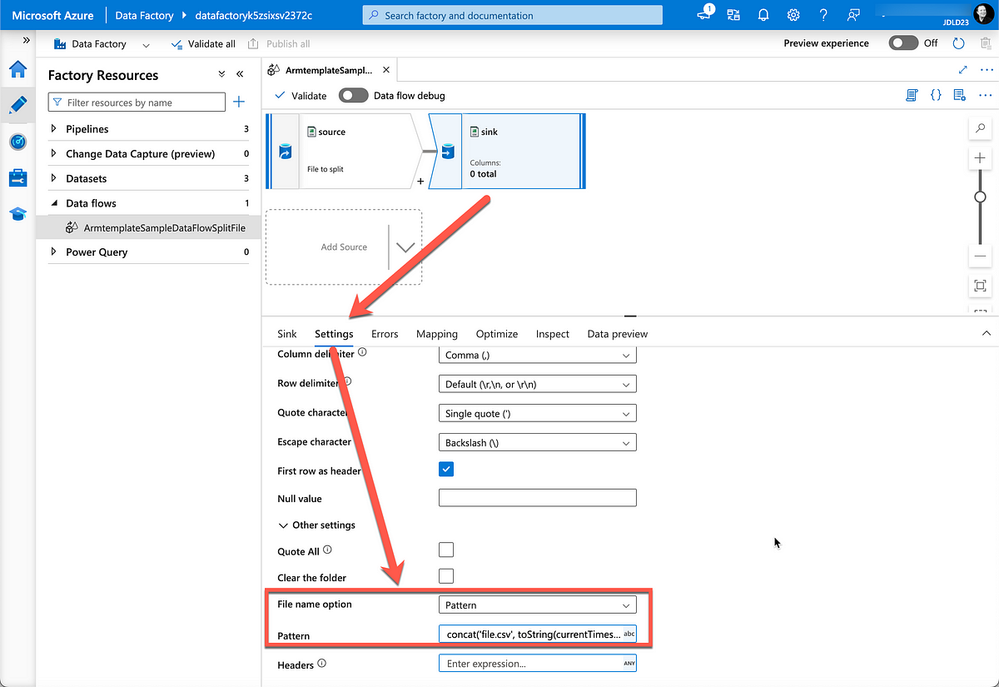

The Data Flow looks like the following screenshot, where we can see the number of partitions that will be created. In our context, it corresponds to the number of CSV files that will be generated from our input CSV file.

The other trick here is to use a file name pattern to control the names of the target files.

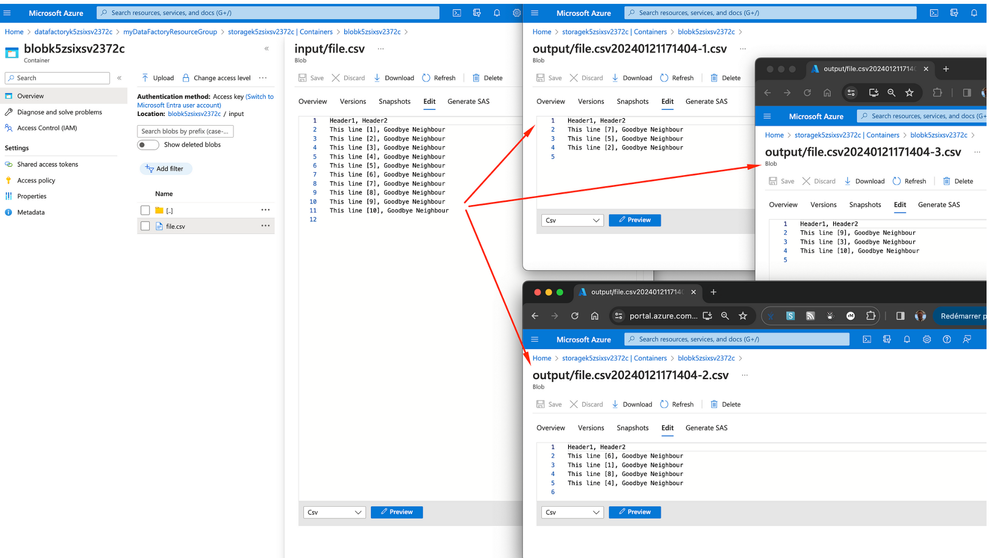

In this sample, each output file name is built from the input file name, the current date and the index of the output file.
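The partition count and the file name pattern described above can be sketched in Bicep as follows. This is an assumption-laden illustration rather than the repository's exact code. The container names `input` and `output`, the file name `sample.csv`, the stream names, the partition count of 3 and the resource name are placeholders. The data flow script is modeled on what the Data Factory designer typically generates for an inline delimited text source and sink, so compare it with the script shown in your own designer before relying on it.

```bicep
// Hypothetical parameter names: adjust them to your own deployment.
param dataFactoryName string
param linkedServiceName string

resource dataFactory 'Microsoft.DataFactory/factories@2018-06-01' existing = {
  name: dataFactoryName
}

// Data flow script kept in a multi-line string so that its single quotes
// need no escaping. All names and values inside it are placeholders.
var splitScript = '''
source(allowSchemaDrift: true,
    validateSchema: false,
    ignoreNoFilesFound: false,
    format: 'delimited',
    container: 'input',
    fileName: 'sample.csv',
    columnDelimiter: ',',
    columnNamesAsHeader: true) ~> source1
source1 sink(allowSchemaDrift: true,
    validateSchema: false,
    format: 'delimited',
    container: 'output',
    columnDelimiter: ',',
    columnNamesAsHeader: true,
    filePattern:(concat('sample_', toString(currentDate(), 'yyyyMMdd'), '_[n].csv')),
    skipDuplicateMapInputs: true,
    skipDuplicateMapOutputs: true,
    partitionBy('roundRobin', 3)) ~> sink1
'''

resource splitDataFlow 'Microsoft.DataFactory/factories/dataflows@2018-06-01' = {
  parent: dataFactory
  name: 'SplitFileDataFlow' // hypothetical name
  properties: {
    type: 'MappingDataFlow'
    typeProperties: {
      // Source and sink both use inline datasets on the same linked service.
      sources: [
        {
          name: 'source1'
          linkedService: {
            referenceName: linkedServiceName
            type: 'LinkedServiceReference'
          }
        }
      ]
      sinks: [
        {
          name: 'sink1'
          linkedService: {
            referenceName: linkedServiceName
            type: 'LinkedServiceReference'
          }
        }
      ]
      script: splitScript
    }
  }
}
```

In this sketch, `partitionBy('roundRobin', 3)` on the sink drives the number of output files, and the `[n]` token in `filePattern` is replaced by the partition index, so the three outputs would be named after the input file, the current date and that index.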

Split the file through the Pipeline

Through the procedure located here https://github.com/JamesDLD/bicep-data-factory-data-flow-split-file we have created an Azure Data Factory pipeline named “ArmtemplateSampleSplitFilePipeline”. You can trigger it to launch the Data Flow that will split the file.
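For readers who want to see what such a pipeline can look like in Bicep, here is a minimal sketch that wraps a Data Flow in a single Execute Data Flow activity. Only the pipeline name comes from this article. The parameter names, the activity name and the compute settings are assumptions, and the repository's actual definition may differ.

```bicep
// Hypothetical parameter names: adjust them to your own deployment.
param dataFactoryName string
param dataFlowName string

resource dataFactory 'Microsoft.DataFactory/factories@2018-06-01' existing = {
  name: dataFactoryName
}

resource splitPipeline 'Microsoft.DataFactory/factories/pipelines@2018-06-01' = {
  parent: dataFactory
  name: 'ArmtemplateSampleSplitFilePipeline'
  properties: {
    activities: [
      {
        name: 'SplitFile' // hypothetical activity name
        type: 'ExecuteDataFlow'
        typeProperties: {
          // Points to the Data Flow that performs the split.
          dataFlow: {
            referenceName: dataFlowName
            type: 'DataFlowReference'
          }
          // Assumed compute size for the Spark cluster that runs the Data Flow.
          compute: {
            coreCount: 8
            computeType: 'General'
          }
        }
      }
    ]
  }
}
```

If the Data Flow is declared in the same Bicep file, referencing its symbolic name (for example `referenceName: splitDataFlow.name`) instead of a parameter lets Bicep infer the deployment order.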

The following screenshot illustrates the split result done through Azure Data Factory Data Flow.

Conclusion

Adopting Bicep, or any other Infrastructure as Code (IaC) tool, brings efficiency and agility. It is a real accelerator when designing infrastructures, and it makes them reproducible and testable.

See You in the Cloud

Jamesdld
