Maximizing AI performance with the Azure NC A100 v4-series

By: Hugo Affaticati, Sonal Doomra, Jon Shelley, Sherry Wang, and John Lee

Introduction

Azure recently launched its new NC A100 v4-series virtual machines (VMs), powered by NVIDIA A100 80GB PCIe Tensor Core GPUs and 3rd generation AMD EPYC 7V13 (Milan) processors. These powerful and scalable instances accelerate low- to mid-size artificial intelligence (AI) training and inference workloads such as autonomous vehicle training, oil & gas reservoir simulation, video processing, AI/ML web services, and much more. They are available in several sizes and configurations, ranging from one to four GPUs, to support different computational needs. You can find more details about the product on the Microsoft Docs product page.

In this document, we share outstanding AI benchmark results along with the best practices and configuration details you need to replicate them. We show not only that the NC A100 v4-series VMs perform competitively against similar on-premises offerings, but also that they are the most performant and cost-competitive NC-series offering for a diverse set of workloads.

AI Benchmarks

We ran the NVIDIA Deep Learning Examples and MLPerf™ benchmarks, which consist of real-world, compute-intensive AI workloads, to best simulate customers’ needs.

NVIDIA Deep Learning Examples

NVIDIA Deep Learning Examples provide sample code for various deep learning algorithms, optimized for accuracy and performance with the NVIDIA CUDA-X software stack, which includes cuDNN, NCCL, cuBLAS, and more. In this document, we showcase the results for three popular neural network architectures: BERT (Natural Language Processing), ResNet-50 (image classification) and SSD (object detection) benchmarks using the PyTorch framework.

MLPerf™ from MLCommons®

MLCommons® is an open engineering consortium of AI leaders from academia, research labs, and industry whose mission is to “build fair and useful benchmarks” that provide unbiased evaluations of training and inference performance for hardware, software, and services, all conducted under prescribed conditions. MLPerf™ tests are transparent and objective, so technology decision makers can rely on the results to make informed buying decisions.

Key Performance Results

NC A100 v4 is competitive with on-premises performance

Using the MLPerf™ benchmarks, we are able to compare the performance of our on-demand VMs to on-premises offerings. Both our verified results for MLPerf™ Inference v2.0 and our unverified results for MLPerf™ Training v2.0 are in line with the on-premises submissions in the closed division of MLPerf™ v2.0 inference and training. These results were observed on both single-GPU and multi-GPU systems and showcase Azure’s uncompromising commitment to enabling customers to use the best available on-demand cloud capabilities to solve their most complex problems. We at Azure do not believe you have to make any performance sacrifices to run your most demanding workloads in the cloud rather than on-premises. The NC A100 v4-series is a demonstration of that commitment.

NC A100 v4 is the most performant of the NC series

From the Deep Learning Examples benchmarks, we compared the results obtained with the NC24ads A100 v4 to those obtained with the NC6s v3. Both are single-GPU virtual machines, from generation 4 and generation 3 respectively; the NC6s v3 is powered by an NVIDIA V100 Tensor Core GPU. Results show an outstanding 503% increase in sequences per second from generation 3 to generation 4 on BERT SQuAD inference. Across all benchmarks, the NC A100 v4-series shows a significant performance boost over the previous GPU generation.
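A percentage increase is easy to misread as a multiple. As a minimal arithmetic sketch, a 503% increase means the generation 4 VM processes 1 + 503/100 = 6.03 times as many sequences per second as the generation 3 VM. The helper names below are hypothetical, introduced only for illustration.

```python
def speedup_from_percent_increase(pct: float) -> float:
    """Convert a percentage increase into a ratio new/old (e.g. 100% -> 2.0x)."""
    return 1.0 + pct / 100.0


def percent_increase_from_speedup(ratio: float) -> float:
    """Inverse conversion: a ratio new/old expressed as a percentage increase."""
    return (ratio - 1.0) * 100.0


factor = speedup_from_percent_increase(503.0)
print(f"503% increase = {factor:.2f}x")  # prints: 503% increase = 6.03x
```

In other words, "503% more" is roughly "six times as much", not "five times as much".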

NC A100 v4 is cost competitive

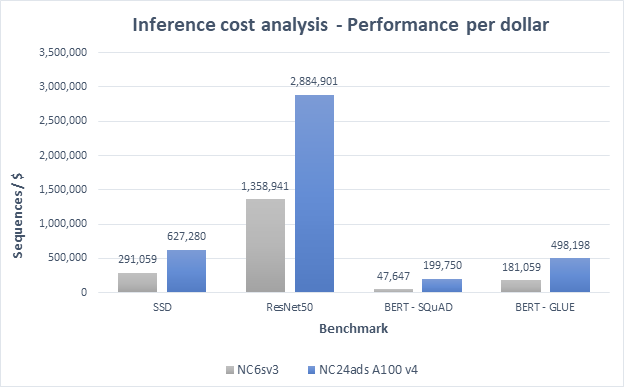

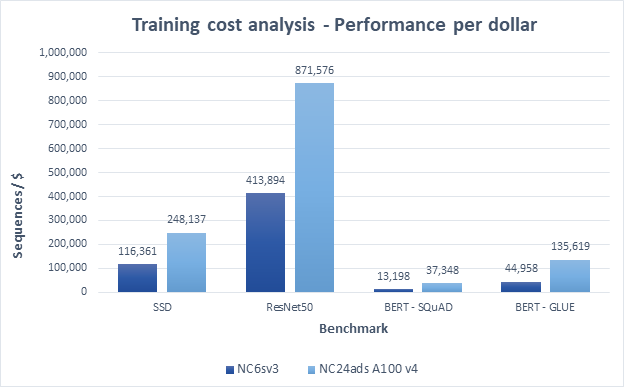

Using the Deep Learning Examples, we calculated the number of sequences that can be processed per dollar on the NCsv3-series and on the NC A100 v4-series. For these calculations, we used the prices of machines available in the East US 2 region under a pay-as-you-go contract without discounts. For the inference models we ran, we see at least a 2x improvement in performance per dollar. Moreover, the BERT SQuAD inference benchmark (Figure 1) shows a staggering 419% increase in the number of sequences per dollar compared to the NCsv3-series. For training, the NC A100 v4-series is two to three times more cost efficient than the NC6s v3, as shown in Figure 2.
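The per-dollar figures follow from throughput and the hourly pay-as-you-go price: sequences per dollar = samples/s × 3,600 s/h ÷ price per hour. Because of this, the per-dollar gain equals the throughput ratio divided by the hourly price ratio, so (assuming both figures come from the same runs) a per-dollar increase smaller than the throughput increase reflects the higher hourly price of the newer VM. The sketch below assumes simple hourly pricing; the function names and all input values are hypothetical placeholders, not the published results or actual East US 2 prices.

```python
SECONDS_PER_HOUR = 3600


def sequences_per_dollar(samples_per_s: float, price_per_hour: float) -> float:
    """Sequences processed per dollar at a given throughput and hourly VM price."""
    if price_per_hour <= 0:
        raise ValueError("price_per_hour must be positive")
    return samples_per_s * SECONDS_PER_HOUR / price_per_hour


def percent_increase(new: float, old: float) -> float:
    """Percentage increase of `new` over `old`."""
    return (new - old) / old * 100.0


# Hypothetical inputs for illustration only (not measured results or real prices).
new_vm = sequences_per_dollar(samples_per_s=200.0, price_per_hour=4.0)
old_vm = sequences_per_dollar(samples_per_s=40.0, price_per_hour=3.0)
print(f"{new_vm:,.0f} vs {old_vm:,.0f} sequences per dollar "
      f"({percent_increase(new_vm, old_vm):.0f}% increase)")
# prints: 180,000 vs 48,000 sequences per dollar (275% increase)
```

Plugging in measured throughput and current regional prices reproduces the kind of comparison shown in the figures.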

Figure 1 – Number of sequences processed per dollar spent on Azure with NC6s v3 and NC24ads A100 v4 across four benchmarks for Inference.

Figure 2 – Number of sequences processed per dollar spent on Azure with NC6s v3 and NC24ads A100 v4 across four benchmarks for Training.

Highlights of Performance Results

The highlights of the benchmark results are shown below.

Inference

The tables below showcase performance results of NC24ads A100 v4 (1 GPU) VMs for inference scenarios in NVIDIA Deep Learning Examples and MLPerf Inference v2.0, respectively.

| System | NC24ads A100 v4 | | | |
|---|---|---|---|---|
| Version | NVIDIA Deep Learning Examples Inference | | | |
| Batch size | 64 | | | |
| Benchmark | BERT – SQuAD (fp32) | BERT – GLUE (fp32) | ResNet-50 | SSD |
| Score (samples/s) | 201.0 | 410.6 | 2737.4 | 756.8 |

| System | NC24ads A100 v4 | | | | | | |
|---|---|---|---|---|---|---|---|
| Version | MLPerf™ Inference v2.0 [1] | | | | | | |
| Scenario | Offline | | | | | | |
| Model | BERT | 3D-UNet (default) | 3D-UNet (high accuracy) | ResNet | RNN-T | SSD-small | SSD-large |
| Score (samples/s) | 3,073 | 2.9 | 2.9 | 35,788 | 12,822 | 48,301 | 875.5 |
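The Score (samples/s) figures in the inference tables are throughput numbers: samples processed divided by wall-clock time. The NVIDIA Deep Learning Examples scripts and the MLPerf harness each have their own timing logic; the sketch below is only a hypothetical, framework-agnostic illustration of the idea. It assumes a `step` callable (a name introduced here) that processes one batch and returns only once the work has finished. On a GPU that means synchronizing before the clock is read, because CUDA kernel launches are asynchronous.

```python
import time
from typing import Callable


def measure_throughput(
    step: Callable[[int], None],
    batch_size: int,
    warmup_iters: int = 5,
    timed_iters: int = 20,
) -> float:
    """Return throughput in samples/s for `step`, which processes one batch.

    Hypothetical helper: `step` must block until the batch is fully processed
    (for PyTorch on a GPU, call torch.cuda.synchronize() inside it), otherwise
    the timer only measures asynchronous kernel launches.
    """
    if batch_size <= 0 or timed_iters <= 0:
        raise ValueError("batch_size and timed_iters must be positive")
    # Warm-up iterations are excluded from timing (lazy init, autotuning, caches).
    for _ in range(warmup_iters):
        step(batch_size)
    start = time.perf_counter()
    for _ in range(timed_iters):
        step(batch_size)
    elapsed = time.perf_counter() - start
    return batch_size * timed_iters / elapsed


# Stand-in CPU workload; replace with a real model forward pass.
def dummy_step(n: int) -> None:
    sum(i * i for i in range(n * 1_000))


rate = measure_throughput(dummy_step, batch_size=64)
print(f"{rate:.1f} samples/s")
```

Excluding warm-up iterations matters because the first batches often include one-time costs that would otherwise depress the reported throughput.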

Training

The tables below show the performance of the NC24ads A100 v4 (1 GPU) and NC96ads A100 v4 (4 GPUs) VMs for training in NVIDIA Deep Learning Examples and MLPerf™ Training v2.0, respectively.

| System | NC24ads A100 v4 | | | |
|---|---|---|---|---|
| Version | NVIDIA Deep Learning Examples Training | | | |
| Batch size | 64 | | | |
| Benchmark | BERT – SQuAD (fp32) | BERT – GLUE (fp32) | ResNet-50 | SSD |
| Score (samples/s) | 64.5 | 59.8 | 847.2 | 4.8 |

| System | NC96ads A100 v4 | | | | | | |
|---|---|---|---|---|---|---|---|
| Version | MLPerf™ Training v2.0 [2] | | | | | | |
| Model | BERT | Mask R-CNN | Minigo | ResNet-50 | RetinaNet | RNN-T | 3D U-Net |
| Score (time to train, minutes) | 52.1 * | 91.3 * | 547.7 * | 60.0 * | 217.0 * | 64.1 * | 54.3 * |

* results not verified by MLCommons Association

Recreate the Results in Azure

To get started with NC A100 v4-series, please visit the following links:

- Product overview - NC A100 v4-series

- Setting up your virtual machine - Getting started with the NC A100 v4-series

- Setting up your virtual machine with MIG instances - Getting started with Multi-Instance GPU (MIG) on the NC A100 v4-series

- Running the Deep Learning Examples (Training and Inference) - Benchmarking the NC A100 v4, NCsv3, and NCas_T4_v3 series with NVIDIA Deep Learning Examples

- Running the MLPerf Inference v2.0 - A quick start guide to benchmarking AI models in Azure: MLPerf Inferencing v2.0

- Running the MLPerf Training v2.0 - A quick start guide to benchmarking AI models in Azure: MLPerf Training v2.0

[1] Results verified by MLCommons Association. The MLPerf™ name and logo are trademarks of MLCommons Association in the United States and other countries. All rights reserved. Unauthorized use is strictly prohibited. See www.mlcommons.org for more information.

[2] Results not verified by MLCommons Association. The MLPerf™ name and logo are trademarks of MLCommons Association in the United States and other countries. All rights reserved. Unauthorized use is strictly prohibited. See www.mlcommons.org for more information.
