Get insights from customer interactions with Azure Communication Services and Azure OpenAI Service

Customer conversations are a gold mine of information that can help service providers create a better customer experience. When a customer contacts a service provider, they are often routed between departments and speak to several agents before their concerns are addressed. Along the way, the customer is usually asked to provide specifics about their issue, and service providers do their best to solve it. With Azure Communication Services and Azure OpenAI Service, we can automatically generate insights from the customer conversation, including its topic, summary, highlights, and more. During the conversation, these insights give agents the context they need to help the customer; afterward, they help organizations learn about their customer support experience.

You can access the completed code for this blog on GitHub.

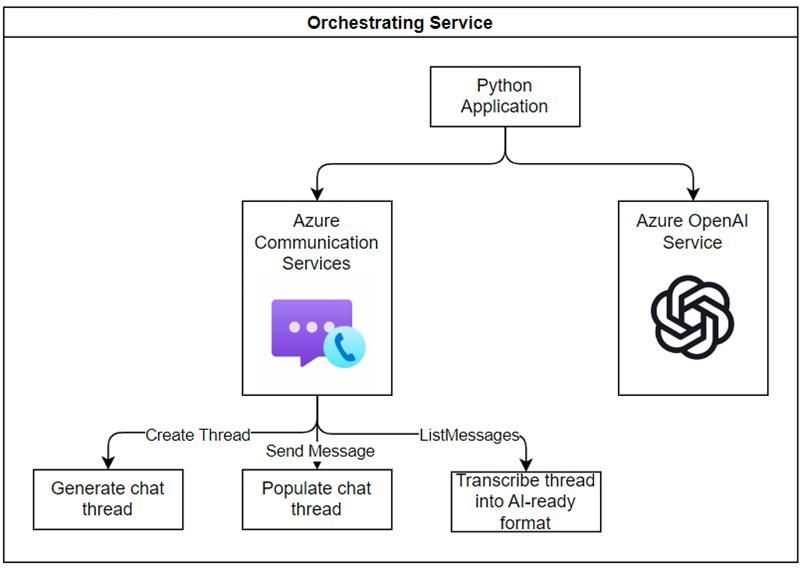

Architecture

Before we get started, let's quickly review the proposed architecture of our solution. We will use a Python console application as our “orchestrating service” with access to an Azure Communication Services resource. This console application will create the chat threads from which we will generate insights. In a more complex architecture, the console application would be replaced by a service that clients use to manage the creation of, and access to, chat threads. The console application will then connect to Azure OpenAI Service to generate insights from the thread transcript and the prompts we provide.

Prerequisites

To get started we will need to:

- Create an Azure account with an active subscription. For details, see Create an account for free.

- Create an Azure Communication Services resource. For details, see Quickstart: Create and manage Communication Services resources. You'll need to record your resource endpoint and connection string for this quickstart.

- Create an Azure OpenAI Service resource. See instructions.

- Deploy an Azure OpenAI Service model (you can use GPT-3, ChatGPT, or GPT-4 models). See instructions.

- Install Python 3.11.2

- Install dependencies with pip:

pip install openai azure-communication-chat azure-communication-identity

Setting up the console application

Open your terminal or command window, create a new directory for your application, and navigate to it.

In your favorite text editor, create a file called chatInsights.py and save it in the directory you just created, which this post calls chatInsights. In the file, add the imports you will need for this project, along with the connection string and endpoint for Azure Communication Services, which you can find in the Azure portal under your Azure Communication Services resource.
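As an alternative to pasting the values directly into the file, the configuration step might be sketched as follows. The environment variable names (ACS_CONNECTION_STRING, ACS_ENDPOINT) and the Settings and load_settings names are assumptions made for this illustration; they are not taken from the completed code.

```python
import os
from dataclasses import dataclass


@dataclass(frozen=True)
class Settings:
    """Values copied from the Azure Communication Services resource in the portal."""
    acs_connection_string: str
    acs_endpoint: str


def load_settings(env=os.environ):
    """Read the settings from environment variables (hypothetical names)."""
    required = ("ACS_CONNECTION_STRING", "ACS_ENDPOINT")
    missing = [name for name in required if not env.get(name)]
    if missing:
        # Fail early with a clear message instead of a confusing SDK error later.
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return Settings(
        acs_connection_string=env["ACS_CONNECTION_STRING"],
        acs_endpoint=env["ACS_ENDPOINT"],
    )
```

Keeping the connection string out of the source file also makes it harder to commit a secret by accident.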

Configuring the chat client

For this example, we will generate a summary from a pre-populated chat thread. In a real-world scenario, you would be accessing existing threads that have been created on your resource. To create the sample thread, we will start by creating two identities that will be mapped to two users. We will then initialize our chat client with those identities. Finally, we will populate the chat thread with some sample messages we have created.

To set up the chat clients, we will create two chat participants using the identities we generated, and then add the participants to a chat thread.

Next, we will populate the chat thread with a sample conversation between a customer support agent and a customer about a dishwasher.
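A minimal sketch of these steps, assuming the azure-communication-identity and azure-communication-chat packages, could look like the following. The function names, the thread topic, and the sample messages are illustrative and are not the ones from the completed code. For brevity, this sketch sends every message through the agent's client and only varies the sender display name, which is a simplification.

```python
from datetime import datetime, timezone

# Illustrative conversation; not the sample messages from the original post.
SAMPLE_MESSAGES = [
    ("Agent", "Hello, thanks for contacting support. How can I help you today?"),
    ("Customer", "My dishwasher stops mid-cycle and leaves water at the bottom."),
    ("Agent", "Sorry to hear that. Have you checked whether the filter is clogged?"),
    ("Customer", "I cleaned the filter and it drains now. Thank you!"),
]


def create_sample_thread(connection_string, endpoint):
    """Create two identities, add them to a new thread, return its thread client."""
    # Imported here so the helpers below can be used without the SDKs installed.
    from azure.communication.chat import (
        ChatClient,
        ChatParticipant,
        CommunicationTokenCredential,
    )
    from azure.communication.identity import CommunicationIdentityClient

    identity_client = CommunicationIdentityClient.from_connection_string(connection_string)
    agent = identity_client.create_user()
    customer = identity_client.create_user()
    token = identity_client.get_token(agent, scopes=["chat"])

    chat_client = ChatClient(endpoint, CommunicationTokenCredential(token.token))
    now = datetime.now(timezone.utc)
    participants = [
        ChatParticipant(identifier=agent, display_name="Agent", share_history_time=now),
        ChatParticipant(identifier=customer, display_name="Customer", share_history_time=now),
    ]
    result = chat_client.create_chat_thread("Support", thread_participants=participants)
    return chat_client.get_chat_thread_client(result.chat_thread.id)


def populate_thread(chat_thread_client, messages=SAMPLE_MESSAGES):
    """Send each (sender, text) pair to the thread in order."""
    for sender, text in messages:
        chat_thread_client.send_message(content=text, sender_display_name=sender)
```

Calling populate_thread(create_sample_thread(connection_string, endpoint)) would create and fill the thread against a real resource.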

Generating a conversation transcript

Now that the thread is generated, we will convert it into a transcript that can be fed to the Azure OpenAI Service model. We will use the list_messages method of our chat thread client to get the messages from the thread. We can pass a start time to limit how far back to fetch; in this case, we will get the messages from the last day. We will append each message to a single string.
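The transcript step might be sketched like this. It assumes each message exposes type, sender_display_name, content.message, and created_on, as the ChatMessage objects returned by list_messages do; the function names and the "Sender: text" line format are choices made for this illustration. Sorting by created_on keeps the transcript chronological regardless of the order in which the service returns messages.

```python
from datetime import datetime, timedelta, timezone
from types import SimpleNamespace


def build_transcript(messages):
    """Join the text messages of a thread into one 'Sender: text' line each."""
    # Skip system messages such as participant or topic updates.
    text_messages = [m for m in messages if m.type == "text"]
    text_messages.sort(key=lambda m: m.created_on)
    return "\n".join(
        f"{m.sender_display_name}: {m.content.message}" for m in text_messages
    )


def fetch_transcript(chat_thread_client, days=1):
    """Fetch messages from the last `days` days and turn them into a transcript."""
    start_time = datetime.now(timezone.utc) - timedelta(days=days)
    return build_transcript(chat_thread_client.list_messages(start_time=start_time))


# Stand-in objects shaped like SDK messages, for a quick offline check.
now = datetime.now(timezone.utc)
sample = [
    SimpleNamespace(type="text", sender_display_name="Customer",
                    content=SimpleNamespace(message="My dishwasher will not drain."),
                    created_on=now),
    SimpleNamespace(type="participantAdded", sender_display_name=None,
                    content=SimpleNamespace(message=None),
                    created_on=now - timedelta(minutes=2)),
    SimpleNamespace(type="text", sender_display_name="Agent",
                    content=SimpleNamespace(message="Hello, how can I help?"),
                    created_on=now - timedelta(minutes=1)),
]
transcript = build_transcript(sample)
print(transcript)
```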

Summarize the chat thread

Finally, we will use the transcript of the conversation to ask Azure OpenAI Service to generate insights. As part of the prompt to the GPT model, we will ask for:

- A generated topic for the thread

- A generated summary for the thread

- A generated sentiment for the thread
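One way to phrase such a request is sketched below. It assumes version 1.0 or later of the openai package (which provides the AzureOpenAI client) and a chat-capable model deployment; the completed code may use a different API style. The prompt wording, the function names, and the system message are illustrative, and the API version is left as a parameter because the right value depends on your resource.

```python
def build_insights_prompt(transcript):
    """Ask for a topic, a summary, and a sentiment for the given transcript."""
    return (
        "For the following customer support conversation, provide:\n"
        "1. Topic: a short topic for the thread\n"
        "2. Summary: a brief summary of the thread\n"
        "3. Sentiment: the overall sentiment of the thread\n\n"
        f"Conversation:\n{transcript}"
    )


def make_client(endpoint, api_key, api_version):
    """Create an Azure OpenAI client (assumes openai>=1.0 is installed)."""
    from openai import AzureOpenAI

    return AzureOpenAI(azure_endpoint=endpoint, api_key=api_key, api_version=api_version)


def generate_insights(client, deployment, transcript):
    """Send the prompt to a chat model deployment and return the reply text."""
    response = client.chat.completions.create(
        model=deployment,  # the name you gave your Azure OpenAI deployment
        messages=[
            {"role": "system", "content": "You analyze customer support conversations."},
            {"role": "user", "content": build_insights_prompt(transcript)},
        ],
        temperature=0,  # keep the output as repeatable as possible
    )
    return response.choices[0].message.content
```

Separating the prompt builder from the API call makes it easy to adjust the wording and inspect the prompt without spending tokens.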

Run it

Now that everything is ready, we will run it. The application will create a thread, populate it with messages, and then export the messages and summarize them. Remember, in a production environment the threads will be populated directly by your users, so you will only need to focus on extracting the messages and summarizing them. To run the full code, run the following command from within the chatInsights folder:

python chatInsights.py

Once you run the application, you will see the insights that Azure OpenAI Service generated from the conversation. You can use these insights to give your service providers context during a conversation, or review them after the fact to improve your customer support experience.

The completed code is available on GitHub. Now it is your turn to try it! Let us know how it goes in the comments.
