Public Preview - Raw Media Access for Voice & Video Calling

This week we’re releasing raw media audio access as a public preview feature in the Calling JavaScript SDK for web browsers (1.6.1-beta). In this blog post we’ll discuss potential uses of this feature and set you up with an illuminating sample that translates audio in real-time. Azure Communication Services real-time voice and video calling can be used in a wide range of scenarios:

- Virtual visits applications connecting businesses to customers for scheduled appointments like medical appointments or a mortgage application

- Ad hoc communication such as LinkedIn’s built-in messaging

- Unscheduled customer interaction such as agents responding to inbound phone calls on a support hotline

In all of these scenarios, your app may benefit from a wide ecosystem of cloud services that analyze audio streams in real-time and provide useful data. Two Microsoft examples are Nuance and Azure Cognitive Services. Nuance can monitor virtual medical appointments and help clinicians with post-visit notes. And Azure Cognitive Services can provide real-time transcription and translation capabilities.

When you use Azure Communication Services, you enjoy a secure auto-scaling communication platform with complete control over your data. Raw media access is a practical realization of this promise, allowing your Web clients to pull audio from calls, and inject audio into a call, all in real-time, with a socket-like interface. Raw media access is just one of several ways to connect to the Azure Communication Services calling data plane with client SDKs. You can also use service SDKs, get phone numbers from Azure or bring your own carrier, connect Power Virtual Agents, and connect Teams.

You can learn more about raw media access by checking out the concept and quick start documentation. The rest of this post discusses a sample we built using Azure Cognitive Services.

What does the sample do?

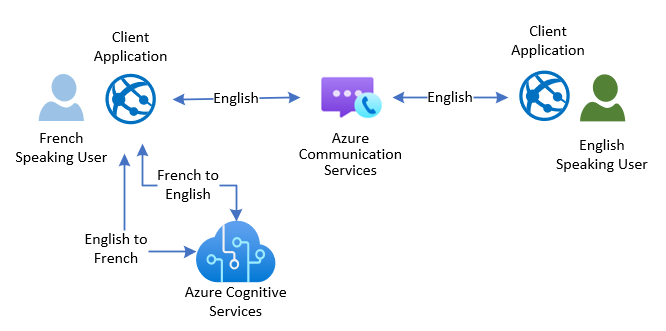

Imagine an end-user in a call who only understands French, but everyone else on the call is speaking English. We can do two things to help this French user communicate with the group:

- The French user can mute incoming English audio and listen to a real-time “robot” translation in French. This uses inbound raw media access to pull incoming English audio, pipes it to Azure Cognitive Services for translation, and then plays the translated French audio locally.

- When that same user speaks French, they can generate an English translation and inject that translation into the call, so other users hear an English robot. This pulls audio from the local microphone, pipes it to Azure Cognitive Services for a speech-to-speech translation, and then uses Azure Communication Services outbound media access.

This flow is shown in the diagram above.

Sample

To get started you’re going to want to get basic Web Calling up and running. We’ve built this Cognitive Services sample as a branch of the web calling tutorial sample hosted on GitHub.

Navigate to the project folder, then install and start the application on your local machine.

Once your tutorial sample application is up and running, stop it and check out the speech translation branch.

Install dependencies and run this branch with translation:

Setting up Azure Cognitive Services

Your app will need an Azure Cognitive Services Speech resource for translation. Follow these directions to create an Azure Speech resource and find its key and location. Then add your Azure Speech key and region to the `config.json` file:

Azure Cognitive Services supports 40+ languages for speech-to-speech translation. We’re going to pick French and English with the code below. This is all in `MakeCall.js`.
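As a rough illustration of what picking a language pair can look like, here is a minimal sketch built on the Speech SDK’s `SpeechTranslationConfig`. The helper name `buildTranslationConfig`, its option names, and the example voice are our own assumptions for illustration and are not taken from `MakeCall.js`. The SDK module is passed in as a parameter so the helper works whether you load it through a bundler or a script tag.

```javascript
// Sketch only: build a translation config for one direction of the call.
// `SpeechSDK` is the microsoft-cognitiveservices-speech-sdk module.
// The helper name and option names are hypothetical.
function buildTranslationConfig(SpeechSDK, { key, region, from, to, voice }) {
  const config = SpeechSDK.SpeechTranslationConfig.fromSubscription(key, region);
  config.speechRecognitionLanguage = from; // language being spoken, e.g. "en-US"
  config.addTargetLanguage(to);            // language to translate into, e.g. "fr"
  if (voice) {
    config.voiceName = voice;              // request synthesized ("robot") audio
  }
  return config;
}

// Example with placeholder values (key and region come from config.json):
// const englishToFrench = buildTranslationConfig(SpeechSDK, {
//   key, region, from: "en-US", to: "fr", voice: "fr-FR-DeniseNeural",
// });
```

You would typically build two of these, one for each direction: English to French for incoming audio and French to English for outgoing audio.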

Incoming audio translation

There are two key real-time operations in the sample. In this first flow we take the incoming audio from the ACS call using `getOutPutAudioStreamTrack` and connect it to a `TranslationRecognizer`, which translates it to the selected language.
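The incoming flow can be sketched roughly as follows. This is not the sample’s actual code: the function name `startIncomingTranslation` and its callbacks are hypothetical, and it assumes you already have a `SpeechTranslationConfig` and have wrapped the incoming call track in a browser `MediaStream` (for example with `new MediaStream([track])`). The Speech SDK calls used here (`AudioConfig.fromStreamInput`, `TranslationRecognizer`, and its `recognized` and `synthesizing` events) are part of the JavaScript Speech SDK.

```javascript
// Sketch only: feed an incoming MediaStream to a TranslationRecognizer.
// `SpeechSDK` is the microsoft-cognitiveservices-speech-sdk module; the
// function name and callback shape are hypothetical.
function startIncomingTranslation(SpeechSDK, translationConfig, incomingStream, targetLanguage, handlers) {
  // In the browser, the Speech SDK accepts a MediaStream as an audio source.
  const audioConfig = SpeechSDK.AudioConfig.fromStreamInput(incomingStream);
  const recognizer = new SpeechSDK.TranslationRecognizer(translationConfig, audioConfig);

  // Fires once per finished utterance with the translated text.
  recognizer.recognized = (_sender, event) => {
    if (event.result.reason === SpeechSDK.ResultReason.TranslatedSpeech) {
      handlers.onText(event.result.translations.get(targetLanguage));
    }
  };

  // Fires with synthesized audio when a voice is set on the config.
  recognizer.synthesizing = (_sender, event) => {
    if (event.result.reason === SpeechSDK.ResultReason.SynthesizingAudio && handlers.onAudio) {
      handlers.onAudio(event.result.audio); // ArrayBuffer of translated speech
    }
  };

  recognizer.startContinuousRecognitionAsync();
  return recognizer; // stop later with recognizer.stopContinuousRecognitionAsync()
}
```

Returning the recognizer lets the caller stop translation when the user unmutes the original audio or leaves the call.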

Outgoing audio translation

This next flow takes the local user’s microphone input, translates that audio using another `TranslationRecognizer`, and sends the translated audio to the ACS call using `setInputAudioStreamTrack`.
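One way to turn synthesized translation audio into a track that can be injected into a call is to play it through the Web Audio API into a `MediaStreamAudioDestinationNode`. The sketch below shows that idea only. The helper name `createInjectableTrack` is hypothetical, the sample itself may do this differently, and it assumes each audio chunk from the recognizer is a self-contained buffer that `decodeAudioData` can decode.

```javascript
// Sketch only: expose an audio track fed by decoded translation audio.
// `audioContext` is a standard Web Audio AudioContext; the helper name is
// hypothetical and not part of any SDK.
function createInjectableTrack(audioContext) {
  // Anything connected to this node comes out on `destination.stream`.
  const destination = audioContext.createMediaStreamDestination();
  const track = destination.stream.getAudioTracks()[0];

  // Decode one chunk of synthesized speech and play it into the track.
  // Chunks are not queued here, so closely spaced chunks may overlap.
  async function play(audioData) {
    const buffer = await audioContext.decodeAudioData(audioData);
    const source = audioContext.createBufferSource();
    source.buffer = buffer;
    source.connect(destination);
    source.start();
  }

  return { track, play };
}
```

In use, you would call `play(event.result.audio)` from the recognizer’s `synthesizing` event and hand `track` to `setInputAudioStreamTrack` as described above. A production version would likely queue chunks so that they play back to back.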

Thanks for reading!

We hope this new feature will enable productive extensions and experimentation in your voice calling applications. And yes – we are working on enabling this same raw media access capability for the Calling iOS and Android SDKs. Follow this blog and hit up our GitHub page to get the latest updates from the Azure Communication Services team!
