Distributed ML Training for Lane Detection, powered by NVIDIA and Azure NetApp Files

Table of Contents

Cloud resources and services requirements

Distributed ML training for lane detection with Run:ai

Distributed training for lane detection use case using the TuSimple dataset

Create a delegated subnet for Azure NetApp Files

Azure NetApp Files configuration

Setting up Azure Kubernetes Service

Peering of AKS virtual network and Azure NetApp Files virtual network

Set up Azure NetApp Files back-end and storage class

Installing and running Run:ai distributed lane detection training

Download and process the TuSimple dataset as Run:ai job

Perform distributed lane detection training using Horovod

Azure NetApp Files service levels

Dynamically change the service level of a volume

Leveraging Azure NetApp Files snapshot data protection

Deploy and set up volume snapshot components on AKS

Restore data from an Azure NetApp Files snapshot

Abstract

Microsoft, NetApp, and Run:ai have partnered on this article to demonstrate the capabilities of Azure NetApp Files together with the Run:ai platform for simplifying the orchestration of AI workloads. The article provides a reference architecture that streamlines both data pipelines and workload orchestration for distributed machine learning training for lane detection, making full use of NVIDIA GPUs.

Co-authors: Muneer Ahmad, Verron Martina (NetApp), Ronen Dar (Run:ai)

Introduction

Microsoft and NetApp have teamed up with Run:ai, a company that virtualizes AI infrastructure to allow faster AI experimentation with full GPU utilization. The goal is to enable teams to speed up AI by running many experiments in parallel, with fast access to data and cloud compute resources that scale on demand. Run:ai enables full NVIDIA GPU utilization in Azure by automating resource allocation, and the proven architecture of Azure NetApp Files enables every experiment to run at maximum speed by eliminating data pipeline obstructions.

Microsoft, NetApp, and Run:ai have joined forces to offer customers a future-proof platform for their AI journey in Azure. From analytics and high-performance computing (HPC) to autonomous decisions (where customers can optimize their IT investments by paying only for what they need, when they need it), the three-way partnership offers a single unified experience in the Azure cloud.

Target audience

Data science incorporates multiple disciplines in IT and business, so the target audience includes multiple personas:

- Data scientists need the flexibility to use the tools and libraries of their choice.

- Data engineers need to know how the data flows and where it resides.

- Autonomous driving use-case experts.

- Cloud administrators and architects set up and manage cloud (Azure) resources.

- DevOps engineers need the tools to integrate new AI/ML applications into their continuous integration and continuous deployment (CI/CD) pipelines.

- Business users want to have access to AI/ML applications.

In this article, we describe how Run:ai, NVIDIA GPU-powered compute in Microsoft Azure and Azure NetApp Files help each of these roles bring value to business.

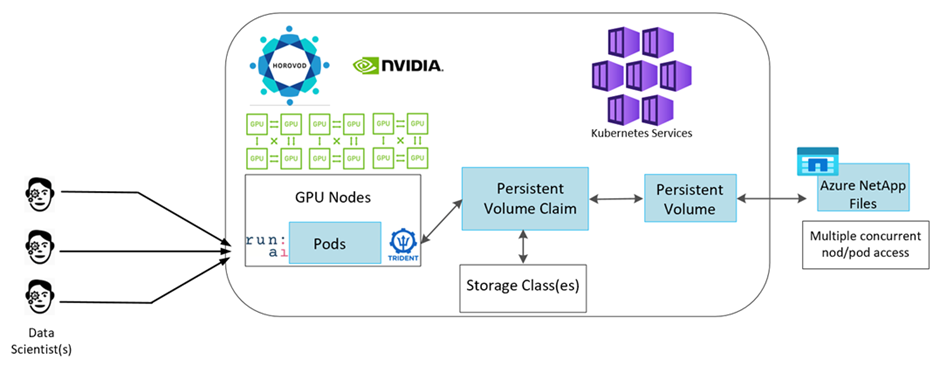

Solution overview

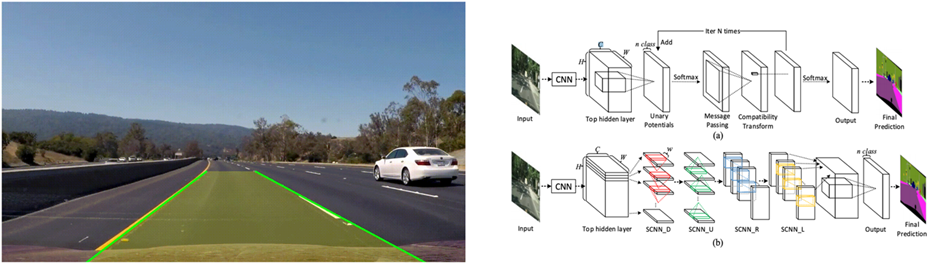

In this architecture, the focus is on the most computationally intensive part of the AI or machine learning (ML) distributed training process of lane detection. Lane detection is one of the most important tasks in autonomous driving, which helps to guide vehicles by localization of the lane markings. Static components like lane markings guide the vehicle to drive on the highway interactively and safely.

Convolutional Neural Network (CNN)-based approaches have pushed scene understanding and segmentation to a new level. However, they don't perform well for objects with long structures and for regions that could be occluded (for example, poles, shade on the lane, and so on). Spatial Convolutional Neural Network (SCNN) generalizes the CNN to a rich spatial level. It allows information propagation between neurons in the same layer, which makes it well suited for structured objects such as lanes, poles, or trucks with occlusions. This suitability arises because the spatial information can be reinforced, which preserves smoothness and continuity.

Thousands of scene images need to be ingested into the system to allow the model to learn and distinguish the various components in the dataset. These images cover different weather conditions, daytime and night-time scenes, multilane highway roads, and other traffic conditions.

Training requires data of good quality and in sufficient quantity. On a single GPU, or even on multiple GPUs, training can take days to weeks to complete. Data-distributed training can speed up the process by using multiple GPUs across multiple nodes. Horovod is one framework that enables distributed training, but reading data across clusters of GPUs can become a bottleneck. Azure NetApp Files provides high throughput and sustained low latency with scale-out and scale-up capabilities, so that GPUs are used to the best of their computational capacity. Our experiments verified that all the GPUs across the cluster were utilized at more than 96% on average when training lane detection using SCNN.

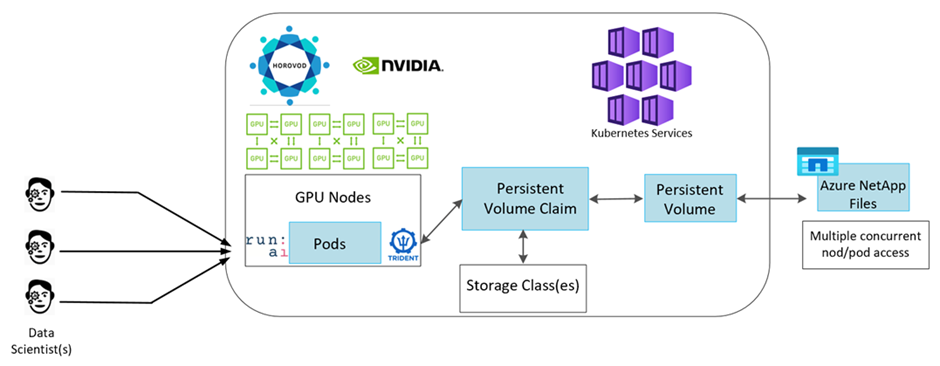

Solution technology

This section covers the technology requirements for the lane detection use case by implementing a distributed training solution at scale that fully runs in the Azure cloud. The figure below provides an overview of the solution architecture.

The elements used in this solution are:

- Azure Kubernetes Service (AKS)

- Azure Compute SKUs with NVIDIA GPUs

- Azure NetApp Files (ANF)

- Run:ai

- Astra Trident

- Horovod

- Helm

Links to all the elements mentioned here are listed in the Additional Information section.

Cloud resources and services requirements

The following table lists the hardware components that are required to implement the solution. The cloud components that are used in any implementation of the solution might vary based on customer requirements.

| Cloud | Minimum quantity |
|---|---|
| AKS | Minimum of three system nodes and three GPU worker nodes |
| Virtual machine (VM) SKU system nodes | Three Standard_DS2_v2 |
| VM SKU GPU worker nodes | Three Standard_NC6s_v3 |
| Azure NetApp Files | 2 TiB standard tier |

Software requirements

The following table lists the software components that are required to implement the solution. The software components that are used in any implementation of the solution might vary based on customer requirements.

| Software | Minimum version or other information |
|---|---|
| AKS – Kubernetes version | 1.18.14 |
| Run:ai CLI | v2.2.25 |
| Run:ai Orchestration Kubernetes Operator version | 1.0.109 |
| Horovod | 0.21.2 |
| Astra Trident | 21.01.1 |
| Helm | 3.0.0 |

Distributed ML training for lane detection with Run:ai

This section provides details on setting up the platform for performing lane detection distributed training at scale using the Run:ai orchestrator. We discuss installation of all the solution elements and running the distributed training job on the said platform. ML versioning is completed by using Azure NetApp Files snapshots linked with Run:ai experiments for achieving data and model reproducibility. ML versioning plays a crucial role in tracking models, sharing work between team members, reproducibility of results, rolling new model versions into production, and data provenance.

Furthermore, this article provides a performance evaluation on multiple GPU-enabled nodes across AKS.

Finally, this article wraps up with a section on data protection and recovery for our training environments. Azure NetApp Files version control (snapshots) can capture point-in-time versions of the data, trained models, and logs associated with each experiment. It has rich API support, which makes it easy to integrate with the Run:ai platform; all you have to do is trigger an event based on the training state. The state of the whole experiment must also be captured without changing anything in the code or the containers running on top of Kubernetes (K8s).

Distributed training for lane detection use case using the TuSimple dataset

In this article, distributed training is performed on the TuSimple dataset for lane detection. Horovod is used in the training code to run data-distributed training on multiple GPU nodes simultaneously in the Kubernetes cluster through AKS. The code for downloading and processing the TuSimple data is packaged as a container image. The processed data is stored on persistent volumes allocated by the Astra Trident plug-in. For training, a second container image is created, and it uses the data stored on the persistent volumes that were created while downloading the data.

To submit the data and training job, use Run:ai for orchestrating the resource allocation and management. Run:ai allows you to perform Message Passing Interface (MPI) operations which are needed for Horovod. This layout allows multiple GPU nodes to communicate with each other for updating the training weights after every training mini batch. It also enables monitoring of training through the UI and CLI, making it easy to monitor the progress of experiments.
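The weight update described above can be illustrated with a small, framework-free sketch. It is not Horovod's implementation; it only mimics the effect of an allreduce average across three workers, matching the three GPU processes used in this article. The function names, gradient values, and learning rate are illustrative assumptions.

```python
from typing import List


def allreduce_average(per_worker_grads: List[List[float]]) -> List[float]:
    """Average gradients element-wise across workers (conceptual allreduce)."""
    n_workers = len(per_worker_grads)
    return [sum(values) / n_workers for values in zip(*per_worker_grads)]


def sgd_step(weights: List[float], grads: List[float], lr: float) -> List[float]:
    """Apply one plain SGD update using the averaged gradients."""
    return [w - lr * g for w, g in zip(weights, grads)]


# Hypothetical gradients computed by three workers on their own mini batches.
worker_grads = [
    [1.0, 4.0],
    [2.0, 5.0],
    [3.0, 6.0],
]

averaged = allreduce_average(worker_grads)
# Every worker applies the same averaged gradient, so all replicas stay in sync.
new_weights = sgd_step([1.0, 1.0], averaged, lr=0.5)
print(averaged, new_weights)
```

Because each worker ends the step with identical weights, the quality of the result depends on every worker receiving its mini batch on time, which is why storage throughput matters for GPU utilization.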

Azure NetApp Files snapshot is integrated within the training code and captures the state of data and the trained model for every experiment. This capability enables you to track the version of data and code used, and the associated trained model generated.

Setting up Azure NetApp Files

As organizations continue to embrace the scalability and flexibility of cloud-based solutions, Azure NetApp Files (ANF) has emerged as a powerful managed file storage service in Azure. ANF provides enterprise-grade file shares that are highly performant and integrate seamlessly with existing applications and workflows.

In this section, we will delve into two crucial aspects of leveraging the full potential of Azure NetApp Files: the creation of a delegated subnet and the initial configuration tasks. By following these steps, organizations can optimize their ANF deployment, enabling efficient data management and enhanced collaboration.

Firstly, we will explore the process of creating a delegated subnet, which plays a pivotal role in establishing a secure and isolated network environment for ANF. This delegated subnet ensures that ANF resources are efficiently isolated from other network traffic, providing an additional layer of protection and control.

Subsequently, we will discuss the initial configuration tasks necessary to set up Azure NetApp Files effectively. This includes key considerations such as setting up a NetApp account, and provisioning an ANF capacity pool.

By following these steps, administrators can streamline the deployment process and ensure smooth integration with existing infrastructure.

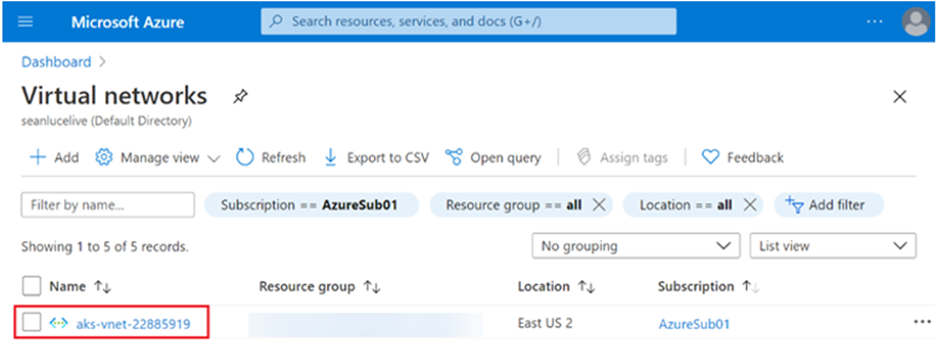

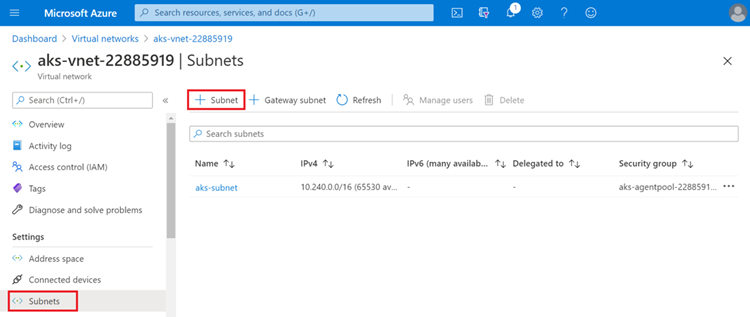

Create a delegated subnet for Azure NetApp Files

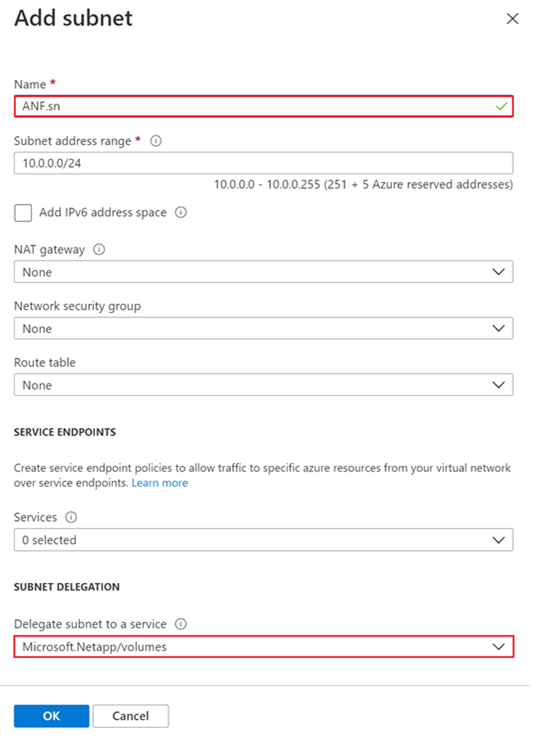

To create a delegated subnet for Azure NetApp Files, follow this series of steps:

- Navigate to Virtual networks within the Azure portal. Find your newly created virtual network. It should have a prefix such as aks-vnet, as seen here. Click the name of the virtual network.

- Click Subnets and select +Subnet from the top toolbar.

- Provide the subnet with a name such as ANF.sn and under the Subnet Delegation heading, select Microsoft.NetApp/volumes. Do not change anything else. Click OK.

Azure NetApp Files volumes are allocated to the application cluster and are consumed as persistent volume claims (PVCs) in Kubernetes. In turn, this allocation provides us the flexibility to map volumes to different services, be it Jupyter notebooks, serverless functions, and so on.

Users of services can consume storage from the platform in many ways. The main benefits of Azure NetApp Files are:

- Provides users with the ability to use snapshots.

- Enables users to store large quantities of data on Azure NetApp Files volumes.

- Delivers the performance benefits of Azure NetApp Files volumes when users run their models on large sets of files.

Azure NetApp Files configuration

To complete the setup of Azure NetApp Files, you must first configure it as described in Quickstart: Set up Azure NetApp Files and create an NFS volume.

However, you may omit the steps to create an NFS volume for Azure NetApp Files as you will create volumes through Astra Trident (see later in this article). Before continuing, be sure that you have:

- Registered for Azure NetApp Files and NetApp Resource Provider (through the Azure Cloud Shell).

- Created a NetApp account in Azure NetApp Files.

- Set up a capacity pool (Standard, Premium or Ultra – depending on your needs).

Setting up Azure Kubernetes Service

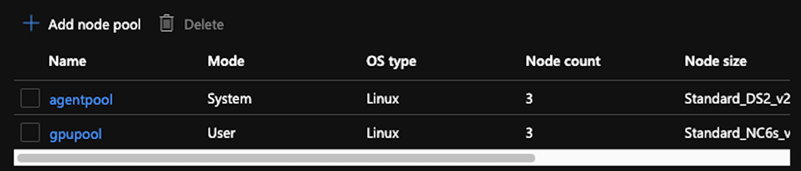

For setup and installation of the AKS cluster, go to Create an AKS Cluster. Then, follow this series of steps:

- When selecting the type of nodes (system (CPU) or worker (GPU) nodes), select the following:

- Add a primary system node pool named agentpool at the Standard_DS2_v2 size. Use the default three nodes.

- Add a worker node pool named gpupool with the Standard_NC6s_v3 size. Use a minimum of three nodes for the GPU pool.

(!) Note

Deployment takes 5-10 minutes


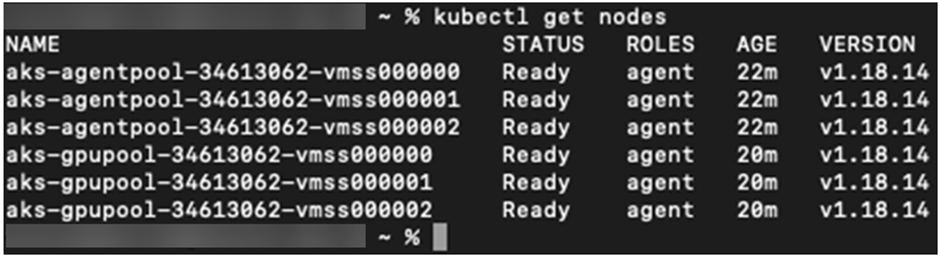

- After deployment is complete, click Connect to Cluster. To connect to the newly created AKS cluster, install the Kubernetes command-line tool from your local environment (laptop/PC). Visit Install Tools to install it as per your OS.

- To access the AKS cluster from the terminal, first enter az login and put in the credentials.

- Run the following two commands:

az account set --subscription xxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxxxx

az aks get-credentials --resource-group resourcegroup --name aksclustername

- Enter this command in your terminal:

kubectl get nodes

(i) Important

If all six nodes are up and running as seen here, your AKS cluster is ready and connected to your local environment.

Peering of AKS virtual network and Azure NetApp Files virtual network

Next, peer the AKS virtual network (VNet) with the Azure NetApp Files VNet by following these steps:

- In the search box at the top of the Azure portal, type virtual networks.

- Click the VNet aks-vnet-name, then enter Peerings in the search field.

- Click +Add and enter the information provided in the table below:

| Field | Value or description |
|---|---|
| Peering link name | aks-vnet-name_to_anf |
| SubscriptionID | Subscription of the Azure NetApp Files VNet to which you're peering |
| VNet peering partner | Azure NetApp Files VNet |

(i) Important

Leave all fields that are not marked with an asterisk at their default values.

- Click Add or OK to add the peering to the virtual network.

For more information, visit Create, change, or delete a virtual network peering.

Setting up Astra Trident

Astra Trident is an open-source project that NetApp maintains for application container persistent storage. Astra Trident has been implemented as an external provisioner controller that runs as a pod itself, monitoring volumes and completely automating the provisioning process.

Astra Trident enables smooth integration with K8s by creating and attaching persistent volumes for storing training datasets and trained models. This capability makes it easier for data scientists and data engineers to use K8s without the hassle of manually storing and managing datasets. Trident also eliminates the need for data scientists to learn how to manage new data platforms, because it integrates data management tasks through its API.

Install Astra Trident

To install Trident software, complete the following steps:

- First, install Helm.

- Download and extract the Trident 21.01.1 installer. For the latest version, see the Trident releases page on GitHub.

wget https://github.com/NetApp/trident/releases/download/v21.01.1/trident-installer-21.01.1.tar.gz

tar -xf trident-installer-21.01.1.tar.gz

- Change the directory to trident-installer.

cd trident-installer

- Copy tridentctl to a directory in your system $PATH.

cp ./tridentctl /usr/local/bin

- Install Trident on K8s cluster with Helm:

- Change directory to helm directory.

cd helm

- Install Trident.

helm install trident trident-operator-21.01.1.tgz --namespace trident --create-namespace

- Check the status of Trident pods the usual K8s way:

kubectl -n trident get pods

- If all the pods are up and running, Astra Trident is installed, and you are good to move forward.


Set up Azure NetApp Files back-end and storage class

To set up Azure NetApp Files back-end and storage class, complete the following steps:

- Switch back to the home directory.

cd ~

- Clone the project repository lane-detection-SCNN-horovod.

- Go to the trident-config directory.

cd ./lane-detection-SCNN-horovod/trident-config

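- Create an Azure service principal (the service principal is how Trident communicates with Azure to access your Azure NetApp Files resources). Replace the placeholder with a name of your choice; the example output below uses netapptrident.

az ad sp create-for-rbac --name <service-principal-name>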

The output should look like the following example:

{

"appId": "xxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx",

"displayName": "netapptrident",

"name": "http://netapptrident",

"password": "xxxxxxxxxxxxxxx.xxxxxxxxxxxxxx",

"tenant": "xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxx"

}

- Create the Trident backend JSON file.

- Using your preferred text editor, complete the following fields from the table below inside the anf-backend.json file.

| Field | Value |
|---|---|
| subscriptionID | Your Azure Subscription ID |
| tenantID | Your Azure Tenant ID (the tenant value from the output of az ad sp in the previous step) |
| clientID | Your appId (from the output of az ad sp in the previous step) |
| clientSecret | Your password (from the output of az ad sp in the previous step) |

The file should look like the following example:

{

"version": 1,

"storageDriverName": "azure-netapp-files",

"subscriptionID": "********-****-****-****-************",

"tenantID": "********-****-****-****-************",

"clientID": "********-****-****-****-************",

"clientSecret": "SECRET",

"location": "westeurope",

"serviceLevel": "Standard",

"virtualNetwork": "anf-vnet",

"subnet": "default",

"nfsMountOptions": "vers=3,proto=tcp",

"limitVolumeSize": "500Gi",

"defaults": {

"exportRule": "0.0.0.0/0",

"size": "200Gi"

}

}

- Instruct Trident to create the Azure NetApp Files back end in the trident namespace, using anf-backend.json as the configuration file as follows:

tridentctl create backend -f anf-backend.json -n trident

- Create the storage class:

- K8s users provision volumes by using PVCs that specify a storage class by name. Instruct K8s to create a storage class named azurenetappfiles that references the Azure NetApp Files back end created in the previous step, using the following command:

kubectl create -f anf-storage-class.yaml

- Check that the storage class was created by using the following command:

kubectl get sc azurenetappfiles

The output should look like the following example:

(i) Important

Before moving on to the next chapter, make sure to follow the instructions in Deploy and set up volume snapshot components on AKS.
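Invalid JSON in anf-backend.json (for example, a missing closing brace) prevents the back end from being created, so it can help to check the file before running tridentctl. The following is a minimal sketch, assuming the field names shown in the example file above. The list of required fields and the function name check_backend_config are assumptions made for illustration and are not part of Trident.

```python
import json
from typing import List

# Assumption: these are the fields this article asks you to fill in or keep.
REQUIRED_FIELDS = (
    "version", "storageDriverName", "subscriptionID", "tenantID",
    "clientID", "clientSecret", "location", "serviceLevel",
)


def check_backend_config(text: str) -> List[str]:
    """Return a list of problems found in an anf-backend.json document."""
    try:
        config = json.loads(text)
    except json.JSONDecodeError as err:
        return [f"not valid JSON: {err}"]
    if not isinstance(config, dict):
        return ["top-level value must be a JSON object"]
    problems = [f"missing field: {name}"
                for name in REQUIRED_FIELDS if name not in config]
    driver = config.get("storageDriverName")
    if driver is not None and driver != "azure-netapp-files":
        problems.append("storageDriverName should be azure-netapp-files")
    return problems


good = json.dumps({
    "version": 1, "storageDriverName": "azure-netapp-files",
    "subscriptionID": "sub", "tenantID": "tenant", "clientID": "client",
    "clientSecret": "SECRET", "location": "westeurope",
    "serviceLevel": "Standard",
})
truncated = good[:-1]  # simulate a missing closing brace

print(check_backend_config(good))
print(check_backend_config(truncated))
```

To check a real file, read its contents with open("anf-backend.json").read() and pass the text to check_backend_config.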

Installing and running Run:ai distributed lane detection training

To install Run:ai, complete the following steps:

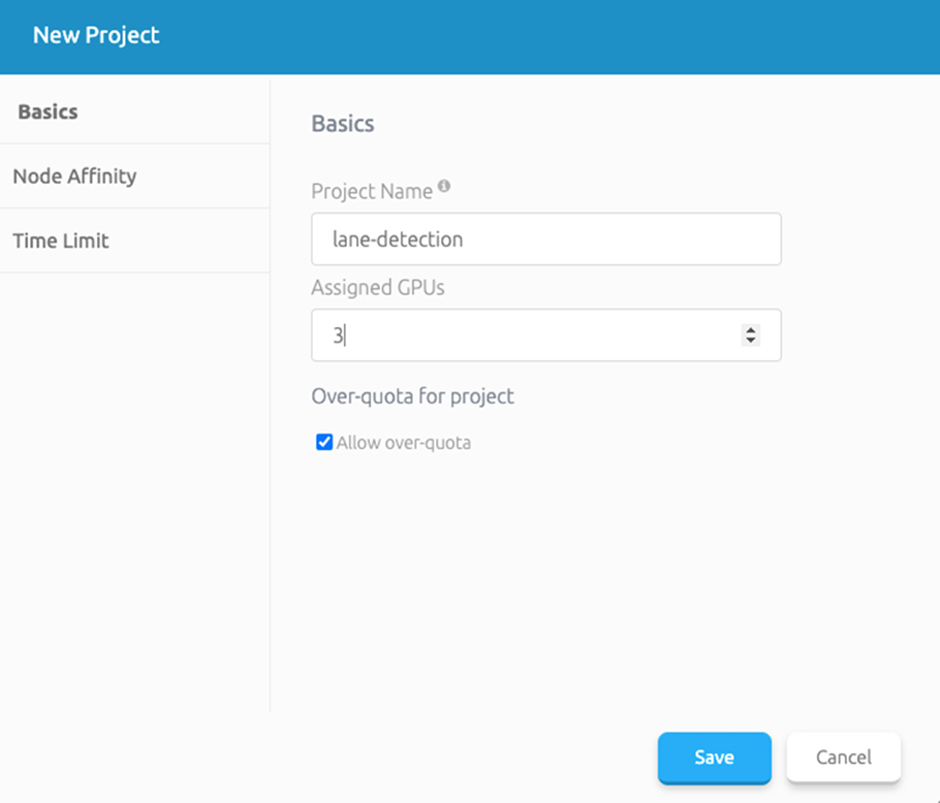

- Install Run:ai cluster on AKS.

- Go to app.runai.ai, click Create New Project, and name it lane-detection. This creates a namespace on the K8s cluster whose name starts with runai- followed by the project name. In this case, the namespace created is runai-lane-detection.

- Install Run:ai CLI.

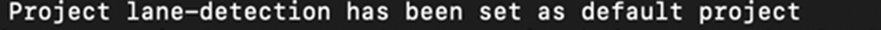

- On your terminal, set lane-detection as a default Run:ai project by using the following command:

`runai config project lane-detection`

The output should look like the following example:

- Create ClusterRole and ClusterRoleBinding for the project namespace (for example, lane-detection) so the default service account belonging to runai-lane-detection namespace has permission to perform volumesnapshot operations during job executions:

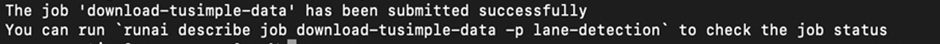

- List namespaces to check that runai-lane-detection exists by using this command:

kubectl get namespaces

The output should appear like the following example:


- Create ClusterRole netappsnapshot and ClusterRoleBinding netappsnapshot using the following commands:

`kubectl create -f runai-project-snap-role.yaml`

`kubectl create -f runai-project-snap-role-binding.yaml`

Download and process the TuSimple dataset as Run:ai job

The process to download and process the TuSimple dataset as a Run:ai job is optional. It involves the following steps:

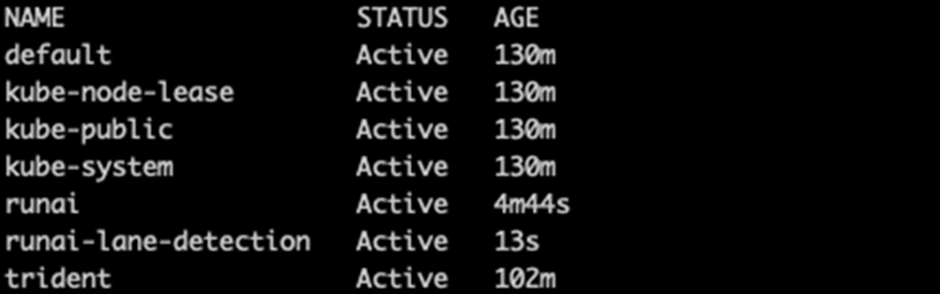

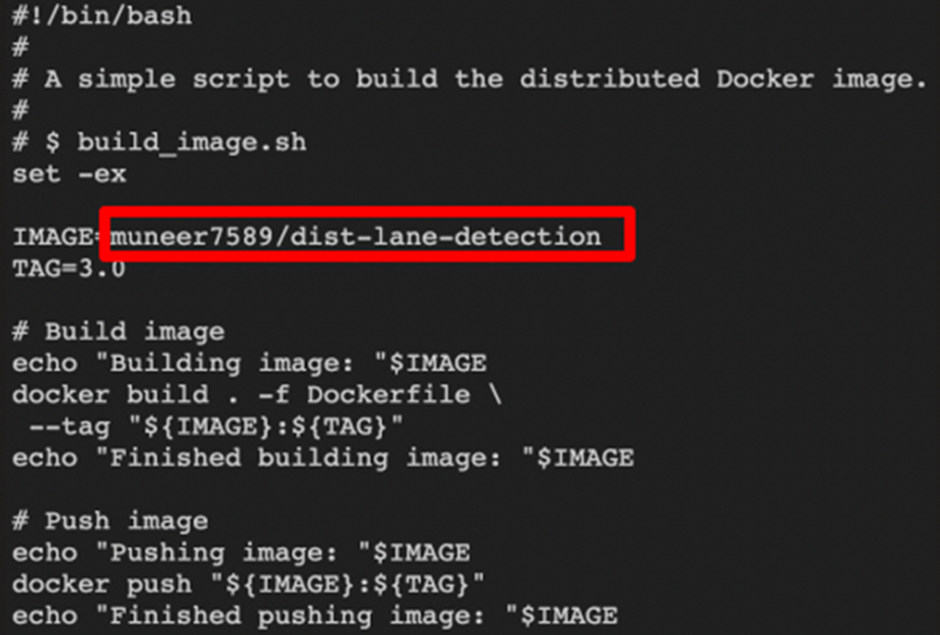

- Build and push the docker image, or omit this step if you want to use an existing docker image (for example, muneer7589/download-tusimple:1.0)

- Switch to the home directory:

cd ~

- Go to the data directory of the project lane-detection-SCNN-horovod:

cd ./lane-detection-SCNN-horovod/data

- Modify build_image.sh shell script and change docker repository to yours. For example, replace muneer7589 with your docker repository name. You could also change the docker image name and TAG (such as download-tusimple and 1.0):

- Run the script to build the docker image and push it to the docker repository using these commands:

chmod +x build_image.sh

./build_image.sh


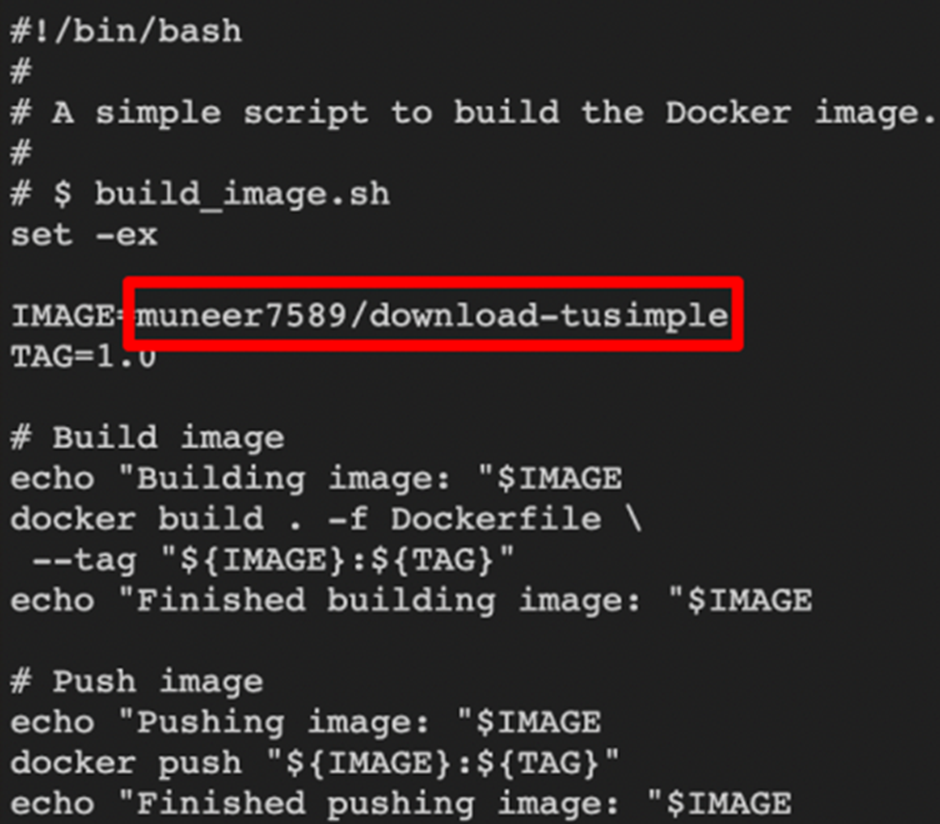

- Submit the Run:ai job to download, extract, pre-process, and store the TuSimple lane detection dataset in a PVC, which is dynamically created by Astra Trident:

- Use the following command to submit the Run:ai job:

runai submit \
--name download-tusimple-data \
--pvc azurenetappfiles:100Gi:/mnt \
--image muneer7589/download-tusimple:1.0

- The following table describes the fields used to submit the Run:ai job:

| Field | Value or description |
|---|---|
| --name | Name of the job |
| --pvc | PVC in the format [StorageClassName]:Size:ContainerMountPath. In the above job submission, you are creating a PVC on demand using Trident with the storage class azurenetappfiles. The persistent volume capacity is 100Gi and it is mounted at the path /mnt. |
| --image | Docker image to use when creating the container for this job |

The output should look like the following example:

- List the submitted Run:ai jobs.

runai list jobs

- Check the submitted job logs.

runai logs download-tusimple-data -t 10

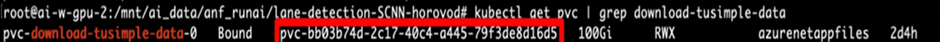

- List the PVC that was created. Use this PVC for training in the next step.

kubectl get pvc | grep download-tusimple-data

The output should look like the following example:

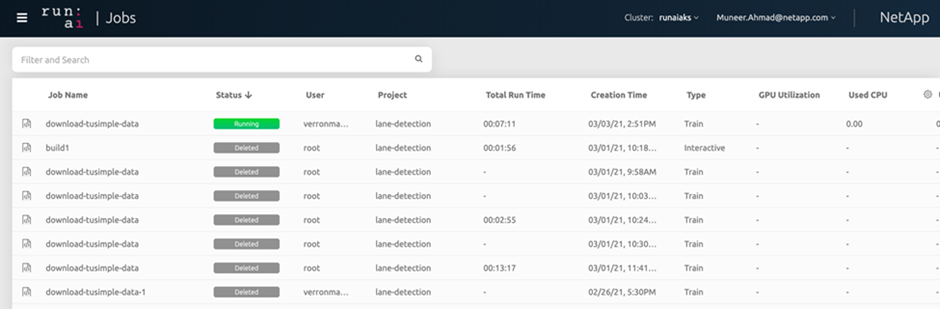

- Check the job in the Run:ai UI (or app.runai.ai).

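The three-part PVC format used in the job submission above, [StorageClassName]:Size:ContainerMountPath, can be illustrated with a short parsing sketch. This is not how the Run:ai CLI parses the argument; the PvcSpec type and parse_pvc_spec function are hypothetical names used only to show what each part means.

```python
from typing import NamedTuple


class PvcSpec(NamedTuple):
    storage_class: str
    size: str
    mount_path: str


def parse_pvc_spec(spec: str) -> PvcSpec:
    """Split a StorageClassName:Size:ContainerMountPath string into its parts."""
    parts = spec.split(":")
    if len(parts) != 3 or not all(parts):
        raise ValueError(f"expected StorageClassName:Size:ContainerMountPath, got {spec!r}")
    storage_class, size, mount_path = parts
    if not mount_path.startswith("/"):
        raise ValueError(f"mount path must be absolute, got {mount_path!r}")
    return PvcSpec(storage_class, size, mount_path)


spec = parse_pvc_spec("azurenetappfiles:100Gi:/mnt")
print(spec.storage_class, spec.size, spec.mount_path)
```

The sketch deliberately rejects the two-part form (existing PVC name and mount path) that the training job in the next section uses, because that form refers to a volume that already exists rather than one to be created.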

Perform distributed lane detection training using Horovod

Performing distributed lane detection training using Horovod is an optional process. However, here are the steps involved:

- Build and push the docker image, or skip this step if you want to use the existing docker image (for example, muneer7589/dist-lane-detection:3.1):

- Switch to home directory.

cd ~

- Go to the project directory lane-detection-SCNN-horovod.

cd ./lane-detection-SCNN-horovod

- Modify the build_image.sh shell script and change the docker repository to yours (for example, replace muneer7589 with your docker repository name). You could also change the docker image name and TAG (dist-lane-detection and 3.1, for example).

- Run the script to build the docker image and push to the docker repository.

chmod +x build_image.sh

./build_image.sh


- Submit the Run:ai job for carrying out distributed training (MPI):

- When the Run:ai submit command automatically creates the PVC in the previous step (for downloading data), the PVC only has ReadWriteOnce (RWO) access, which does not allow multiple pods or nodes to access the same PVC for distributed training. Use a Kubernetes patch to update the access mode to ReadWriteMany.

- First, get the volume name of the PVC by running the following command:

kubectl get pvc | grep download-tusimple-data

- Patch the volume and update access mode to ReadWriteMany (replace volume name with yours in the following command):

kubectl patch pv pvc-bb03b74d-2c17-40c4-a445-79f3de8d16d5 -p '{"spec":{"accessModes":["ReadWriteMany"]}}'

- Submit the Run:ai MPI job to execute the distributed training job, using information from the table below:

runai submit-mpi \
--name dist-lane-detection-training \
--large-shm \
--processes=3 \
--gpu 1 \
--pvc pvc-download-tusimple-data-0:/mnt \
--image muneer7589/dist-lane-detection:3.1 \
-e USE_WORKERS="true" \
-e NUM_WORKERS=4 \
-e BATCH_SIZE=33 \
-e USE_VAL="false" \
-e VAL_BATCH_SIZE=99 \
-e ENABLE_SNAPSHOT="true" \
-e PVC_NAME="pvc-download-tusimple-data-0"

| Field | Value or description |
|---|---|
| --name | Name of the distributed training job |
| --large-shm | Mount a large /dev/shm device. It is a shared file system mounted on RAM and provides large enough shared memory for multiple CPU workers to process and load batches into CPU RAM. |
| --processes | Number of distributed training processes |
| --gpu | Number of GPUs to allocate per process. In this job, there are three GPU worker processes (--processes=3), each allocated a single GPU (--gpu 1). |
| --pvc | Use the existing persistent volume claim (pvc-download-tusimple-data-0) created by the previous job (download-tusimple-data); it is mounted at the path /mnt |
| --image | Docker image to use when creating the container for this job |
| -e | Define environment variables to be set in the container |
| USE_WORKERS | Setting the argument to true turns on multi-process data loading |
| NUM_WORKERS | Number of data loader worker processes |
| BATCH_SIZE | Training batch size |
| USE_VAL | Setting the argument to true allows validation |
| VAL_BATCH_SIZE | Validation batch size |
| ENABLE_SNAPSHOT | Setting the argument to true enables taking data and trained model snapshots for ML versioning purposes |
| PVC_NAME | Name of the PVC to take a snapshot of. In the above job submission, you are taking a snapshot of pvc-download-tusimple-data-0, which contains the dataset and trained models. |

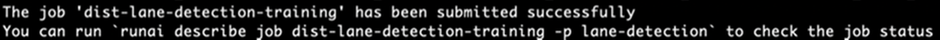

The output should look like the following example:

- List the submitted job.

runai list jobs

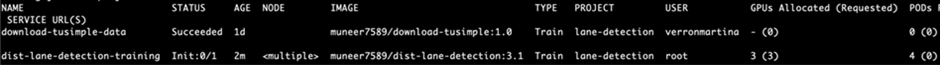

- Submitted job logs:

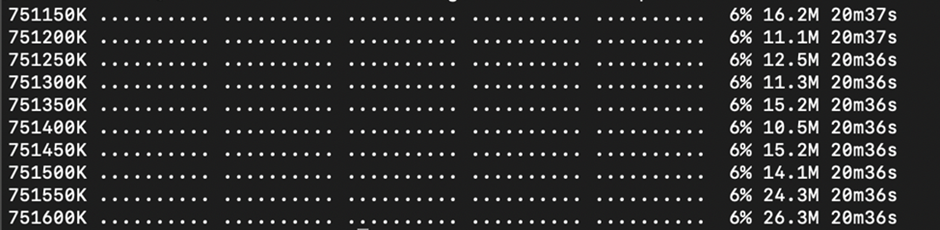

runai logs dist-lane-detection-training

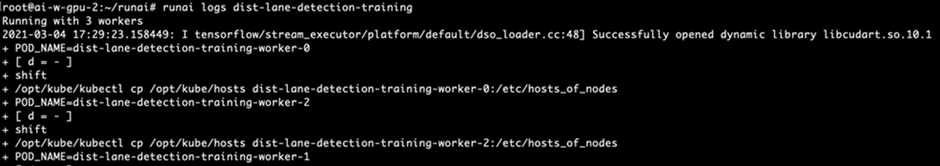

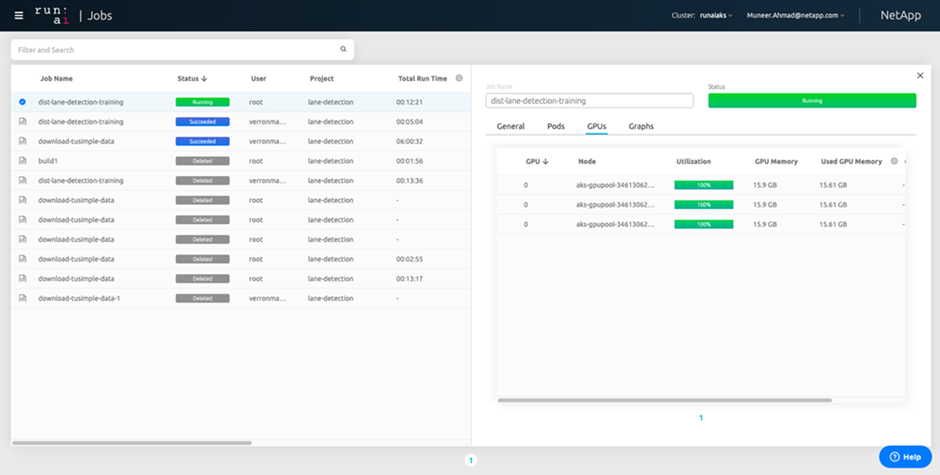

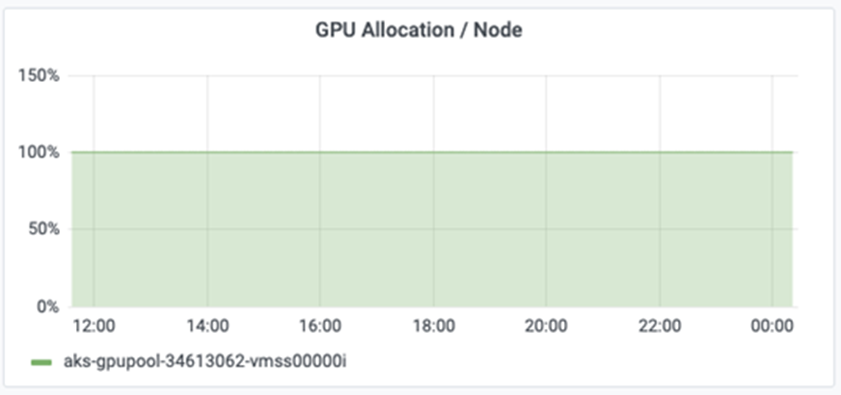

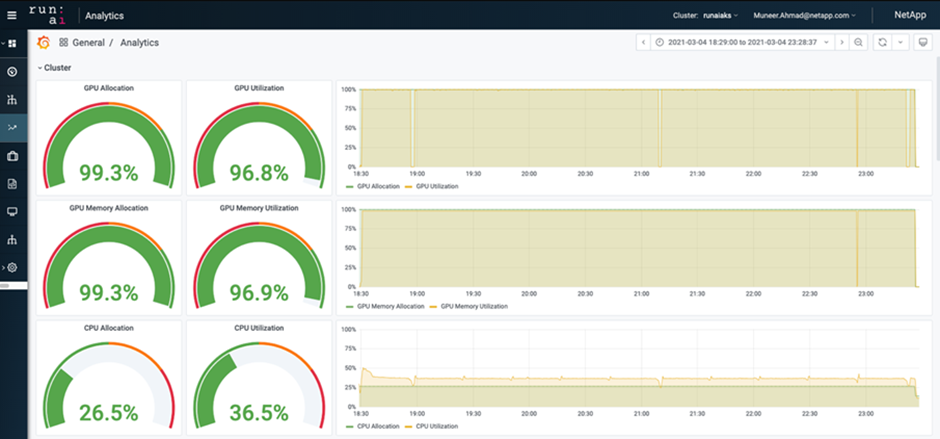

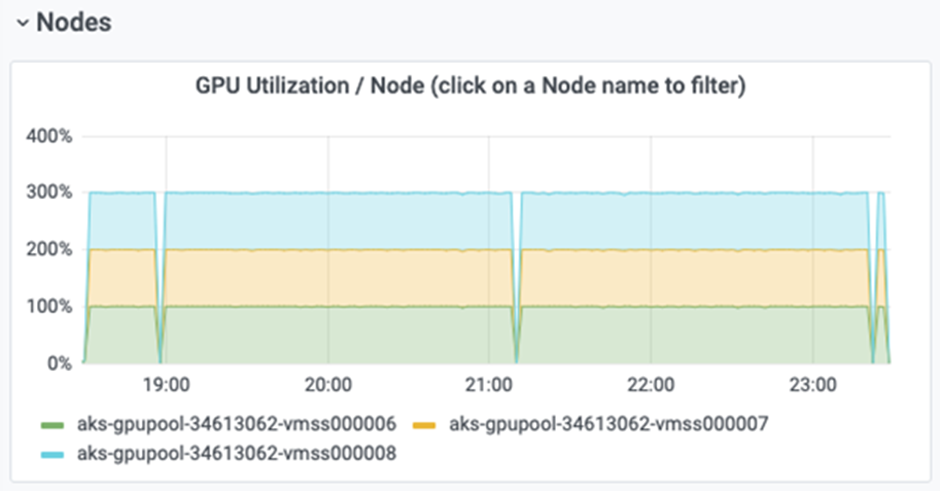

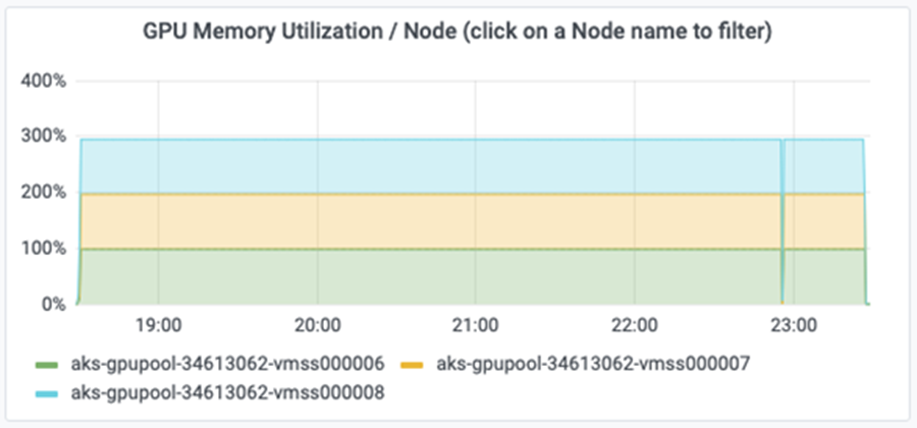

- Check the training job in the Run:ai GUI (app.runai.ai) on the Run:ai dashboard, as seen in the figures below. The first figure shows three GPUs allocated for the distributed training job, spread across three nodes on AKS, and the second shows the Run:ai jobs:

- After the training is finished, check the Azure NetApp Files snapshot that was created and linked with the Run:ai job.

runai logs dist-lane-detection-training --tail 1

kubectl get volumesnapshots | grep download-tusimple-data-0


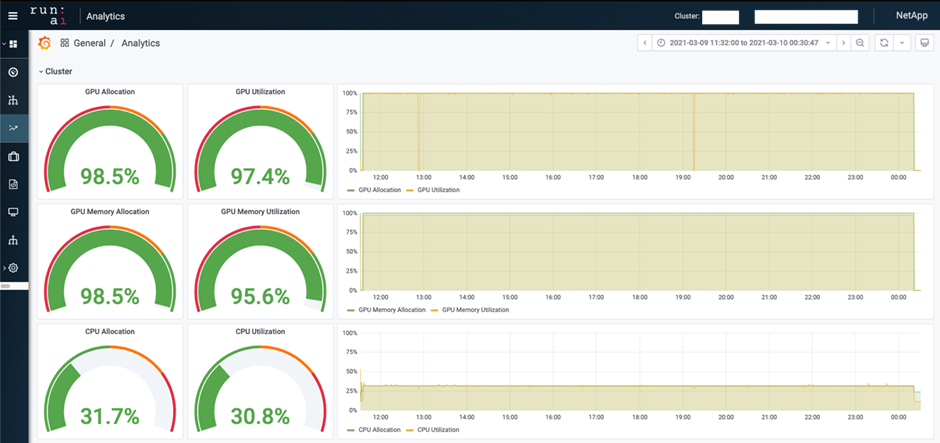

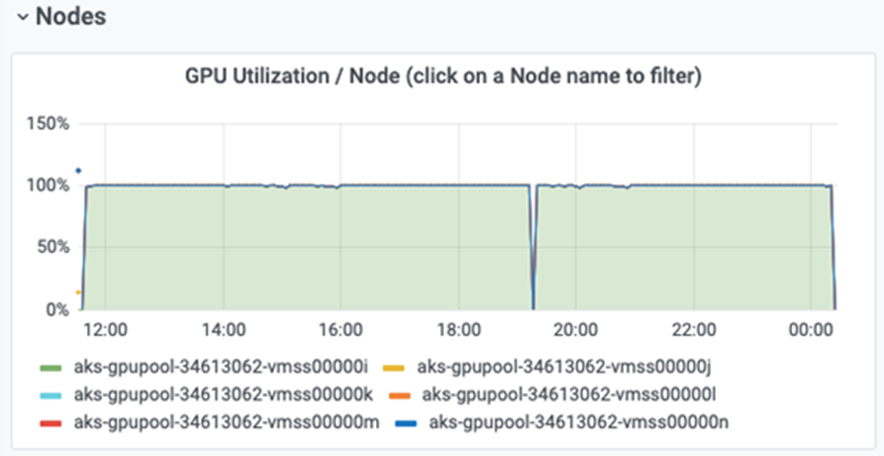

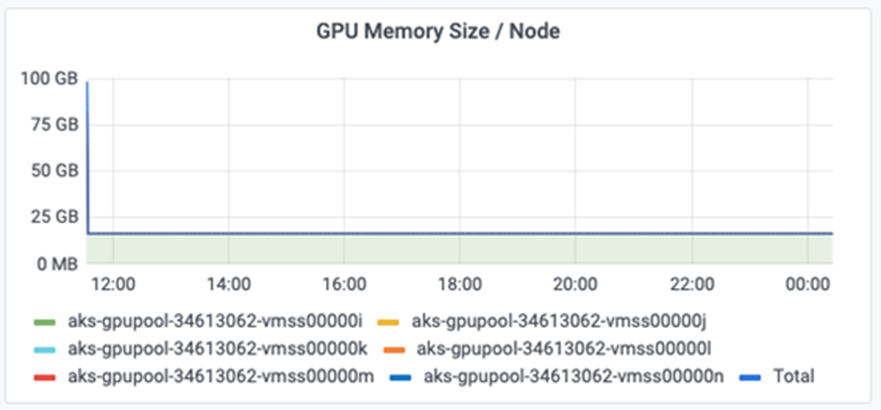
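When the training job is submitted repeatedly with different settings, assembling the command from structured values can reduce typing mistakes. The following is a hedged sketch that only builds and prints the argument list; it uses the flags and values from the job submission in this section, and the helper name build_submit_mpi is hypothetical.

```python
import shlex
from typing import Dict, List


def build_submit_mpi(name: str, image: str, pvc: str, mount_path: str,
                     processes: int, gpus_per_process: int,
                     env: Dict[str, str]) -> List[str]:
    """Assemble the runai submit-mpi argument list from structured inputs."""
    args = [
        "runai", "submit-mpi",
        "--name", name,
        "--large-shm",
        f"--processes={processes}",
        "--gpu", str(gpus_per_process),
        "--pvc", f"{pvc}:{mount_path}",
        "--image", image,
    ]
    # Each environment variable becomes its own "-e KEY=VALUE" pair.
    for key, value in env.items():
        args += ["-e", f"{key}={value}"]
    return args


command = build_submit_mpi(
    name="dist-lane-detection-training",
    image="muneer7589/dist-lane-detection:3.1",
    pvc="pvc-download-tusimple-data-0",
    mount_path="/mnt",
    processes=3,
    gpus_per_process=1,
    env={
        "USE_WORKERS": "true",
        "NUM_WORKERS": "4",
        "BATCH_SIZE": "33",
        "USE_VAL": "false",
        "VAL_BATCH_SIZE": "99",
        "ENABLE_SNAPSHOT": "true",
        "PVC_NAME": "pvc-download-tusimple-data-0",
    },
)
print(shlex.join(command))
```

The printed line can be pasted into a terminal, or the list can be passed to subprocess.run on a machine where the Run:ai CLI is installed and configured.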

Performance evaluation

To show the linear scalability of the solution, performance tests were run for two scenarios: one GPU and three GPUs. GPU allocation, GPU and memory utilization, and various single-node and three-node metrics were captured during training on the TuSimple lane detection dataset. The data was increased five-fold solely to analyze resource utilization during the training processes.

The solution enables customers to start with a small dataset and a few GPUs. When the amount of data and the demand of GPUs increase, customers can dynamically scale out the terabytes in the Standard Tier and quickly scale up to the Premium Tier to get four times the throughput per terabyte without moving any data. This process is further explained in the section, Azure NetApp Files service levels.

Processing time on one GPU was 12 hours and 45 minutes. Processing time on three GPUs across three nodes was approximately 4 hours and 30 minutes.
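The scaling behavior implied by these two timings can be worked out directly. The sketch below uses only the processing times reported above; the terms speedup and scaling efficiency follow their usual definitions (single-GPU time divided by multi-GPU time, and speedup divided by the number of GPUs).

```python
def to_minutes(hours: int, minutes: int) -> int:
    """Convert an hours/minutes duration to minutes."""
    return hours * 60 + minutes


single_gpu = to_minutes(12, 45)   # one GPU
three_gpus = to_minutes(4, 30)    # three GPUs across three nodes (approximate)

speedup = single_gpu / three_gpus
efficiency = speedup / 3          # fraction of ideal linear scaling

print(f"speedup: {speedup:.2f}x, scaling efficiency: {efficiency:.1%}")
```

With the reported times, the speedup is roughly 2.8x on three GPUs, which corresponds to a scaling efficiency of about 94% relative to ideal linear scaling.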

The figures shown throughout the remainder of this document illustrate examples of performance and scalability based on individual business needs.

The figure below illustrates 1 GPU allocation and memory utilization.

The figure below illustrates single node GPU utilization.

The figure below illustrates single node memory size (16GB).

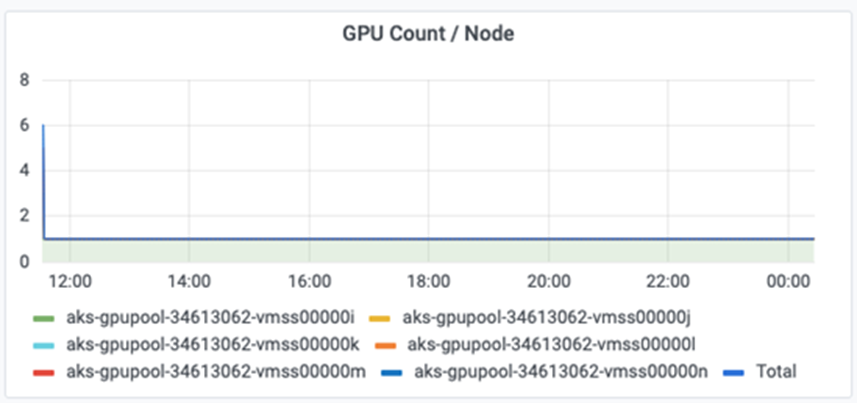

The figure below illustrates single node GPU count (1).

The figure below illustrates single node GPU allocation (%).

The figure below illustrates three GPUs across three nodes – GPUs allocation and memory.

The figure below illustrates three GPUs across three nodes utilization (%).

The figure below illustrates three GPUs across three nodes memory utilization (%).

Azure NetApp Files service levels

You can change the service level of an existing volume by moving the volume to another capacity pool that uses the service level you want for the volume. Changing the service level of an existing volume does not require that you migrate data. It also does not affect access to the volume.
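To give a sense of what a service level change means for throughput, the sketch below uses the published Azure NetApp Files throughput limits per provisioned TiB (16 MiB/s for Standard, 64 MiB/s for Premium, and 128 MiB/s for Ultra). It assumes throughput scales with the volume quota, as it does for capacity pools with automatic QoS; the function name and the 2 TiB example size are illustrative.

```python
# Throughput limit in MiB/s per TiB of provisioned volume quota.
THROUGHPUT_MIBPS_PER_TIB = {"Standard": 16, "Premium": 64, "Ultra": 128}


def volume_throughput(service_level: str, quota_tib: float) -> float:
    """Return the throughput limit (MiB/s) for a volume of the given quota."""
    return THROUGHPUT_MIBPS_PER_TIB[service_level] * quota_tib


for level in THROUGHPUT_MIBPS_PER_TIB:
    print(f"{level}: {volume_throughput(level, 2):.0f} MiB/s for a 2 TiB volume")
```

Moving a volume from a Standard pool to a Premium pool therefore quadruples its throughput limit at the same quota, without moving any data.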

Dynamically change the service level of a volume

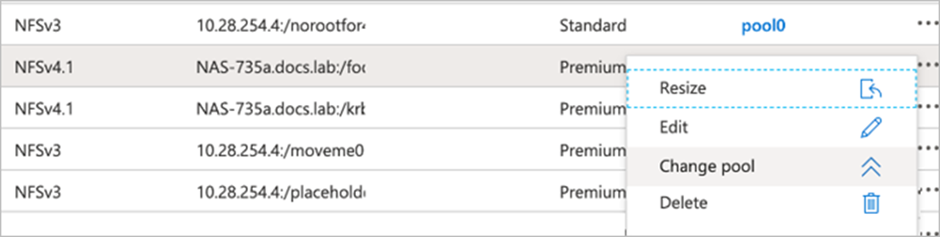

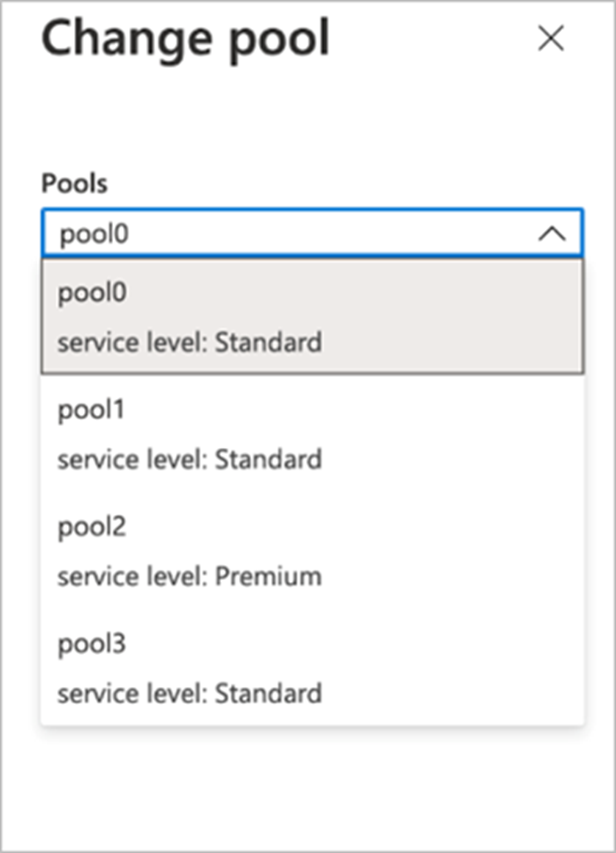

To change the service level of a volume, use the following steps:

- On the Volumes page, right-click the volume whose service level you want to change. Select Change Pool.

- In the Change Pool window, select the capacity pool you want to move the volume to. Then, click OK.

Automate service level change

- You can also use the following Azure CLI command. For more information about changing the pool of an Azure NetApp Files volume, visit az netappfiles volume: Manage Azure NetApp Files (ANF) volume resources.

az netappfiles volume pool-change -g mygroup \
--account-name myaccname \
--pool-name mypoolname \
--name myvolname \
--new-pool-resource-id mynewresourceid

- The Set-AzNetAppFilesVolumePool cmdlet shown here changes the pool of an Azure NetApp Files volume. More information about changing the volume pool with Azure PowerShell can be found by visiting Change pool for an Azure NetApp Files volume.

Set-AzNetAppFilesVolumePool `
-ResourceGroupName "MyRG" `
-AccountName "MyAnfAccount" `
-PoolName "MyAnfPool" `
-Name "MyAnfVolume" `
-NewPoolResourceId 7d6e4069-6c78-6c61-7bf6-c60968e45fbf

Leveraging Azure NetApp Files snapshot data protection

Deploy and set up volume snapshot components on AKS

If your cluster does not come pre-installed with the correct volume snapshot components, you may manually install these components by running the following steps:

(i) Important

AKS 1.18.14 does not have a pre-installed Snapshot Controller.

- Install Snapshot Beta CRDs by using the following commands:

kubectl create -f https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/release-3.0/client/config/crd/snapshot.storage.k8s.io_volumesnapshotclasses.yaml

kubectl create -f https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/release-3.0/client/config/crd/snapshot.storage.k8s.io_volumesnapshotcontents.yaml

kubectl create -f https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/release-3.0/client/config/crd/snapshot.storage.k8s.io_volumesnapshots.yaml

- Install Snapshot Controller by using the following documents from GitHub:

kubectl apply -f https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/release-3.0/deploy/kubernetes/snapshot-controller/rbac-snapshot-controller.yaml

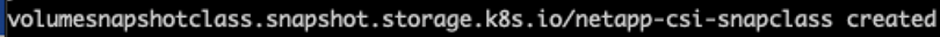

kubectl apply -f https://raw.githubusercontent.com/kubernetes-csi/external-snapshotter/release-3.0/deploy/kubernetes/snapshot-controller/setup-snapshot-controller.yaml

- Set up the K8s volumesnapshotclass: Before you create a volume snapshot, a volume snapshot class must be set up. Create a volume snapshot class for Azure NetApp Files, and use it to achieve ML versioning by using NetApp Snapshot technology. Create the volumesnapshotclass netapp-csi-snapclass and set it as the default `volumesnapshotclass` as follows:

kubectl create -f netapp-volume-snapshot-class.yaml

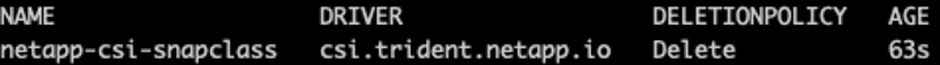

The output should look like the following example:

- Check that the volume snapshot class was created by using the following command:

kubectl get volumesnapshotclass

The output should look like the following example:
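The contents of netapp-volume-snapshot-class.yaml are not reproduced in the text above. The following is a minimal sketch of what such a manifest might contain, assuming the beta snapshot API installed by the release-3.0 CRDs and the Astra Trident CSI driver name csi.trident.netapp.io. The deletionPolicy value and the output file name are illustrative assumptions, not taken from the article.

```shell
#!/bin/sh
# Write an illustrative VolumeSnapshotClass manifest to a separate example
# file so that an existing netapp-volume-snapshot-class.yaml is not overwritten.
cat > netapp-volume-snapshot-class.example.yaml <<'EOF'
apiVersion: snapshot.storage.k8s.io/v1beta1   # beta API, matching the release-3.0 CRDs
kind: VolumeSnapshotClass
metadata:
  name: netapp-csi-snapclass
  annotations:
    # Marks this class as the default volumesnapshotclass.
    snapshot.storage.kubernetes.io/is-default-class: "true"
driver: csi.trident.netapp.io                 # assumption: Astra Trident CSI driver
deletionPolicy: Delete                        # assumption: remove the snapshot with its object
EOF

# To apply it (not executed here):
# kubectl create -f netapp-volume-snapshot-class.example.yaml
```

The annotation is what makes the class the default, so snapshots created without an explicit class name would use it.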

Restore data from an Azure NetApp Files snapshot

To restore data from an Azure NetApp Files snapshot, complete the following steps:

- Switch to your home directory.

cd ~

- Go to the project directory lane-detection-SCNN-horovod.

cd ./lane-detection-SCNN-horovod

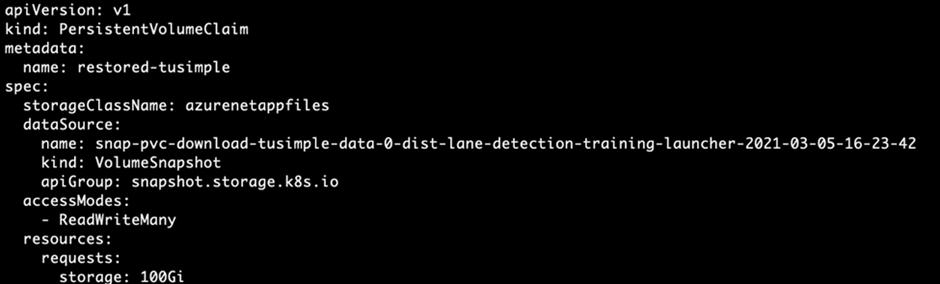

- Modify restore-snapshot-pvc.yaml and update the dataSource name field to the snapshot from which you want to restore data. You can also change the name of the PVC to which the data will be restored; in this example, it is restored-tusimple.

- Create a new PVC by using restore-snapshot-pvc.yaml.

kubectl create -f restore-snapshot-pvc.yaml

The output should look like the following example:

- To use the restored data for training, submit the job as before, replacing PVC_NAME with the name of the restored PVC, as shown in the following command:

runai submit-mpi \
  --name dist-lane-detection-training \
  --large-shm \
  --processes=3 \
  --gpu 1 \
  --pvc restored-tusimple:/mnt \
  --image muneer7589/dist-lane-detection:3.1 \
  -e USE_WORKERS="true" \
  -e NUM_WORKERS=4 \
  -e BATCH_SIZE=33 \
  -e USE_VAL="false" \
  -e VAL_BATCH_SIZE=99 \
  -e ENABLE_SNAPSHOT="true" \
  -e PVC_NAME="restored-tusimple"
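The restore manifest edited in the steps above is not shown in the text. The following is a minimal sketch of a PVC that restores from a volume snapshot, written to a separate example file. The snapshot name my-tusimple-snapshot, the storage class name azurenetappfiles, and the requested size are hypothetical placeholders; replace them with the values from your own cluster.

```shell
#!/bin/sh
# Write an illustrative restore manifest to a separate example file so that
# the project's own restore-snapshot-pvc.yaml is not overwritten.
cat > restore-snapshot-pvc.example.yaml <<'EOF'
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: restored-tusimple              # PVC name used when submitting the training job
spec:
  storageClassName: azurenetappfiles   # placeholder: your Azure NetApp Files storage class
  dataSource:
    name: my-tusimple-snapshot         # placeholder: the snapshot to restore from
    kind: VolumeSnapshot
    apiGroup: snapshot.storage.k8s.io
  accessModes:
    - ReadWriteMany                    # assumption: volume shared by several training pods
  resources:
    requests:
      storage: 100Gi                   # placeholder: at least the size of the source volume
EOF

# To create the PVC and check that it becomes Bound (not executed here):
# kubectl create -f restore-snapshot-pvc.example.yaml
# kubectl get pvc restored-tusimple
```

The metadata name is the value that the --pvc flag and the PVC_NAME variable in the training command refer to, so the two must match.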

Conclusion

Microsoft, NetApp, and Run:ai partnered on this article to demonstrate the capabilities of Azure NetApp Files together with the Run:ai platform for simplifying the orchestration of AI workloads. The article provides a reference architecture that streamlines both data pipelines and workload orchestration for distributed machine learning training for lane detection, while making full use of NVIDIA GPUs.

For distributed training at scale, especially in a public cloud environment, resource orchestration and storage are critical parts of the solution. Ensuring that data management never stalls multi-GPU processing results in optimal utilization of GPU cycles, which in turn makes the system as cost-effective as possible for large-scale distributed training.

The data fabric delivered by NetApp addresses this challenge by enabling data scientists and data engineers to connect on-premises and cloud environments and keep data synchronized without manual intervention. In other words, a data fabric smooths the process of managing AI workflows spread across multiple locations. It also provides on-demand data availability by bringing data close to compute, so that analysis, training, and validation can run wherever and whenever needed. This capability enables not only data integration but also protection and security of the entire data pipeline.

Additional Information

- Deep Learning Network Architecture: Spatial Convolutional Neural Network

https://arxiv.org/abs/1712.06080

- Distributed deep learning training framework: Horovod

https://horovod.ai/

- Run:ai container orchestration solution: Run:ai product introduction

https://docs.run.ai/home/components/

- Run:ai installation documentation

https://docs.run.ai/Administrator/Cluster-Setup/cluster-install/#step-3-install-runai

https://docs.run.ai/Administrator/Researcher-Setup/cli-install/#runai-cli-installation

- Submitting jobs in Run:ai CLI

https://docs.run.ai/Researcher/cli-reference/runai-submit/

https://docs.run.ai/Researcher/cli-reference/runai-submit-mpi/

- Azure Cloud resources: Azure NetApp Files

https://learn.microsoft.com/azure/azure-netapp-files/

- Azure Kubernetes Service

https://azure.microsoft.com/services/kubernetes-service/-features

- Azure VM with GPU SKUs

https://learn.microsoft.com/azure/virtual-machines/sizes-gpu

- Astra Trident

https://github.com/NetApp/trident/releases

- Data Fabric powered by NetApp

https://www.netapp.com/data-fabric/what-is-data-fabric/

- NetApp Product Documentation

https://www.netapp.com/support-and-training/documentation/
