Understand Power BI Semantic Model Size | Memory Error Fixes (Part 1)

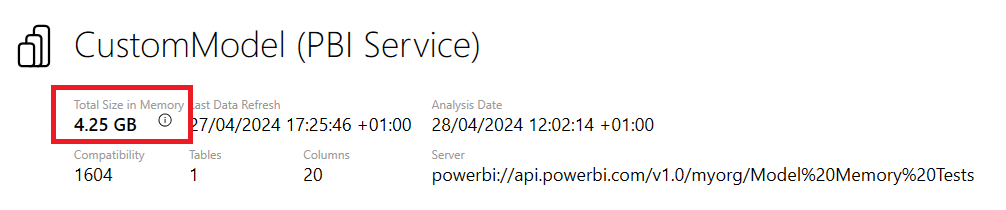

If you've ever encountered the "We cannot complete the requested operation because there isn't enough memory" error in Power BI, then this article is for you. Here, you'll get a better understanding of this error and how it relates to the size of your semantic model.

You might already know that semantic models in Power BI are limited in the amount of memory they can use. This limit applies to all types of semantic models, including Import, Direct Lake, and DirectQuery. However, you may not need to worry about this limit as much when working in DirectQuery mode.

In the Power BI service, the amount of memory available to your semantic model depends mainly on where the model is hosted: whether its workspace is on shared (Pro) capacity or on a Premium or Fabric capacity and, in the latter case, on the size (SKU) of that capacity. In this article, you'll learn how to monitor and manage your semantic model's memory usage to prevent the error from happening again.

To keep your semantic model performing well, you need to understand how it uses memory. With this in mind, this tutorial explores the details of semantic model memory usage in Power BI.

Continue reading Power BI Semantic Model Memory Errors, Part 1: Model Size
