Use ABAC in RBAC roles in Azure Storage to perform upload/download blobs & restrict delete blobs

Disclaimer: Please test this solution before implementing it for your critical data.

Scenario:

This article explains, step by step, how to meet the following requirements in Azure Storage using built-in RBAC roles:

- Users should be allowed to read/download and write/upload containers and blobs.

- Delete operations should be restricted.

Pre-Requisites:

- An Azure Storage general-purpose v2 (GPv2) or ADLS Gen2 storage account

- Sufficient permissions to assign roles to users (Microsoft.Authorization/roleAssignments/write), such as those granted by the Owner or User Access Administrator role

Action:

Follow the steps below to create a Storage Blob Data Contributor role assignment with conditions using the Azure portal:

Step 1:

- Sign in to the Azure portal with your credentials.

- Go to the storage account that you would like the role to be scoped to.

- Select Access Control (IAM) -> Add -> Add role assignment:

Step 2:

- In Assignment type, select Job function roles and proceed to Role.

- On the Roles tab, select (or search for) Storage Blob Data Contributor and click Next.

- On the Members tab, select User, group, or service principal to assign the selected role to one or more Azure AD users, groups, or service principals.

- Click Select members.

- Find and select the users, groups, or service principals.

- You can type in the Select box to search the directory for display name or email address.

- Please continue with Step 3 to configure conditions.

Step 3:

The Storage Blob Data Contributor role provides access to read, write, and delete blobs, so we need to add a condition that restricts the delete operation.

- On the Conditions (optional) tab, click Add condition. The Add role assignment condition page appears:

- In the Add action section, click Add action.

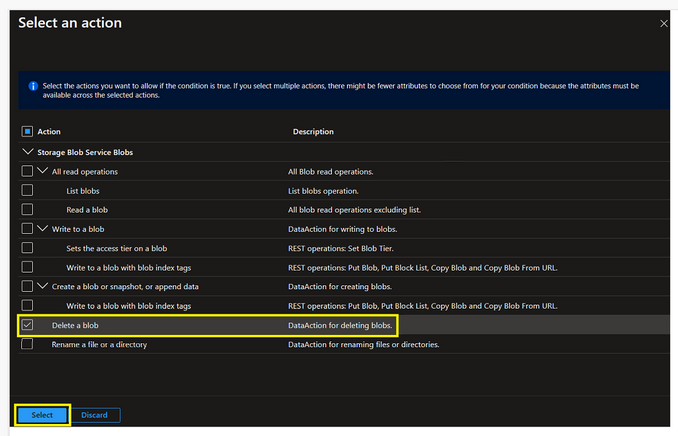

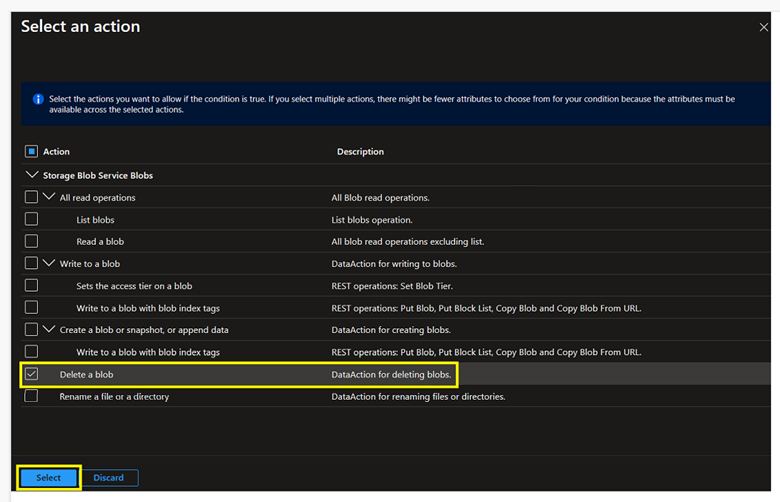

- The Select an action pane appears. This pane is a filtered list of data actions based on the role assignment that will be the target of your condition. Check the box next to Delete a blob, then click Select:

- In the Build expression section, click Add expression. The Expression section expands.

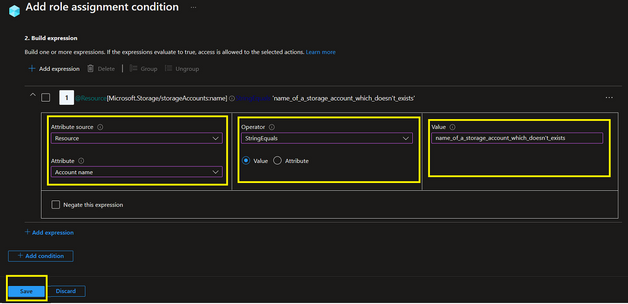

- Specify the following expression settings:

| Setting | Value |
| --- | --- |
| Attribute source | Resource |
| Attribute | Account Name |
| Operator | StringEquals |
| Value | name_of_a_storage_account_which_doesn't_exists |

Important:

- The expression settings are the crucial part of this configuration, and they can be set in many ways. In this scenario, the values above were chosen so that the expression always evaluates to false, which means access to the selected action (deleting a blob) is never allowed. The Value given for Account Name is a made-up storage account name that does not exist, so the expression fails for the real account and the delete blob operation is blocked for the selected member.

- You can configure the expression differently, as long as it can never evaluate to true.

- On the Review + assign tab, click Review + assign to assign the role with the condition.

- After a few moments, the security principal is assigned the role.
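For readers who prefer to see the condition as text, the sketch below builds the condition string that corresponds to the portal settings above, plus an argument list for `az role assignment create`. It is a minimal illustration, not the only way to do this: the helper names, the placeholder account name, and the assignee and scope values are assumptions made for the example.

```python
# Data action targeted by the condition ("Delete a blob" in the portal).
DELETE_BLOB_ACTION = (
    "Microsoft.Storage/storageAccounts/blobServices/containers/blobs/delete"
)


def build_delete_block_condition(placeholder_account: str) -> str:
    """Build an ABAC condition that permits blob deletes only when the
    storage account name equals `placeholder_account`.

    Passing a name that no real account can have makes the second clause
    false everywhere, so deletes are denied while other actions pass
    through the first clause.
    """
    return (
        "("
        f"(!(ActionMatches{{'{DELETE_BLOB_ACTION}'}}))"
        " OR "
        "(@Resource[Microsoft.Storage/storageAccounts:name] "
        f"StringEquals '{placeholder_account}')"
        ")"
    )


def build_az_command(assignee: str, scope: str, condition: str) -> list[str]:
    """Return the Azure CLI arguments for a conditional role assignment.

    `assignee` and `scope` are supplied by the caller; nothing is executed here.
    """
    return [
        "az", "role", "assignment", "create",
        "--role", "Storage Blob Data Contributor",
        "--assignee", assignee,
        "--scope", scope,
        "--condition", condition,
        "--condition-version", "2.0",
    ]


if __name__ == "__main__":
    # Hypothetical placeholder: storage account names cannot contain
    # underscores, so this value can never match a real account.
    condition = build_delete_block_condition(
        "name_of_a_storage_account_that_does_not_exist"
    )
    print(condition)
```

The `!(ActionMatches{...}) OR (expression)` shape is what makes the condition apply to the delete action only: every other data action satisfies the first clause and is allowed, while a delete is allowed only if the expression is true, which it never is here.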

Please Note

Along with the above permission, I have given the user Reader permission at the storage account level. You could give the Reader permission at the resource level/resource group level/subscription level too.

Permissions granted to a user fall into two categories: the management plane and the data plane.

- The management plane covers operations on the storage account itself, such as listing the storage accounts in a subscription, retrieving the storage account keys, or regenerating them.

- The data plane covers access to read, write, or delete the data stored inside the containers.

- For more info, please refer to : https://docs.microsoft.com/en-us/azure/role-based-access-control/role-definitions#management-and-dat...

- To learn about the built-in roles available for Azure resources, please refer to: https://docs.microsoft.com/en-us/azure/role-based-access-control/built-in-roles

Hence, it is important to grant at least the ‘Reader’ role at the management plane level in order to test this in the Azure portal.

Step 4:

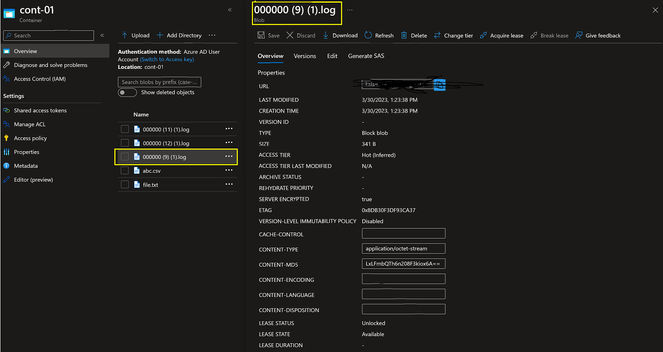

Test the condition (Ensure that the authentication method is set to Azure AD User Account and not Access key)

- Uploading blobs to the blob storage container succeeds.

- Downloading/reading blobs succeeds.

- Deleting blobs from the blob storage container fails, as shown in the screenshot.
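The same three checks can also be scripted instead of clicking through the portal. The sketch below assumes the `azure-storage-blob` and `azure-identity` packages are installed and that the signed-in identity is the one that received the conditional role assignment. The helper names, the probe blob name, and the account URL are hypothetical.

```python
def make_container_client(account_url: str, container_name: str):
    """Create a ContainerClient that authenticates with Azure AD, not an access key."""
    # Imported lazily so the probe below can be exercised without the SDK installed.
    from azure.identity import DefaultAzureCredential
    from azure.storage.blob import BlobServiceClient

    service = BlobServiceClient(account_url, credential=DefaultAzureCredential())
    return service.get_container_client(container_name)


def probe_blob_permissions(container_client, blob_name="abac-probe.txt", data=b"probe"):
    """Try upload, download, and delete in order and report what happened.

    Returns a dict such as {"upload": "allowed", ..., "delete": "failed (<error type>)"}.
    Any exception is recorded rather than raised, so inspect the error type
    to tell an authorization failure apart from, say, a network problem.
    """
    operations = {
        "upload": lambda: container_client.upload_blob(blob_name, data, overwrite=True),
        "download": lambda: container_client.download_blob(blob_name).readall(),
        "delete": lambda: container_client.delete_blob(blob_name),
    }
    results = {}
    for name, operation in operations.items():
        try:
            operation()
            results[name] = "allowed"
        except Exception as exc:  # broad on purpose: this is only a probe
            results[name] = f"failed ({type(exc).__name__})"
    return results
```

A possible call would be `probe_blob_permissions(make_container_client("https://<account>.blob.core.windows.net", "<container>"))`. With the condition in place, the expectation is that upload and download are reported as allowed and delete as failed with an authorization error from the service.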

Note: The above solution only protects against deletion of blobs in a container; it does not protect against deletion of the containers themselves.

If you would like to restrict container deletion as well, you can explore options such as acquiring a lease on the container or containers in addition to the above implementation.

To acquire a lease on a container, please refer to Lease Container (REST API) - Azure Storage | Microsoft Learn and Manage blob containers using the Azure portal - Azure Storage | Microsoft Learn.
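As a rough illustration of the lease option, the sketch below acquires an infinite lease through the `azure-storage-blob` Python SDK. It assumes `container_client` is a `ContainerClient` whose identity is permitted to lease the container. The function name is hypothetical.

```python
def lock_container_with_lease(container_client) -> str:
    """Acquire an infinite lease on the container and return the lease ID.

    While the lease is active, delete requests that do not supply this
    lease ID are rejected by the service.
    """
    # lease_duration=-1 requests a lease that never expires.
    lease = container_client.acquire_lease(lease_duration=-1)
    return lease.id
```

Keep the returned lease ID somewhere safe: it is needed later to release or break the lease, or to delete the container deliberately.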

Conclusion:

You can also use ABAC conditions to allow or restrict other data actions, depending on your use case.

Related documentation:

- You can also refer to the technical blog Custom RBAC role in Azure Storage to perform upload / download operation & restrict delete operation - Microsoft Community Hub, which covers using a custom role at the resource group, subscription, or management group level to allow read and write operations while restricting delete operations.

- What is Azure attribute-based access control (Azure ABAC)? | Microsoft Learn

- Azure built-in roles - Azure RBAC | Microsoft Learn

- Tutorial: Add a role assignment condition to restrict access to blobs using the Azure portal - Azure ABAC - Azure Storage | Microsoft Learn

Hope this helps!
