Leveraging Knowledge Graphs with Azure OpenAI

Introduction

In many industries, information is scattered across multiple documents, or across many pages of a single large document, and combining information across documents and sources is essential for day-to-day operations. In fields such as legal, insurance, and medical, much of this information takes the form of named entities (e.g., address, phone number, policy name) that are linked to each other through "relationships". Manually identifying entities and relationships across thousands of documents is costly and time-intensive. In this document, we walk through automating named entity recognition and relationship identification with knowledge graphs.

Knowledge Graphs structure and organize information according to a schema called an ontology. A knowledge graph represents a network of entities (objects, places, events, or concepts) and the relationships between them. This is powerful because it lets you combine data from multiple unstructured and structured sources, as long as the data is tied to the same entities or relationships.

Knowledge Graphs offer several benefits that make them attractive to enterprises. Some of the key benefits are listed below:

- Combine data sources that may previously have been siloed.

- Combine structured and unstructured data.

- Summarize relationships efficiently.

- Gather effective insights.

- Visualize relationships and flow of information across multiple sources.

By moving beyond traditional databases, knowledge graphs can help enterprises use the power of Large Multimodal Models (LMMs), Natural Language Processing (NLP), and other tools to get more value from their data. Using knowledge graphs to store information and answer questions lets us pack the most relevant facts from multiple documents into a concise format, making efficient use of the model's limited context window.

GPT models can help transform unstructured data into structured knowledge graphs whose relationships can be queried later. This notebook shows how to enhance knowledge graph tasks with LLMs. We will:

- Show how to use Azure OpenAI (AOAI) GPT-4 models to perform Named Entity Recognition (NER) on a corpus of documents, extracting the entities and relationships used to build the knowledge graph.

- Use Natural Language to Code (NL-to-Code) prompt engineering to generate a Cypher query that builds the knowledge graph, and apply NL-to-Code again to answer questions against it.

- Retrieve relevant information from the knowledge graph.

- Use prompt engineering to turn the retrieved results into answers, either with retrieval-augmented generation (RAG) or other prompting methods.

Covered Concepts

The notebook will be divided into the main components below:

- Introduction to Knowledge Graphs

- Use Cases

- Dataset overview

- Ontologies

- Method

- Entity Extraction (NER)

- Generate Knowledge Graph (NL-to-Code)

- Query Knowledge Graph (NL-to-Code)

- Alternate out-of-the-box solutions

- Evaluation

Use Case Overview

This notebook covers two use cases for knowledge graphs: an auto insurance company and a technology company. Both use cases have a predefined ontology, outlined in more detail later in the document. Some organizations do not have an ontology but would still like to use knowledge graphs for question answering. In that case, one option is an out-of-the-box solution (described in later sections); for example, LangChain extracts "knowledge triples" from documents to build its knowledge graph. Another alternative is to define the entities and relationships that matter most to the organization and use them as a starting point.

Use Case 1: Auto Insurance Company

Auto insurance firms receive hundreds of claims every day. In these claims, the policyholder is usually asked to describe the events in as much detail as possible. Because this information is free-form, its level of detail and style vary widely. Insurance companies want to pull specific details out of these narratives quickly so that claims can be processed faster.

Use Case 2: Technology Company

Chatbots are an increasingly popular way to answer customers' common questions about products. In the technology industry, answering those questions often requires combining unstructured sources (PDFs, text files) with structured sources (databases). By first converting unstructured data into an offline knowledge graph, a chatbot can reduce inference latency by retrieving only the relevant nodes and edges. This can speed up responses and improve customer satisfaction.

Dataset Breakdown

Use Case 1: Auto Insurance Company

We have 5 sample claims (text files) filed by policyholders. The information appears both in easily identifiable fields (Policyholder, Claim Date, Claim Number, etc.) and within the narratives written by the policyholders.

Here is an example of an insurance claim:

Claim Report

Policyholder: Jennifer Thompson

Claim Number: 123456789

Date of Loss: 08/01/2021

Policy Type: Comprehensive Auto Insurance

Product: All-Inclusive Auto Insurance Package

Description of Loss:

On August 1, 2021, at approximately 4:00 PM, Jennifer Thompson was driving her 2015 Honda Civic, which is covered under our All-Inclusive Auto Insurance Package. Jennifer's partner, Michael Thompson, and their child, Emily Thompson, were also in the vehicle at the time of the accident. The accident occurred when Jennifer, who was driving at a moderate speed, failed to notice a stop sign and collided with another vehicle at the intersection.

The collision resulted in significant damage to Jennifer's Honda Civic and the other vehicle involved. The front bumper, headlights, and hood of Jennifer's car were severely damaged. The airbags deployed, and the windshield cracked. The other vehicle, a 2017 Toyota Corolla, sustained significant damage to its side doors and panels.

Jennifer sustained minor injuries, including bruises and whiplash, as a result of the accident. Her partner, Michael, and their child, Emily, were fortunately unharmed. The driver of the other vehicle, a 30-year-old male named David Johnson, complained of neck pain and was transported to a nearby hospital for further examination.

Coverage:

Jennifer's All-Inclusive Auto Insurance Package provides coverage for the damages sustained in this accident. The package contains comprehensive collision coverage, which covers the damages to her 2015 Honda Civic. Additionally, personal injury protection (PIP) is included in her policy, covering Jennifer's medical expenses related to her minor injuries. Furthermore, liability coverage is provided in the package, which will cover the damages to the 2017 Toyota Corolla and any medical expenses incurred by David Johnson.

Conclusion:

Based on the information provided, it is determined that Jennifer Thompson caused the accident by failing to stop at the stop sign. The risk/event involved her Honda Civic and led to damages affecting both her vehicle and the Toyota Corolla. Jennifer's All-Inclusive Auto Insurance Package contains the necessary coverage to address the damages and injuries sustained in this accident. Our claims department will process the claim and coordinate with the involved parties to ensure a smooth resolution.

Our next step is to identify the named entities defined in the ontology (see the Ontologies section) and extract them along with their relationships.

Use Case 2: Technology Company

We used publicly available online documentation about Surface devices (e.g., Surface Pro 9, Surface Go 3, Surface Laptop 5) as the dataset for this use case. The web pages were converted into PDFs totaling about 1,000 pages. We extracted the text from these pages with Form Recognizer and then extracted the named entities and relationships of interest from that text.

Below is an example image of the online documentation used:

Ontologies

For knowledge graphs, an ontology is a description of the data structure that will be translated into the graph. A knowledge graph is created when you apply an ontology to a set of data points. The ontology outlines the entities and relationships that make up the knowledge graph and can serve as a schema to ensure consistency.

The ontology describes the basic entities and how they are connected to each other, giving a structured representation of how the elements of the data relate.

Later in the document, we demonstrate how to use the ontologies to generate a knowledge graph through entity extraction.

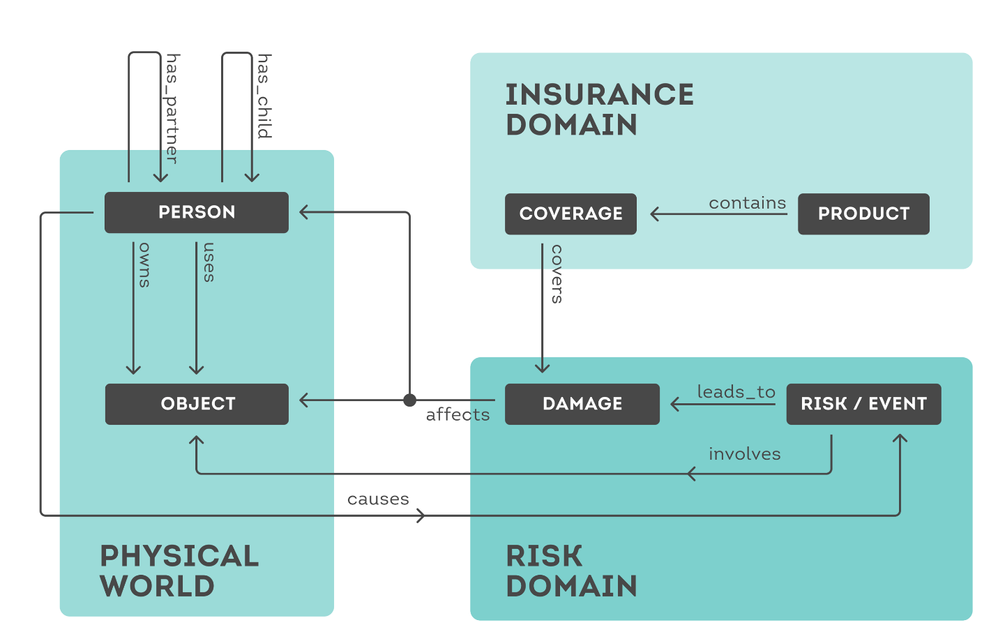

Use Case 1: Auto Insurance Company Ontology

For our insurance use case, the ontology is defined below. We will use this ontology to construct and query our knowledge graph.

Given this ontology, we will show how to generate the corresponding knowledge graph later in the notebook. For example, the ontology specifies that an event involves an object.

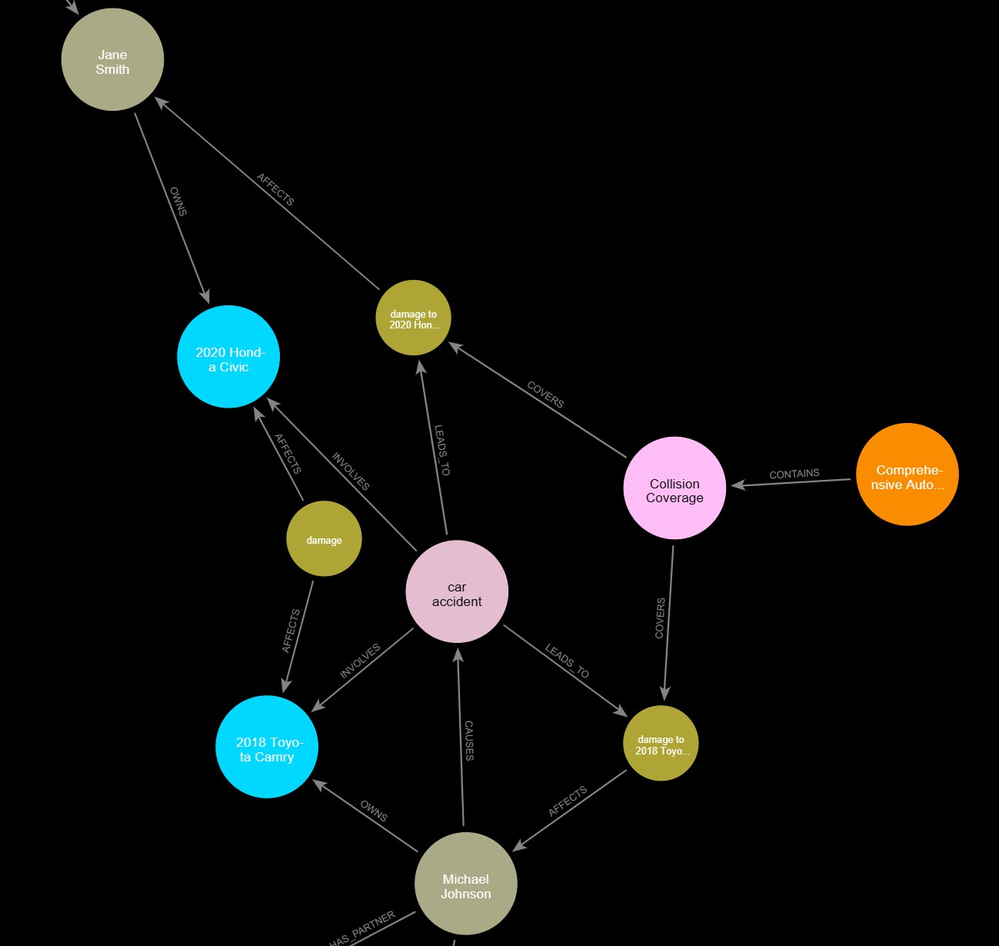

Below is a snippet of the knowledge graph generated from the ontology above. The circular nodes represent entities (such as a person, coverage, or object), which appear as gray rectangles in the ontology diagram, and the arrows between nodes represent the relationships defined in the ontology. For example, “Car accident” (entity “Event”) involves (relationship “involves”) “2018 Toyota Camry” (entity “Object”).

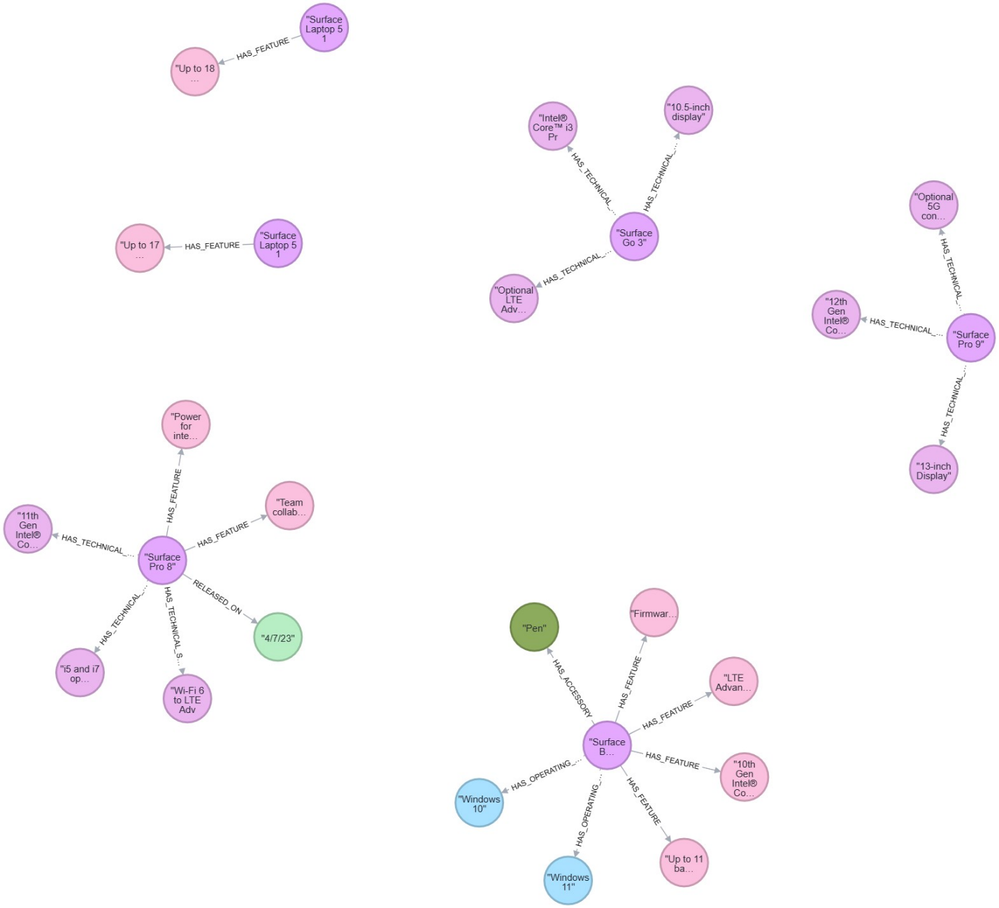

Use Case 2: Technology Company Ontology

For the technology company use case, the ontology is defined below as entities and relationships.

Entity types:

- Surface (the central node connecting to all devices belonging to the Surface family)

- Surface device (name of a Surface device), e.g. Surface Pro 6, Surface Pro X, Surface Slim Pen 2

- Features, e.g. Inking feedback, Windows Fax and Scan

- Accessories

- Operating system, e.g. Windows 11 ARM, Windows 10

- Technical specifications

Relationships:

- [Surface], specific device, [Surface device]

- [Surface device], has feature, [Feature]

- [Surface device], has accessories, [Accessory]

- [Surface device], has operating system, [Operating system]

- [Surface device], has technical specifications, [Technical specification]

Below is a snippet of the knowledge graph generated from the ontology above. The circular nodes represent entities (such as a feature, accessory, or operating system), and the arrows between them represent the relationships defined in the ontology. For example, Surface Hub (entity “Surface device”) has operating system (relationship “has operating system”) Windows 10 (entity “Operating system”).

We will be performing the following steps for both use cases:

- Perform Named Entity Recognition (NER) with GPT-4 to find the entities and relationships that form the Knowledge Graph (using a given ontology).

- Use GPT-4 to generate the code that builds the Knowledge Graph (NL-to-Code).

- Populate the Knowledge Graph with the unstructured data in the source documents (insurance claims or Surface documentation).

- Query the Knowledge Graph to find relevant information.

NER with Azure OpenAI

Named Entity Recognition (NER) is a common natural language processing task that extracts information from text and assigns it to predefined categories. Given the ontology defined above, we can perform NER with Azure OpenAI GPT-4 models through prompt engineering.

Specifically, we instruct GPT on how to extract entities that fit our ontology. We craft two prompts: a system prompt and a user prompt. The system message is placed at the beginning of the prompt and primes the model with the general context, instructions, or other information relevant to the use case. The user prompt can reinforce task-specific instructions and specify the output format.

We pass in our original data sources and perform NER with a few-shot approach, providing examples of what the desired output should look like. We also set the temperature to 0 to minimize randomness in the output. See more details on NER with AOAI.
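As a rough sketch of this setup, the snippet below builds the two-message prompt and sends it with temperature 0. It assumes the `openai` Python package (v1 or later), where an `AzureOpenAI` client exposes `chat.completions.create`. Appending the document text after the user prompt's trailing "Text:" marker is our assumption about how the prompt was assembled, and the function names are hypothetical.

```python
from typing import Any


def build_ner_messages(system_prompt: str, user_prompt: str, document: str) -> list[dict[str, str]]:
    """Build a chat message list: system prompt first, then the user prompt plus the document."""
    return [
        {"role": "system", "content": system_prompt},
        # Assumption: the source document is appended after the trailing "Text:" marker.
        {"role": "user", "content": f"{user_prompt}\n{document}"},
    ]


def run_ner(client: Any, deployment: str, messages: list[dict[str, str]]) -> str:
    """Call a chat completions deployment with temperature 0 and return the raw reply text."""
    response = client.chat.completions.create(
        model=deployment,  # for Azure OpenAI, this is the deployment name
        messages=messages,
        temperature=0,  # minimize randomness in the extracted tuples
    )
    return response.choices[0].message.content
```

For example, `client` could be created with `AzureOpenAI(azure_endpoint=..., api_key=..., api_version=...)`, and `deployment` is the name of your GPT-4 deployment; all of these values depend on your Azure resource.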

Use Case 1 (Auto Insurance) Prompts:

System Prompt:

'''Assistant is a Named Entity Recognition (NER) expert. The assistant can identify named entities such as a person, place, or thing. The assistant can also identify entity relationships, which describe how entities relate to each other (eg: married to, located in, held by). Identify the named entities and the entity relationships present in the text by returning comma separated list of tuples representing the relationship between two entities in the format (entity, relationship, entity). Only generate tuples from the list of entities and the possible entity relationships listed below. Return only generated tuples in a comma separated tuple separated by a new line for each tuple.

Entities:

- Person

- Object

- Damage

- Coverage

- Product

- Risk/Event

Relationships:

- [PERSON],has_partner,[PERSON]

- [PERSON],has_child,[PERSON]

- [PERSON],causes,[RISK/EVENT]

- [PERSON],owns,[OBJECT]

- [PERSON],uses,[OBJECT]

- [DAMAGE],affects,[PERSON]

- [DAMAGE],affects,[OBJECT]

- [RISK/EVENT],involves,[OBJECT]

- [RISK/EVENT],leads_to,[DAMAGE]

- [COVERAGE],covers,[DAMAGE]

- [PRODUCT],contains,[COVERAGE]

Example output:

Michael, has_partner, Emily

Michael, has_child, Josh

Michael, owns, Toyota Prius

Extracted Entities:'''

User Prompt:

"Identify the named entities and entity relationships in the insurance claim text above. Return the entities and entity relationships in a tuple separated by commas. Return only generated tuples in a comma separated tuple separated by a new line for each tuple.

Text:"
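The system prompt restricts the model to the listed relationships, but nothing guarantees the reply will comply. A minimal post-check, sketched below under the assumption that the reply has already been split into (entity, relationship, entity) tuples, keeps only relationships from the Use Case 1 list. `filter_by_ontology` and `ALLOWED_RELATIONS` are illustrative names.

```python
# Allowed relationship names, copied from the Use Case 1 system prompt above.
ALLOWED_RELATIONS = {
    "has_partner", "has_child", "causes", "owns", "uses",
    "affects", "involves", "leads_to", "covers", "contains",
}


def filter_by_ontology(triples, allowed=ALLOWED_RELATIONS):
    """Split (head, relation, tail) triples into ontology-conforming and rejected lists."""
    kept, rejected = [], []
    for head, relation, tail in triples:
        # Normalize case and spacing so "Has Child" and "has_child" compare equal.
        key = relation.strip().lower().replace(" ", "_")
        if key in allowed:
            kept.append((head, key, tail))
        else:
            rejected.append((head, relation, tail))
    return kept, rejected
```

For Use Case 2, the allowed set would hold the normalized names, such as `has_feature` and `released_on`.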

Use Case 2 (Technology) Prompts:

System Prompt:

'''Assistant is a Named Entity Recognition (NER) expert. The assistant can identify named entities such as a person, place, or thing. The assistant can also identify entity relationships, which describe how entities are associated or connected with each other (eg: married to, located in, held by). Identify the named entities and the entity relationships present in the text by returning comma separated list of tuples representing the relationship between two entities in the format (entity, relationship, entity). Only generate tuples from the list of entities and the possible entity relationships listed below. Return only generated tuples in a comma separated tuple separated by a new line for each tuple.

Entities:

- Surface device

- Features

- Accessories

- Operating system

- Technical specifications

- Release date

Relationships:

- [SURFACE_DEVICE], has feature, [FEATURES]

- [SURFACE_DEVICE], has accessories, [ACCESSORIES]

- [SURFACE_DEVICE], has operating system, [OPERATING_SYSTEM]

- [SURFACE_DEVICE], has technical specifications, [TECHNICAL_SPECIFICATIONS]

- [SURFACE_DEVICE], released on, [RELEASE_DATE]

Example output:

(Surface Pro 9, has feature, Battery life up to 15.5 hrs)

(Surface Laptop Go 2, released on, June 7 2022)'''

User Prompt:

"Identify the named entities and entity relationships in the technology documentation text above. Return the entities and entity relationships in a tuple separated by commas. Return only generated tuples in a comma separated tuple separated by a new line for each tuple.

Text:"
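Both prompts ask for one tuple per line, and the example outputs show tuples both with and without parentheses. The hypothetical helper below parses either form into Python tuples. It assumes the first two fields never contain commas; commas in the last field are kept.

```python
def parse_triples(text: str) -> list[tuple[str, str, str]]:
    """Parse model output with one 'entity, relationship, entity' tuple per line."""
    triples = []
    for raw in text.splitlines():
        line = raw.strip().lstrip("-").strip()  # tolerate bullet prefixes
        # Accept both formats shown in the prompts: with or without parentheses.
        if line.startswith("(") and line.endswith(")"):
            line = line[1:-1]
        # Split on the first two commas only, so commas inside the last entity survive.
        parts = [part.strip() for part in line.split(",", 2)]
        if len(parts) == 3 and all(parts):
            triples.append((parts[0], parts[1], parts[2]))
    return triples
```

Lines that do not split into three non-empty fields (blank lines, stray commentary) are skipped rather than raising an error.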

Generating a Knowledge Graph with Azure OpenAI

In this section, we use Neo4j to store and visualize our knowledge graph. Neo4j is a native graph database that implements a true graph model all the way down to the storage level. It is highly scalable and schema-optional. Neo4j stores data as nodes, the edges (relationships) connecting them, and properties on both nodes and edges, and it is queried with a declarative language called Cypher.

First, we must generate a graph database that we can query. To do this, we convert our entity outputs into a CSV file that serves as input to our GPT-4 prompt, and then ask GPT-4 to generate the Cypher query that creates the knowledge graph in Neo4j. Here we show how GPT-4 can generate a Cypher query that creates all the nodes and relationships found during entity extraction.
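The post does not show the CSV layout, so the column names in this sketch are assumptions. Python's standard `csv` module handles quoting, so an entity such as a specification containing a comma stays in a single field.

```python
import csv
import io


def triples_to_csv(triples) -> str:
    """Serialize (head, relationship, tail) triples as CSV text with a header row."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    # Hypothetical column names; the original CSV layout is not specified.
    writer.writerow(["entity_1", "relationship", "entity_2"])
    writer.writerows(triples)
    return buffer.getvalue()
```

The resulting text could then be placed after the `Data:` marker in the system prompt shown below.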

To generate the Cypher query, we use a type of prompt engineering called Natural Language (NL) to Code: we write the instructions in natural language and specify that the output should be code.

The prompts used are shown below. Note that the same prompts were used for both Use Case 1 and Use Case 2 in this step.

System Prompt:

'''Assistant is an expert in Neo4j Cypher development. Create a cypher query to generate a graph using the data points provided. Data:'''

User Prompt:

"Generate a cypher query to create new nodes and their relationships given the data provided. Return only the cypher query. Cypher query: "

By naming the target language in the instructions and ending the prompt with a slot for the output, we reinforce the expected language and format of the response.
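For comparison, the same tuples can also be turned into Cypher without an LLM. This is a swapped-in, deterministic technique, not the NL-to-Code approach used in this post. Because the tuples carry no entity types, the sketch assumes a single generic `Entity` label with a `name` property; both names are illustrative. `MERGE` is used so that an entity mentioned in several documents ends up as a single node.

```python
import re


def to_rel_type(relationship: str) -> str:
    """Turn 'has feature' or 'has_partner' into an upper-case relationship type."""
    rel = re.sub(r"[^0-9A-Za-z]+", "_", relationship.strip()).strip("_").upper()
    if not rel or rel[0].isdigit():
        raise ValueError(f"cannot build a relationship type from {relationship!r}")
    return rel


def triples_to_cypher(triples):
    """Return (query, parameters) pairs that MERGE each triple into the graph."""
    statements = []
    for head, relationship, tail in triples:
        # Entity names are passed as parameters. Relationship types are sanitized
        # and inlined, since many Cypher versions do not accept them as parameters.
        query = (
            "MERGE (a:Entity {name: $head}) "
            "MERGE (b:Entity {name: $tail}) "
            f"MERGE (a)-[:{to_rel_type(relationship)}]->(b)"
        )
        statements.append((query, {"head": head, "tail": tail}))
    return statements
```

Each pair could then be executed with `session.run(query, params)` from the `neo4j` Python driver. The trade-off is that this loses the richer labels and properties an LLM can infer from the ontology.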

The model's output is a Cypher query that can be copied and pasted directly into Neo4j, after which the knowledge graph is ready to be queried.
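In practice, models sometimes wrap the query in Markdown fences even when asked to return only Cypher. The sketch below strips such fences and, as an alternative to copying and pasting, runs the statement through the official `neo4j` Python driver. The helper names are hypothetical and the default database name `neo4j` is an assumption. `session.run` executes one statement at a time, so a multi-statement script would need to be split first.

```python
import re

# Three backticks, built this way so the snippet can itself sit inside a Markdown code block.
FENCE = "`" * 3
FENCED = re.compile(
    re.escape(FENCE) + r"[A-Za-z]*\s*\n(.*?)\n?\s*" + re.escape(FENCE), re.DOTALL
)


def extract_cypher(reply: str) -> str:
    """Return the Cypher inside a fenced block if present, otherwise the whole reply."""
    match = FENCED.search(reply)
    return (match.group(1) if match else reply).strip()


def run_cypher(driver, query: str, database: str = "neo4j") -> list:
    """Run a single Cypher statement with the neo4j driver and return its records."""
    with driver.session(database=database) as session:
        # Consume the result inside the session, before the session closes.
        return list(session.run(query))
```

Here, `driver` would typically come from `GraphDatabase.driver(uri, auth=(user, password))` in the `neo4j` package.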

Querying a Knowledge Graph with Azure OpenAI

Now that we have our knowledge graph, we can use it to retrieve answers quickly. Depending on the format of the answer, the prompt-completion problem can be NL-to-NL (for text answers) or NL-to-Code (for graph answers in Neo4j). In the section below, we describe NL-to-Code question answering. Knowledge graphs concisely represent all the entities relevant to the use case.

Using knowledge graphs as the input for question answering may help reduce the number of hops or API calls needed to retrieve answers from unstructured data sources.

As in the previous section, we put the instructions in the system prompt and pass the question as the user prompt. We ask GPT to return a Cypher query that, when executed, returns the answer to our question.
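Because the generated query is executed as-is, it can be worth rejecting anything that writes to the graph before running it. The check below is a heuristic sketch, not a Cypher parser. It blanks out string literals and then looks for write clauses, so it may flag a harmless query (for example, one reading a property named `set`) and it does not inspect procedure calls.

```python
import re

# Cypher clauses that modify the graph or its schema.
WRITE_CLAUSES = re.compile(
    r"\b(CREATE|MERGE|DELETE|DETACH|SET|REMOVE|DROP|LOAD\s+CSV|FOREACH)\b",
    re.IGNORECASE,
)
# Single- or double-quoted string literals, allowing backslash escapes.
STRING_LITERAL = re.compile(r"'(?:[^'\\]|\\.)*'" + r'|"(?:[^"\\]|\\.)*"')


def is_read_only(query: str) -> bool:
    """Heuristic check that a generated question-answering query only reads data."""
    # Blank out literals first so a value like 'set of tires' is not flagged.
    without_strings = STRING_LITERAL.sub("''", query)
    return WRITE_CLAUSES.search(without_strings) is None
```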

Note that this NL-to-Code querying approach requires a predefined ontology, which must be passed into the prompt to generate meaningful queries. The prompt must specify the naming conventions used in the graph: because the question is converted into a Cypher query, the query can only find matches if it uses the graph's exact labels, property names, and existing relationships.

For the user message, you can provide the query you wish to answer based on the graph.
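Since a query only matches if it uses the graph's exact names, a generated query can be checked against the schema before it is run. This sketch uses the Use Case 1 node labels and relationship types from the system prompt below. The regular expressions are a simplification that only captures the first label or type in each pattern.

```python
import re

# Labels and relationship types from the Use Case 1 schema prompt.
SCHEMA_LABELS = {"Accident", "Car", "Coverage", "Damage", "Insurance", "Person"}
SCHEMA_RELS = {
    "CONTAINS", "LEADS_TO", "INVOLVES", "COVERS", "AFFECTS",
    "USES", "OWNS", "HAS_CHILD", "HAS_PARTNER", "CAUSES",
}

NODE_LABEL = re.compile(r"\(\s*\w*\s*:\s*(\w+)")  # e.g. (p:Person
REL_TYPE = re.compile(r"\[\s*\w*\s*:\s*(\w+)")  # e.g. [:CAUSES


def unknown_names(query: str) -> set[str]:
    """Return labels or relationship types in the query that are not in the schema."""
    labels = set(NODE_LABEL.findall(query))
    rels = set(REL_TYPE.findall(query))
    return (labels - SCHEMA_LABELS) | (rels - SCHEMA_RELS)
```

A non-empty result is a signal to regenerate the query (for example, by feeding the unknown names back to the model) rather than running it against the graph.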

Use Case 1 (Auto Insurance) Prompt and Example Output:

System Prompt:

'''Assistant is an expert in Neo4j Cypher development. Only return a cypher query based on the user query. The cypher graph has the following schema:

Nodes: Accident, Car, Coverage, Damage, Insurance, Person

Entity Properties:

- Damage.description

- Accident.type

- Car.model

- Coverage.type

- Insurance.type

- Person.name

Relationships:

- Insurance -> CONTAINS -> Coverage

- Accident -> LEADS_TO -> Damage

- Accident -> INVOLVES -> Car

- Coverage -> COVERS -> Damage

- Damage -> AFFECTS -> Car

- Damage -> AFFECTS -> Person

- Person -> USES -> Car

- Person -> OWNS -> Car

- Person -> HAS_CHILD -> Person

- Person -> HAS_PARTNER -> Person

- Person -> CAUSES -> Accident'''

Example questions and output:

- Question: Find all people who have caused an accident and the type of accident they caused. MATCH (p:Person)-[:CAUSES]->(a:Accident) RETURN p.name as Person, a.type as Accident_Type

- Question: What types of insurance contain specific coverages? MATCH (i:Insurance)-[:CONTAINS]->(c:Coverage) RETURN i.type, c.type

- Question: What types of insurance coverages cover specific damages? MATCH (c:Coverage)-[:COVERS]->(d:Damage) RETURN c.type, d.description

- Question: Find all coverages that cover a specific damage type and the insurance they belong to. MATCH (cov:Coverage)-[:COVERS]->(d:Damage {description: 'bruises'}), (i:Insurance)-[:CONTAINS]->(cov) RETURN cov.type as Coverage_Type, i.type as Insurance_Type

- Question: Find the person who caused the accident, what damage occurred, and to whom. MATCH (p:Person)-[:CAUSES]->(a:Accident), (a)-[:LEADS_TO]->(d:Damage), (d)-[:AFFECTS]->(i:Person) RETURN p.name as Person, i.name as Victim, d as Damage

- Question: Given a car model, find the car owner and the damage that occurred to the car. MATCH (a:Accident)-[:INVOLVES]->(c:Car {model: '2015 Honda Accord'}), (a)-[:LEADS_TO]->(d:Damage), (p:Person)-[:OWNS]->(c) RETURN p.name as Car_Owner, d.description as Damage_Description

Use Case 2 (Technology Company) Prompt and Example Output:

System Prompt:

'''Assistant is an expert in Neo4j Cypher development. Only return a cypher query based on the user query. The cypher graph has the following schema:

Nodes: Surface, Device, Feature, OS, Spec

Relationships:

- Surface -> SPECIFIC_DEVICE -> Device

- Device -> HAS_FEATURE -> Feature

- Device -> HAS_OS -> OS

- Device -> HAS_SPEC -> Spec

Surface node does not accept any value for name.'''

Example questions and output:

- Question: What are some of the features of Surface Go 3 for Business? MATCH (s:Surface)-[:SPECIFIC_DEVICE]->(d:Device {name: 'Surface Go 3 for Business'})-[:HAS_FEATURE]->(f:Feature) RETURN f.name

- Question: Give me all the devices that have 11 hours of battery. MATCH (d:Device)-[:HAS_SPEC]->(s:Spec) WHERE s.battery = '11 hours' RETURN d

- Question: What are the features and usable operating systems of Surface Go 3 for Business? MATCH (s:Surface)-[:SPECIFIC_DEVICE]->(d:Device {name: 'Surface Go 3 for Business'})-[:HAS_FEATURE]->(f:Feature) WITH collect(f.name) as Features MATCH (s:Surface)-[:SPECIFIC_DEVICE]->(d:Device {name: 'Surface Go 3 for Business'})-[:HAS_OS]->(o:OS) RETURN Features, collect(o.name) as OperatingSystems

- Question: Give me all Surface devices and their supported OS's. MATCH (s:Surface)-[:SPECIFIC_DEVICE]->(d:Device)-[:HAS_OS]->(o:OS) RETURN s, d, o

- Question: Give me all Surface devices and their technical specifications. MATCH (s:Surface)-[:SPECIFIC_DEVICE]->(d:Device)-[:HAS_SPEC]->(sp:Spec) RETURN s, d, sp

- Question: Find all Surface devices that support a specific OS and the technical specifications of those devices. MATCH (s:Surface)-[:SPECIFIC_DEVICE]->(d:Device)-[:HAS_OS]->(o:OS {name: 'YourSpecificOSName'}), (d)-[:HAS_SPEC]->(sp:Spec) RETURN s, d, o, sp

Alternate Methods for Knowledge Graphs

The sections above assume that the organization already has a predefined ontology it would like to work with. If the organization does not have one, below are some out-of-the-box options for question answering with knowledge graphs:

1. LangChain GraphQA: covers question answering over a graph structure. GraphQA takes text as input and extracts "knowledge triples" to identify entities and build the graph; a knowledge triple is a clause containing a subject, a predicate, and an object. It then uses this graph to answer questions. The GraphQA prompts are prebuilt but can be customized for the use case. Visit https://python.langchain.com/docs/modules/chains/additional/graph_qa for more details.

2. OntoGPT: the OntoGPT framework and its SPIRES tool offer a more structured way to extract knowledge from unstructured text for integration into knowledge graphs. This object-oriented approach works within the constraints of LLMs, can handle complex, nested relationships, and focuses on consistency, quality control, and ontology alignment. Visit https://apex974.com/articles/ontogpt-for-schema-based-knowledge-extraction for more details.

Evaluation

In this notebook, we used LLMs for two major tasks: Named Entity Recognition (NER) and Natural Language-to-Code (NL-to-Code). Each task can be evaluated independently during the development cycle. This section describes evaluation methods for each.

NER

To evaluate NER, you need a text document and the corresponding ground-truth entities and relationships for that document. After running the NER task and generating a list of entities and relationships, you can compare the generated results with the ground truth as a binary classification problem (each extracted item is either correct or not) and compute precision, recall, and accuracy from the resulting confusion matrix.

When evaluating NER, we recommend reviewing fuzzy matches between the ground-truth entities and the GPT-4-extracted entities to confirm that the required entities were extracted and mapped correctly.

Refer to this documentation for more information regarding confusion matrices for binary classification: sklearn.metrics.confusion_matrix — scikit-learn 1.3.0 documentation
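A minimal sketch of the fuzzy comparison is shown below. It uses Python's `difflib.SequenceMatcher` as the fuzzy matcher and a similarity threshold of 0.85; both are our assumptions, not the exact method behind the results that follow. It reports precision, recall, and F1: accuracy additionally requires counting true negatives, which depends on how candidate entity pairs are enumerated.

```python
from difflib import SequenceMatcher


def _norm(text: str) -> str:
    """Lower-case, turn underscores into spaces, and collapse whitespace."""
    return " ".join(text.replace("_", " ").lower().split())


def _similar(a: str, b: str, threshold: float) -> bool:
    return SequenceMatcher(None, _norm(a), _norm(b)).ratio() >= threshold


def score_triples(predicted, gold, threshold: float = 0.85):
    """Greedy one-to-one fuzzy matching of predicted vs. ground-truth triples.

    Returns (precision, recall, f1). A predicted triple is a true positive when
    each of its three elements is at least `threshold` similar to an unmatched
    ground-truth triple.
    """
    unmatched = list(gold)
    true_pos = 0
    for pred in predicted:
        for i, ref in enumerate(unmatched):
            if all(_similar(p, r, threshold) for p, r in zip(pred, ref)):
                true_pos += 1
                del unmatched[i]  # each ground-truth triple can match only once
                break
    precision = true_pos / len(predicted) if predicted else 0.0
    recall = true_pos / len(gold) if gold else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1
```

Matches just above or below the threshold are good candidates for the manual review recommended above.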

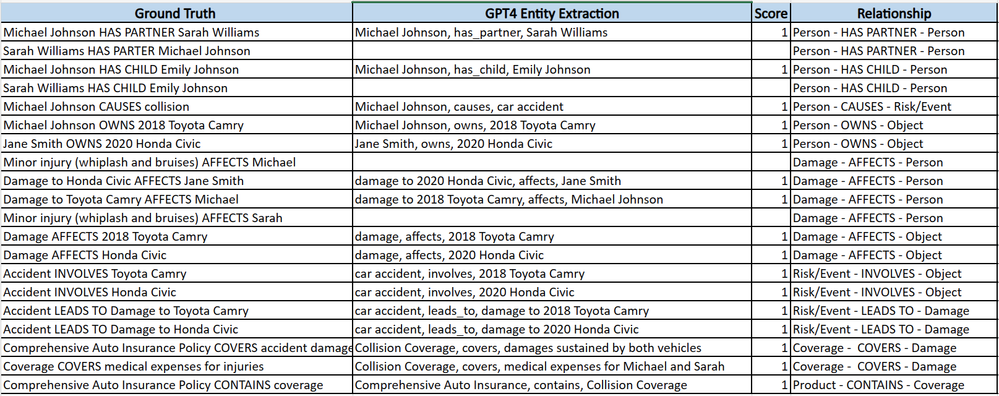

Use Case 1 (Auto Insurance) NER Evaluation:

The image above shows an example evaluation for the first claim in the data folder. It lists the ground-truth relationships mapped from the ontology alongside the GPT-4 NER results. GPT-4 correctly classified 16 of 20 entities. This could be improved with further prompt engineering, for example by adding more few-shot examples, or with fine-tuning.

Use Case 2 (Technology) NER Evaluation:

For Use Case 2, the image below shows an evaluation based on 3 online technical-support PDFs for Surface devices. GPT-4 correctly classified 19 of 23 entities.

NL-to-Code

To evaluate NL-to-Code tasks, a domain expert should be involved as a human in the loop to check the accuracy and viability of the generated code. Several metrics can be used, such as code readability, whether the code runs, and the accuracy of the results. For knowledge graphs specifically, we should also check that all the entities and relationships identified in the NER step appear in the knowledge graph.

All of these metrics require domain expertise to provide iterative feedback on the prompts and improve the results. Because the same functionality can be written in many different ways, comparing generated code against a reference implementation is not reliable. Instead, an evaluation dataset can pair each query with its expected outcome, and each result can be scored as correct or incorrect.

The first NL-to-Code task converted the extracted entities into a Cypher query used to populate the knowledge graph. The second NL-to-Code task answered questions against the knowledge graph. Here, an expert who knows the ontology of the knowledge graph is needed to ask grounded questions. To mimic this, we wrote five questions for each use case and checked code completion (did the query run?) and response correctness (was the answer accurate?).
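An execution-based comparison fits the query/expected-outcome dataset described above. In the sketch below, each case records whether the generated query ran and which rows it returned; the dictionary keys are hypothetical. Rows are compared as multisets because Cypher does not guarantee row order without `ORDER BY`, and the sketch assumes rows contain hashable values such as strings and numbers (for example, returned properties rather than whole nodes).

```python
from collections import Counter


def _as_multiset(rows) -> Counter:
    # Compare rows regardless of order, but keep duplicate rows.
    return Counter(tuple(row) for row in rows)


def evaluate(cases) -> tuple[float, float]:
    """Score NL-to-Code cases as (completion rate, accuracy).

    Each case is a dict with 'ran' (bool), 'rows' (returned rows, ignored when
    'ran' is False) and 'expected' (the expected rows).
    """
    if not cases:
        return 0.0, 0.0
    ran = sum(1 for case in cases if case["ran"])
    correct = sum(
        1
        for case in cases
        if case["ran"] and _as_multiset(case["rows"]) == _as_multiset(case["expected"])
    )
    return ran / len(cases), correct / len(cases)
```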

Use Case 1 (Auto Insurance) NL-to-Code Evaluation:

For Use Case 1, GPT-4 extracted 16 entity-relationship tuples in the NER step above, and it correctly mapped all 16 into the Cypher query. We verified this by checking the nodes that were created and confirming that the mapping between entities and relationships was reflected in the Cypher query.

For the question-answering NL-to-Code task, all 5 of the 5 queries were correct for both code completeness and response accuracy.
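The mapping check described above can be partly automated by confirming that every entity name from the NER tuples appears as a string literal in the generated Cypher. This hypothetical helper uses exact string matching and does not handle escaped quotes, so differences in casing or punctuation will show up as missing entities and still need human review.

```python
import re

# Single- or double-quoted string literals (no escape handling).
LITERAL = re.compile(r"'([^']*)'" + r'|"([^"]*)"')


def missing_entities(triples, cypher: str) -> set[str]:
    """Return entity names from the NER triples that never appear as a literal in the Cypher."""
    literals = {single or double for single, double in LITERAL.findall(cypher)}
    entities = {name for head, _, tail in triples for name in (head, tail)}
    return entities - literals
```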

Use Case 2 (Technology) NL-to-Code Evaluation:

For Use Case 2, GPT-4 extracted 19 entity-relationship tuples in the NER step above, and it correctly mapped all 19 into the Cypher query. We verified this by checking the nodes that were created and confirming that the mapping between entities and relationships was reflected in the Cypher query.

For the question-answering NL-to-Code task, all 5 of the 5 queries were correct for both code completeness and response accuracy.

Conclusion

In conclusion, we have shown how to use Azure OpenAI GPT models together with Neo4j to build and query knowledge graphs. We found the combination of AOAI and Neo4j to be very effective for generating and querying knowledge graphs. This methodology can be applied to many technical use cases, such as NER and NL-to-SQL, across different industries.
