Forthcoming Upgrade to HBv2 VMs for HPC

At Supercomputing 2019, we announced HBv2 virtual machines (VMs) for HPC with AMD EPYC™ 'Rome' CPUs and the cloud’s first use of HDR 200 Gbps InfiniBand networking from NVIDIA Networking (formerly Mellanox). HBv2 VMs have proven very popular with HPC customers on a wide variety of workloads, and have powered some of the most advanced at-scale computational science ever on the public cloud.

Today, we’re excited to share that we’re making HBv2 VMs even better. By taking learnings from our efforts to optimize HBv3 virtual machines, we will soon be enhancing the HBv2-series in the following ways:

- Simpler NUMA topology for application compatibility

- Better HPC application performance

- New constrained core VM sizes to better fit application core-count or licensing requirements

- A fix for a known issue that prevented offering HBv2 VMs with 456 GB of RAM

This article details the changes we will soon make to the global HBv2 fleet, what the implications are for HPC applications, and what actions we advise so that customers can smoothly navigate this transition and get the most out of the upgrade.

Overview of Upgrades to HBv2

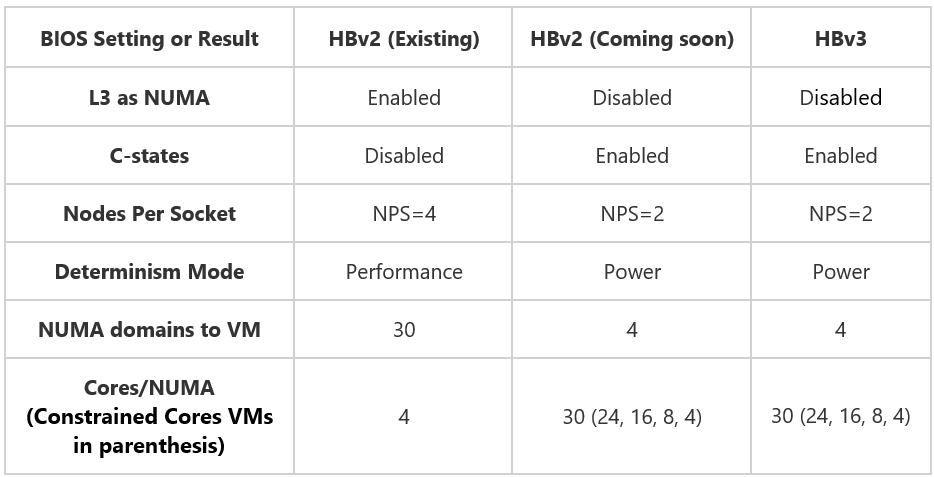

The BIOS of all HBv2 servers will be upgraded with the platform changes tabulated below. These upgrades bring the BIOS configurations of HBv3 (already documented in HBv3 VM Topology) and HBv2 into alignment with one another, and in doing so synchronize how the hardware topology appears and operates within the VM.

Simpler NUMA topology for HPC Applications

A notable improvement will be a significant reduction in the NUMA complexity presented to customer VMs. To date, HBv2 VMs have featured 30 NUMA domains (4 cores per NUMA domain). After the upgrade, every HBv2 VM will feature a much simpler 4-NUMA configuration. This will help customer applications that do not function correctly or optimally with a many-NUMA hardware topology.
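To confirm which topology a given Linux VM presents, the NUMA domain count can be read from inside the guest. The following is a minimal sketch, assuming a Linux guest that exposes the standard sysfs file `/sys/devices/system/node/online`; the helper names `parse_id_list` and `online_numa_nodes` are illustrative and not part of any Azure tooling.

```python
from pathlib import Path


def parse_id_list(text: str) -> list[int]:
    """Expand a Linux sysfs ID list such as "0-3" or "0,2-3" into integers."""
    ids: list[int] = []
    for part in text.strip().split(","):
        if not part:
            continue
        if "-" in part:
            low, high = part.split("-")
            ids.extend(range(int(low), int(high) + 1))
        else:
            ids.append(int(part))
    return ids


def online_numa_nodes(path: str = "/sys/devices/system/node/online") -> list[int]:
    """Return the NUMA node IDs the guest OS reports as online."""
    return parse_id_list(Path(path).read_text())


if __name__ == "__main__":
    try:
        nodes = online_numa_nodes()
    except OSError:
        print("NUMA sysfs entry not found; is this a Linux guest?")
    else:
        # Per the article: 30 domains before the upgrade, 4 after it.
        print(f"{len(nodes)} NUMA domain(s) visible: {nodes}")
```

On a VM with the prior configuration this would be expected to report 30 domains, and 4 on an upgraded one.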

In addition, while there will be no hard requirement to use HBv2 VMs any differently than before, the best practice for pinning processes to optimize performance and performance consistency will change. From an application runtime perspective, the existing HBv2 process pinning guidance will no longer apply. Instead, optimal process pinning for HBv2 VMs will be identical to what we already advise for HBv3 VMs. By adopting this guidance, users will gain the following benefits:

- Best performance

- Best performance consistency

- Single configuration approach across both HBv3 and HBv2 VMs (for customers that want to use HBv2 and HBv3 VMs as a fungible pool of compute)
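As a rough illustration of what NUMA-aware pinning looks like on the new topology, the sketch below spreads a VM's processes evenly over the 4 NUMA domains of a 120-core HBv2 VM. It is not the HBv3 pinning guidance itself, which is not reproduced here. It assumes that core IDs are numbered contiguously within each NUMA domain (an assumption to verify on the VM, for example with `lscpu`), and the function names are hypothetical.

```python
import os

TOTAL_CORES = 120   # full-size HBv2 VM, per the article
NUMA_DOMAINS = 4    # post-upgrade topology, per the article


def cores_for_rank(local_rank: int, ranks_per_vm: int,
                   total_cores: int = TOTAL_CORES,
                   numa_domains: int = NUMA_DOMAINS) -> set[int]:
    """Return the core IDs one process should be pinned to.

    ASSUMPTION: cores are numbered contiguously within each NUMA domain.
    """
    if not 0 <= local_rank < ranks_per_vm:
        raise ValueError("local_rank must be in [0, ranks_per_vm)")
    if ranks_per_vm % numa_domains != 0:
        raise ValueError("ranks_per_vm must be a multiple of the NUMA domain count")
    cores_per_numa = total_cores // numa_domains
    ranks_per_numa = ranks_per_vm // numa_domains
    cores_per_rank = cores_per_numa // ranks_per_numa
    if cores_per_rank == 0:
        raise ValueError("more ranks than cores in a NUMA domain")
    # Fill one NUMA domain with consecutive ranks before moving to the next.
    domain, slot = divmod(local_rank, ranks_per_numa)
    start = domain * cores_per_numa + slot * cores_per_rank
    return set(range(start, start + cores_per_rank))


def pin_current_process(local_rank: int, ranks_per_vm: int) -> set[int]:
    """Pin the calling process to its core set (Linux only)."""
    cores = cores_for_rank(local_rank, ranks_per_vm)
    os.sched_setaffinity(0, cores)  # pid 0 means the calling process
    return cores
```

With 4 processes per VM, each process receives one whole NUMA domain of 30 cores; with 8, each receives half a domain. In practice most MPI launchers offer their own binding options, which are usually preferable to pinning by hand.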

Better HPC workload performance

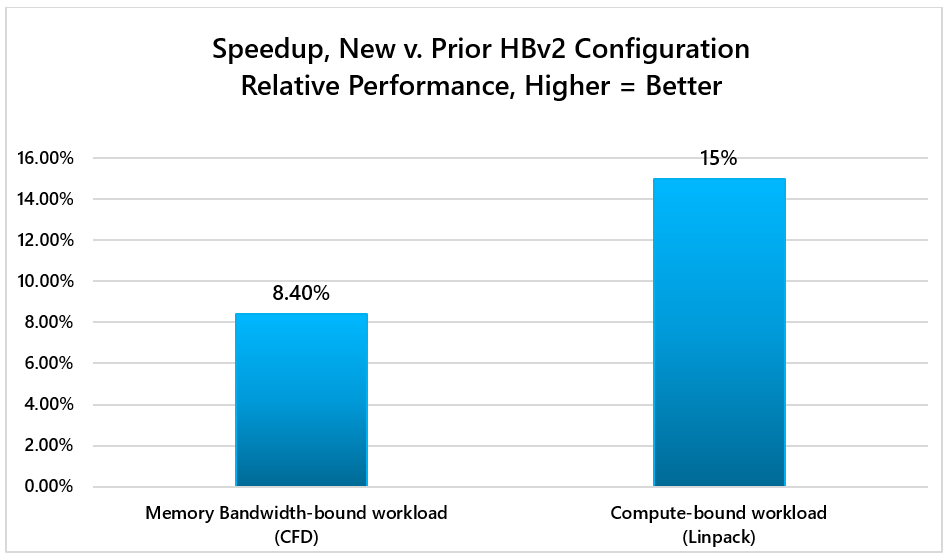

These changes will also result in higher performance across a diverse set of HPC workloads due to enhancements to peak single-core boost clock frequencies and better memory interleaving. In our testing, the upgrade to HBv2 VMs improves representative memory bandwidth-bound and compute-bound HPC workloads by as much as 8-15%.

Constrained Core VM sizes

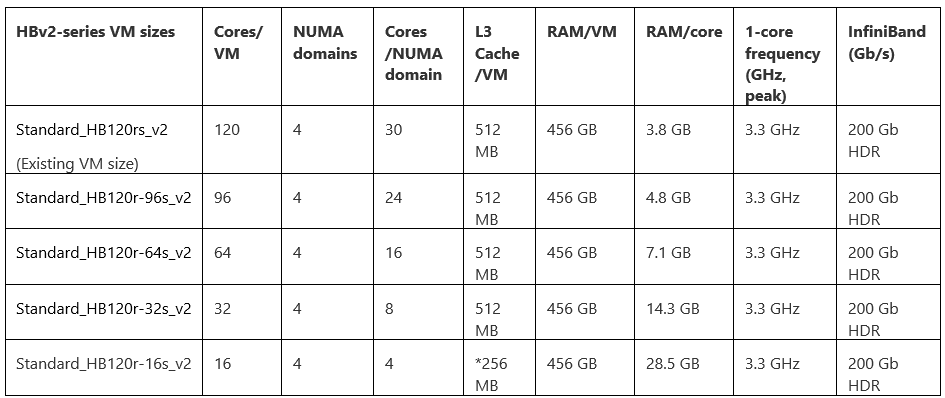

Finally, soon after the BIOS changes are made to HBv2 servers, we will introduce four new constrained core VM sizes. See below for details:

*Due to the architecture of Zen2-based CPUs like 2nd Generation EPYC, the 16-core HBv2 VM size only exposes 256 MB out of a possible 512 MB of L3 cache within the server.

As with HBv3 VMs, constrained core VM sizes for the HBv2-series will enable customers to right-size the core count of their VMs on the spectrum from maximum performance per core (16-core VM size) to maximum performance per VM (120-core VM size). Across all VM sizes, global shared assets like memory bandwidth, memory capacity, L3 cache, InfiniBand, local SSD, etc. remain constant. As a result, choosing a smaller core count increases the share of those assets available to each core. In HPC, common scenarios for which this is useful include:

- Providing more memory bandwidth per CPU core for CFD workloads.

- Allocating more L3 cache per core for RTL simulation workloads.

- Driving higher CPU frequencies to fewer cores in license-bound scenarios.

- Giving more memory or local SSD to each core.
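The per-core effect is simple arithmetic. The sketch below works it through for memory capacity only, using the 456 GB per-VM figure and the 16-core and 120-core sizes named in this article; the helper name `per_core_share` is illustrative.

```python
def per_core_share(vm_total: float, cores: int) -> float:
    """Share of a VM-wide resource available to each core."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return vm_total / cores


if __name__ == "__main__":
    RAM_GB = 456  # per-VM memory after the upgrade, per the article
    for cores in (120, 16):
        share = per_core_share(RAM_GB, cores)
        print(f"{cores:>3}-core size: {share:.1f} GB of RAM per core")
```

The same division applies to any shared asset whose VM-wide total is unchanged across sizes. L3 cache on the 16-core size is the exception noted above, since that size exposes only part of the server's L3.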

Fixing Known Issue with Memory Capacity in HBv2 VMs

The upgrade will also address a known issue from late 2021 that required a reduction from the previously offered 456 GB of RAM per HBv2 VM to only 432 GB. After the upgrade is complete, all HBv2 VMs will once again feature 456 GB of RAM.

Navigating the Transition

Customers will be notified via the Azure Portal shortly before the upgrade process begins across the global HBv2 fleet. From that point forward, any new HBv2 VM deployments will land on servers featuring the new configuration.

Because many scalable HPC workloads, especially tightly coupled workloads, expect a homogeneous configuration across compute nodes, we *strongly advise* against mixing HBv2 configurations for a single job. Customers whose VM deployments predate the start of the upgrade will still be running on servers with the prior BIOS configuration. Once the upgrade has rolled out across the fleet, we recommend that these customers de-allocate and re-allocate their VMs so that they have a homogeneous pool of HBv2 compute resources.
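One way to spot a mixed pool before launching a job is to compare the NUMA domain count reported by each node. The sketch below assumes the counts have already been gathered per hostname by some means of the operator's choosing (for example, by running `lscpu` on each node); the hostnames and function names are hypothetical.

```python
def is_homogeneous(numa_counts: dict[str, int]) -> bool:
    """True when every node reports the same number of NUMA domains."""
    return len(set(numa_counts.values())) <= 1


def nodes_needing_reallocation(numa_counts: dict[str, int],
                               expected: int = 4) -> list[str]:
    """Hostnames whose NUMA count differs from the post-upgrade value of 4."""
    return sorted(host for host, count in numa_counts.items()
                  if count != expected)


if __name__ == "__main__":
    # Hypothetical pool: two upgraded nodes and one on the prior configuration.
    pool = {"hbv2-node-a": 4, "hbv2-node-b": 4, "hbv2-node-c": 30}
    print("homogeneous:", is_homogeneous(pool))
    print("re-allocate:", nodes_needing_reallocation(pool))
```

Nodes flagged this way are candidates for the de-allocate and re-allocate step described above.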

As part of the notifications sent via the Azure Portal, we will also advise customers to visit the Azure Documentation site for the HBv2-series virtual machines, where all changes will be described in greater detail.

We are excited to bring these enhancements to HBv2 virtual machines to our customers across the world. We also welcome customer questions and feedback at Azure HPC Feedback.
