Build Your Feature Engineering System on AML Managed Feature Store and Microsoft Fabric

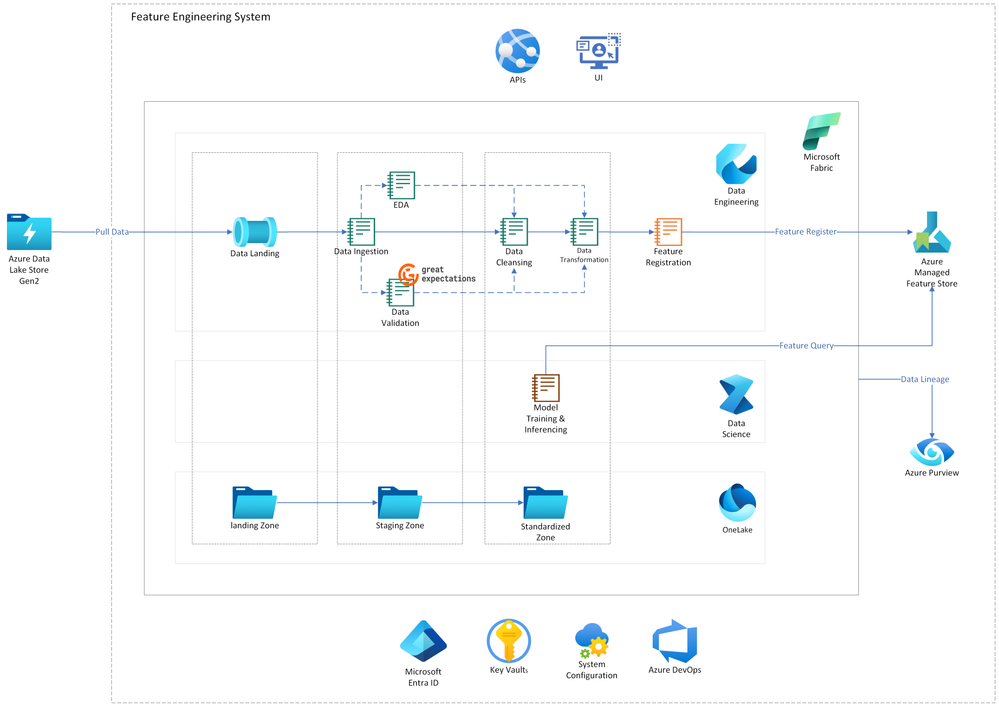

Feature Engineering System

Feature engineering is the process of using domain knowledge to extract features (characteristics, properties, attributes) from raw data. The extracted features are used to train models that predict values for relevant business scenarios. A feature engineering system provides the tools, processes, and techniques to perform feature engineering consistently and efficiently.
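To make the definition concrete, here is a minimal sketch that derives features from a single raw taxi trip record. The input field names (pickup_datetime, dropoff_datetime) and the derived hour_pickup, is_weekend, and trip_minutes features are hypothetical. Only borough_id and weekday_pickup appear in the sample described later.

```python
from datetime import datetime


def extract_features(trip: dict) -> dict:
    """Derive model features from one raw taxi trip record (hypothetical schema)."""
    pickup = datetime.fromisoformat(trip["pickup_datetime"])
    dropoff = datetime.fromisoformat(trip["dropoff_datetime"])
    return {
        "borough_id": trip["borough_id"],
        # Day of week of the pickup: Monday == 0 ... Sunday == 6
        "weekday_pickup": pickup.weekday(),
        "hour_pickup": pickup.hour,
        "is_weekend": pickup.weekday() >= 5,
        # Trip duration in minutes, computed from two raw timestamps
        "trip_minutes": (dropoff - pickup).total_seconds() / 60,
    }


raw_trip = {
    "pickup_datetime": "2024-03-02T08:15:00",
    "dropoff_datetime": "2024-03-02T08:40:00",
    "borough_id": 3,
}
print(extract_features(raw_trip))
# {'borough_id': 3, 'weekday_pickup': 5, 'hour_pickup': 8, 'is_weekend': True, 'trip_minutes': 25.0}
```

Each output key is a feature a model can consume directly, while the raw timestamps themselves are not.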

This article describes how to build a feature engineering system on Azure Machine Learning managed feature store and Microsoft Fabric.

Imagine the following scenario:

Gary, a data scientist at Contoso Company, is working on an important project: finding out when demand for online vehicle services is highest. He relies on two main data sources: public New York City taxi trip data and private records from transportation companies. Gary's work came to a sudden stop when he couldn't access the data he needed, because Bo, the data engineer, was unavailable. The situation showed how much Gary's project depended on the team's ability to manage data internally. When Bo returned, everyone saw how much they needed a system that moves data automatically. Such a system would make work easier and help Contoso's data scientists and engineers work better together.

This scenario underscores the challenges of data accessibility and the importance of automation in streamlining data workflows.

Our Architecture

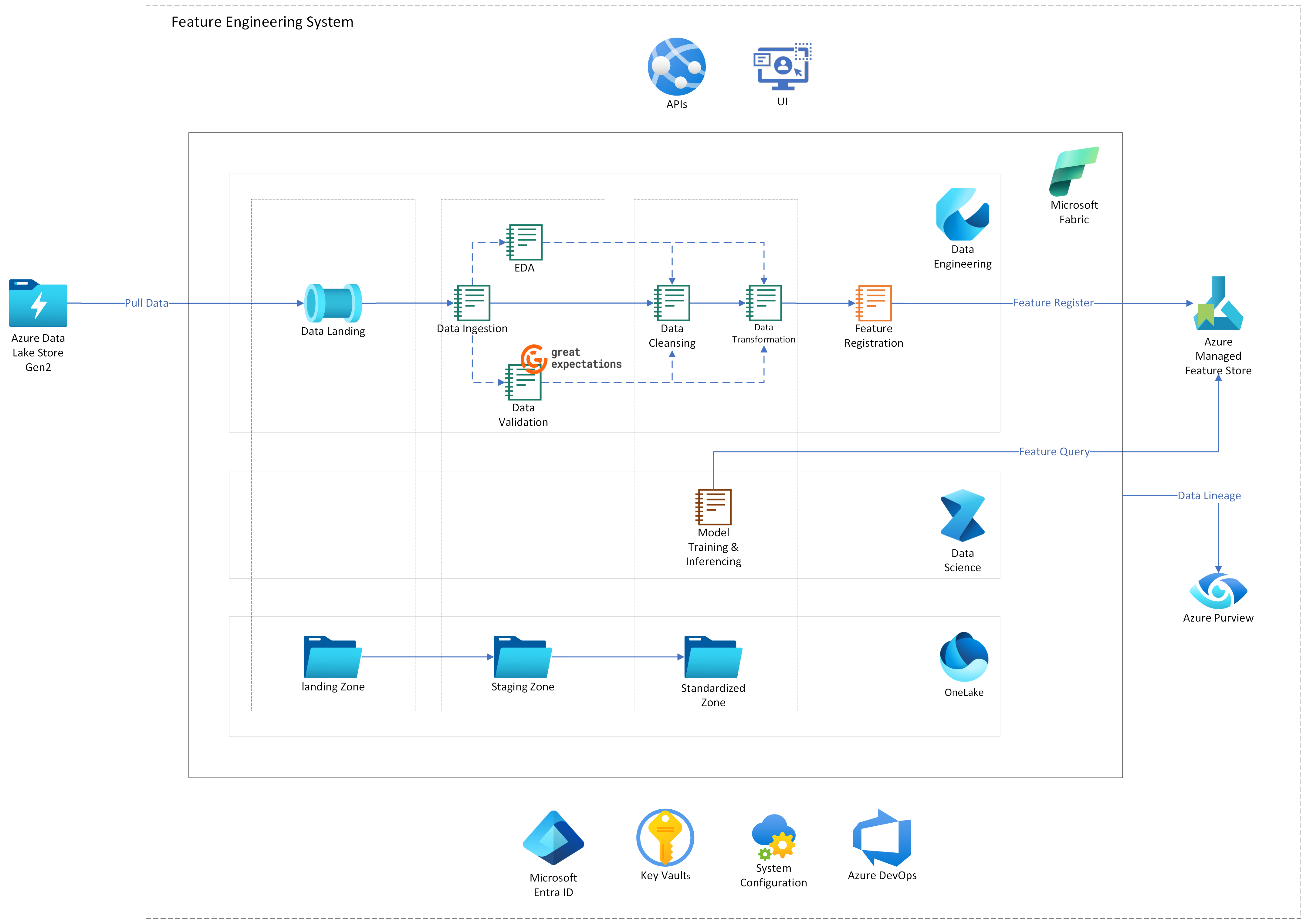

As the architecture diagram shows, a data pipeline running on Microsoft Fabric lands, ingests, and transforms the incoming data. The transformed data is registered as features in Azure Machine Learning managed feature store, where the features are ready for model training and inferencing. Meanwhile, Microsoft Purview tracks and monitors the data lineage of both the data pipeline and the features.

Data Flow

The following dataflow corresponds to the preceding diagram:

- Data landing: The data is copied from Azure Data Lake Storage Gen2 and landed in the Lakehouse landing zone.

- Data ingestion: The data is ingested from the landing zone into the staging zone.

- (Optional) EDA: Data scientists perform exploratory data analysis to summarize the data's main characteristics.

- (Optional) Data validation: The data is validated to ensure it meets the requirements for the next steps.

- Data transformation: The data is transformed into features in the standard zone and registered in Azure Machine Learning managed feature store.

- Model training and inferencing: The features are used for model training and inferencing.

- Data lineage: Microsoft Purview tracks and monitors the data pipeline and features.
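The data transformation step can be illustrated with a small sketch that turns trip records into one demand row per borough and weekday. Counting trips and min-max scaling the counts into scaled_demand are assumptions for illustration. The article names the columns but not the exact transformation logic, and the sample's notebooks may implement it differently.

```python
from collections import Counter


def build_demand_features(trips: list[dict]) -> list[dict]:
    """Aggregate trips into one row per (borough_id, weekday_pickup).

    Trip counting and min-max scaling are illustrative assumptions.
    """
    counts = Counter((t["borough_id"], t["weekday_pickup"]) for t in trips)
    if not counts:
        return []
    low, high = min(counts.values()), max(counts.values())
    span = (high - low) or 1  # avoid dividing by zero when all counts are equal
    return [
        {
            "borough_id": borough_id,
            "weekday_pickup": weekday,
            "demand": n,
            # Rescale trip counts to the [0, 1] range
            "scaled_demand": (n - low) / span,
        }
        for (borough_id, weekday), n in sorted(counts.items())
    ]


trips = [
    {"borough_id": 1, "weekday_pickup": 0},
    {"borough_id": 1, "weekday_pickup": 0},
    {"borough_id": 1, "weekday_pickup": 0},
    {"borough_id": 2, "weekday_pickup": 0},
    {"borough_id": 2, "weekday_pickup": 5},
    {"borough_id": 2, "weekday_pickup": 5},
]
for row in build_demand_features(trips):
    print(row)
```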

Components

- Azure Data Lake Storage Gen2 is used to store data.

- Microsoft Fabric orchestrates the data pipeline and stores data and models. It provides a set of integrated services that let you ingest, store, process, and analyze data in a single environment.

- The Azure Machine Learning managed feature store is used to store and manage features. It enables machine learning professionals to independently develop and discover features.

- Microsoft Purview (formerly Azure Purview) provides unified data governance to track lineage and monitor data assets.

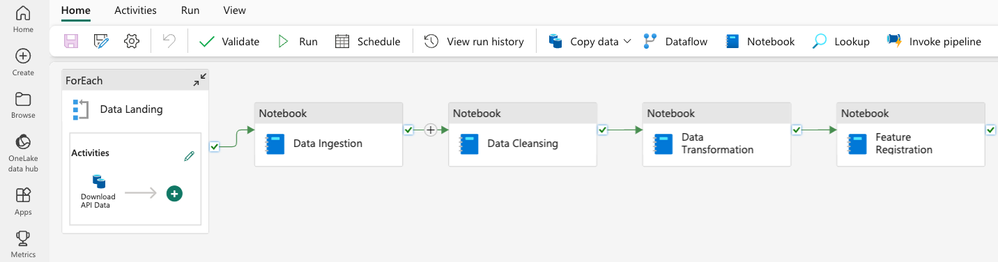

The Data Pipeline

Fabric's data pipelines help you build and manage data flows. They work with other Fabric components, such as Lakehouse and Data Warehouse, to provide a complete data solution, and they can orchestrate both data movement and data transformation, which makes them suitable for complex extract, transform, and load (ETL) scenarios.

In our sample, the pipeline consists of several activities, each responsible for a specific task such as data landing, data ingestion, data cleansing, or data transformation. The pipeline downloads data from a web application and stores it in Fabric OneLake. The data is then used to build features, which are stored in Azure Machine Learning managed feature store. Finally, the features are used to train the model and run inference.
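Conceptually, a pipeline of this kind runs its activities in order and stops when one of them fails. The sketch below mimics that behavior in plain Python. It is an analogy, not the Fabric pipeline API, and the activity functions land, ingest, and transform are hypothetical stand-ins for the real landing, ingestion, and transformation activities.

```python
from typing import Callable

Activity = tuple[str, Callable[[dict], dict]]


def run_pipeline(activities: list[Activity], context: dict) -> dict:
    """Run activities in order, passing a shared context from one to the next.

    The first failing activity stops the run, and later activities are skipped.
    """
    context = dict(context)  # don't mutate the caller's dict
    completed = []
    for name, activity in activities:
        try:
            context = activity(context)
        except Exception as exc:
            raise RuntimeError(f"activity '{name}' failed; completed: {completed}") from exc
        completed.append(name)
    context["completed"] = completed
    return context


# Hypothetical activities standing in for landing, ingestion, and transformation
def land(ctx: dict) -> dict:
    return {**ctx, "landing": ["trips_raw.csv"]}


def ingest(ctx: dict) -> dict:
    return {**ctx, "staging": [f.replace(".csv", ".parquet") for f in ctx["landing"]]}


def transform(ctx: dict) -> dict:
    return {**ctx, "standard": ["demand_features.parquet"]}


result = run_pipeline([("land", land), ("ingest", ingest), ("transform", transform)], {})
print(result["completed"], result["staging"])
```

Keeping each activity small and single-purpose is what lets a failed step be identified and rerun without repeating the whole flow.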

Data Validation

Great Expectations is a Python library that provides a framework for describing the acceptable state of data and then validating that the data meets those criteria.

We use Great Expectations for data validation. Validation logs are exported to Azure Log Analytics or Azure Monitor to generate data quality reports, and Data Docs render the Expectations in a clean, human-readable format.
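The idea behind Expectations can be shown without the library: declare checks about the data, run them, and collect a machine-readable result that could be shipped to a log sink. The sketch below is plain Python. expect_not_null and expect_between are hypothetical helpers loosely modeled on Great Expectations' expect_column_values_to_not_be_null and expect_column_values_to_be_between; they are not calls into the library.

```python
import json


def expect_not_null(rows: list[dict], column: str) -> dict:
    """Fail if any row has a missing or None value in `column`."""
    unexpected = sum(1 for r in rows if r.get(column) is None)
    return {"expectation": "not_null", "column": column,
            "success": unexpected == 0, "unexpected_count": unexpected}


def expect_between(rows: list[dict], column: str, min_value, max_value) -> dict:
    """Fail if any non-null value in `column` lies outside [min_value, max_value]."""
    unexpected = sum(
        1 for r in rows
        if r.get(column) is not None and not min_value <= r[column] <= max_value
    )
    return {"expectation": "between", "column": column,
            "success": unexpected == 0, "unexpected_count": unexpected}


def validate(rows: list[dict]) -> dict:
    """Run a fixed suite of checks and summarize the outcome."""
    results = [
        expect_not_null(rows, "borough_id"),
        expect_between(rows, "weekday_pickup", 0, 6),
    ]
    return {"success": all(r["success"] for r in results), "results": results}


batch = [
    {"borough_id": 1, "weekday_pickup": 0},
    {"borough_id": None, "weekday_pickup": 9},
]
report = validate(batch)
# A JSON line like this could be sent to a log sink for data quality reporting
print(json.dumps(report))
```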

The Feature Store

Azure Machine Learning managed feature store (MFS) streamlines machine learning development, providing a scalable, secure, and managed environment for handling features.

Features are the data inputs to your machine learning model: the attributes, characteristics, or properties of the data used in training. In MFS, a feature set is a group of related features, and it can be defined with a simple specification in YAML format.
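As a rough sketch of what such a specification captures, the Python below models a feature set with a source, a timestamp column, index (entity) columns, and typed features, and checks two simple consistency rules before emitting a dict that could be serialized to YAML. The field names are only loosely modeled on the managed feature store's feature set specification. The OneLake path, the pickup_date column, and the column types are placeholders; consult the feature store documentation for the exact schema.

```python
from dataclasses import asdict, dataclass, field


@dataclass
class Column:
    name: str
    type: str


@dataclass
class FeatureSetSpec:
    """Illustrative feature set definition (field names are assumptions)."""
    source_path: str
    timestamp_column: str
    index_columns: list[Column]
    features: list[Column] = field(default_factory=list)

    def validate(self) -> None:
        feature_names = [c.name for c in self.features]
        if len(feature_names) != len(set(feature_names)):
            raise ValueError("feature names must be unique")
        if {c.name for c in self.index_columns} & set(feature_names):
            raise ValueError("index columns must not also be listed as features")

    def to_dict(self) -> dict:
        """Return a YAML-ready structure after checking consistency."""
        self.validate()
        return {
            "source": {
                "type": "parquet",  # assumed source format
                "path": self.source_path,
                "timestamp_column": {"name": self.timestamp_column},
            },
            "index_columns": [asdict(c) for c in self.index_columns],
            "features": [asdict(c) for c in self.features],
        }


spec = FeatureSetSpec(
    # Placeholder OneLake path; replace <workspace> and <lakehouse>
    source_path="abfss://<workspace>@onelake.dfs.fabric.microsoft.com/<lakehouse>.Lakehouse/Files/standard/demand",
    timestamp_column="pickup_date",
    index_columns=[Column("borough_id", "integer")],
    features=[Column("weekday_pickup", "integer"), Column("scaled_demand", "double")],
)
print(spec.to_dict())
```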

In our example, feature set registration is integrated into the data pipeline as a single step that runs after the data transformation.

Upon registration, the feature set is stored in MFS, while the processed and transformed source data resides in Fabric OneLake, where it is easy to access and manage. The feature set details are also cataloged in Purview, which provides an organized view of the data's metadata.

Model Training and Inferencing

Model training creates a mathematical model that learns from training data and generalizes to new data. In this sample, we train the model with Support Vector Regression (SVR) from scikit-learn (sklearn). In regression analysis, the goal of model training is a model that accurately represents the relationship between the dependent variable and the independent variables. Experiments are managed with the MLflow API, which provides a structured way to track each experiment's metrics, parameters, and outcomes.
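For reference, SVR fits a function f(x) = w^T x + b by solving the standard ε-insensitive problem below. Here x_i holds the independent variables of sample i, y_i is its target (scaled_demand in this sample), C trades off model flatness against training errors, and the slack variables ξ_i and ξ_i* measure deviations beyond the ε-tube, so errors smaller than ε cost nothing. With a nonlinear kernel, x_i is implicitly replaced by a feature map φ(x_i). In scikit-learn, C, epsilon, and kernel are constructor parameters of SVR.

```latex
\min_{w,\,b,\,\xi,\,\xi^*}\ \frac{1}{2}\lVert w\rVert^2 + C\sum_{i=1}^{n}\left(\xi_i + \xi_i^*\right)
\quad\text{s.t.}\quad
\begin{cases}
y_i - w^\top x_i - b \le \varepsilon + \xi_i \\
w^\top x_i + b - y_i \le \varepsilon + \xi_i^* \\
\xi_i \ge 0,\ \xi_i^* \ge 0
\end{cases}
\qquad i = 1,\dots,n
```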

The training process prepares and analyzes the data in several steps. First, relevant feature columns, such as borough_id and weekday_pickup, are selected from the dataset. The data is then split into training and testing sets, and Support Vector Regression trains the model with scaled_demand as the dependent variable and all other columns as independent variables. MLflow tracks the training metrics, the parameters, and the final Support Vector Regression model. The finalized model is saved for tracking, which simplifies managing and comparing different model versions. The saved model can later be loaded for inferencing, where it runs on a sample dataset to demonstrate its predictive capabilities.
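The data preparation part of these steps can be sketched in plain Python: treat scaled_demand as the target and every other selected column as a feature, then hold out a seeded random test set. In practice you would likely use sklearn.model_selection.train_test_split; the hand-written split_train_test here only keeps the example dependency-free, and the sample rows are synthetic.

```python
import random

TARGET_COLUMN = "scaled_demand"


def split_features_target(rows: list[dict], target: str = TARGET_COLUMN):
    """Use `target` as the dependent variable and every other column as a feature."""
    feature_columns = [c for c in rows[0] if c != target]
    X = [[row[c] for c in feature_columns] for row in rows]
    y = [row[target] for row in rows]
    return X, y, feature_columns


def split_train_test(X, y, test_size: float = 0.2, seed: int = 42):
    """Shuffle row indices with a fixed seed, then hold out `test_size` of them."""
    if len(X) != len(y):
        raise ValueError("X and y must have the same length")
    indices = list(range(len(X)))
    random.Random(seed).shuffle(indices)
    n_test = max(1, round(len(X) * test_size))
    test_idx, train_idx = indices[:n_test], indices[n_test:]

    def take(seq, idx):
        return [seq[i] for i in idx]

    return take(X, train_idx), take(X, test_idx), take(y, train_idx), take(y, test_idx)


# Synthetic rows with the columns selected in the sample
rows = [
    {"borough_id": i % 5, "weekday_pickup": i % 7, "scaled_demand": i / 10}
    for i in range(10)
]
X, y, feature_columns = split_features_target(rows)
X_train, X_test, y_train, y_test = split_train_test(X, y)
print(feature_columns, len(X_train), len(X_test))
```

With scikit-learn installed, the training lists could then be passed to sklearn.svm.SVR().fit(X_train, y_train), and MLflow calls such as mlflow.log_param and mlflow.log_metric could record the run. The fixed seed makes the split reproducible, so different model versions are compared on the same test rows.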

Data Lineage

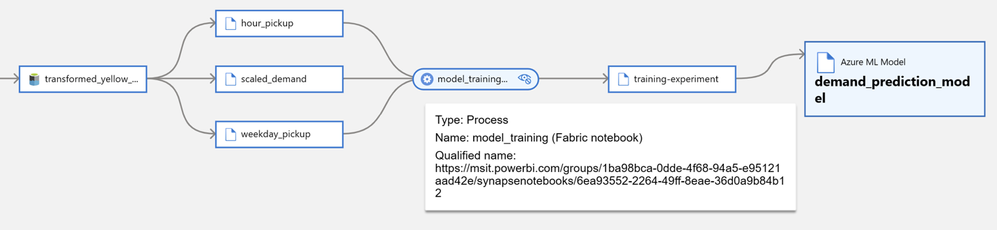

Data lineage describes the lifecycle of data, tracing its origin and movement across the data estate. Microsoft Fabric includes a built-in lineage view that helps users understand how data flows from source to destination.

For instance, in a machine learning use case, users can explore how a fraud detection model is trained and drill into the relevant nodes. While this view shows data processing at the level of Fabric artifacts (such as Lakehouses, notebooks, and experiments), more detailed insights, such as which source data a Fabric notebook processed or where the processed data is stored, require a lineage view with finer-grained data assets.

In the following sections, we outline a customized approach for registering such granular data lineage in Microsoft Purview.

Data pipeline lineage

To get a fine-grained, end-to-end lineage view of the data pipeline's processing logic, we register the processed files and tables as data assets in Purview, from the source files landed in the landing zone all the way to the transformed files saved in the standardization zone. At the end of each data processing Fabric notebook, custom logic collects the data assets and their lineage and registers them in Purview through the Purview REST APIs.
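A minimal sketch of such registration logic, assuming Purview's Apache Atlas v2 bulk entity endpoint: it builds one request body that declares the input and output data assets plus an Atlas Process entity linking them, using negative GUIDs as in-request placeholders. The asset type name azure_datalake_gen2_path, the qualified-name formats, the notebook name, and the endpoint path are assumptions to verify against the current Purview REST reference. Authentication and the actual HTTP POST are omitted.

```python
import json

# Assumed Atlas v2 bulk endpoint on a Purview account; verify against current docs
BULK_ENTITY_URL = "https://<purview-account>.purview.azure.com/catalog/api/atlas/v2/entity/bulk"


def build_lineage_payload(process_name: str, inputs: list[str], outputs: list[str],
                          asset_type: str = "azure_datalake_gen2_path") -> dict:
    """Build an Atlas bulk-entity body: data assets plus one Process linking them.

    Negative GUIDs are placeholders that let entities in the same request
    reference each other before the service assigns real GUIDs.
    """
    entities, refs = [], {}
    for qualified_name in dict.fromkeys([*inputs, *outputs]):  # dedupe, keep order
        guid = f"-{len(entities) + 1}"
        refs[qualified_name] = {"guid": guid, "typeName": asset_type}
        entities.append({
            "guid": guid,
            "typeName": asset_type,
            "attributes": {
                "qualifiedName": qualified_name,
                "name": qualified_name.rstrip("/").rsplit("/", 1)[-1],
            },
        })
    entities.append({
        "guid": f"-{len(entities) + 1}",
        "typeName": "Process",
        "attributes": {
            "qualifiedName": f"fabric-notebook://{process_name}",  # hypothetical scheme
            "name": process_name,
            "inputs": [refs[q] for q in inputs],
            "outputs": [refs[q] for q in outputs],
        },
    })
    return {"entities": entities}


payload = build_lineage_payload(
    "transform_demand",  # hypothetical notebook name
    inputs=["https://<account>.dfs.core.windows.net/lake/staging/trips.parquet"],
    outputs=["https://<account>.dfs.core.windows.net/lake/standard/demand.parquet"],
)
# The body would be POSTed to BULK_ENTITY_URL with a Microsoft Entra ID bearer token
print(json.dumps(payload, indent=2))
```

Emitting the assets and the Process in one body means each notebook registers its whole processing step at once, which keeps the lineage graph consistent with what actually ran.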

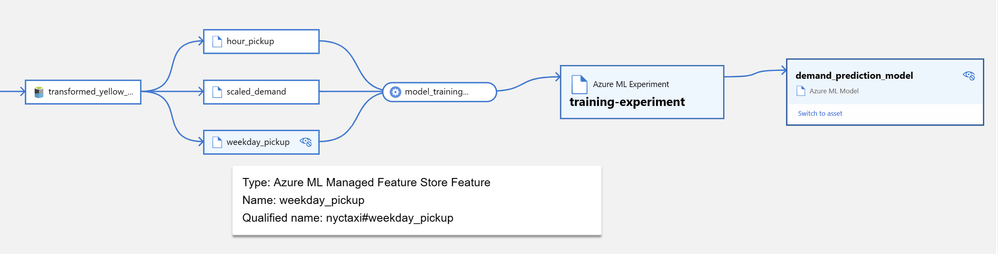

Features lineage

After the user-defined features are registered in Azure Machine Learning managed feature store, similar custom logic can register fine-grained feature lineage in Purview.

In the features lineage view, look at the feature assets in the second column. They show that all features originate from the transformed data produced by the data pipeline's transformation stage. End users can trace the lineage path further backward to better understand the data processing lifecycle.
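Tracing lineage backward is a graph traversal. The sketch below stores lineage as a mapping from each asset to the assets it was produced from and walks it breadth-first, so the nearest upstream assets come first. The asset names are hypothetical and mirror the zones described earlier.

```python
from collections import deque


def upstream(lineage: dict[str, list[str]], asset: str) -> list[str]:
    """Return every asset that `asset` was derived from, nearest first.

    `lineage` maps each asset to the assets it was produced from.
    Breadth-first order lists direct parents before more distant ancestors;
    the `seen` set keeps shared ancestors from appearing twice.
    """
    seen = {asset}
    order = []
    queue = deque([asset])
    while queue:
        current = queue.popleft()
        for parent in lineage.get(current, []):
            if parent not in seen:
                seen.add(parent)
                order.append(parent)
                queue.append(parent)
    return order


lineage = {
    "model:svr_demand": ["feature:weekday_pickup", "feature:scaled_demand"],
    "feature:weekday_pickup": ["standard/demand_features"],
    "feature:scaled_demand": ["standard/demand_features"],
    "standard/demand_features": ["staging/trips"],
    "staging/trips": ["landing/trips_raw"],
}
print(upstream(lineage, "feature:scaled_demand"))
# ['standard/demand_features', 'staging/trips', 'landing/trips_raw']
```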

Machine Learning Model Lineage

The machine learning model lineage view plays a crucial role in data lineage. It shows which features were used to train a machine learning model, identifies the notebook that contains the source code, locates the training experiment run details, and displays performance metrics.

Potential Use Cases

- A video clip recommendation model relies on user profile features, and a dedicated team updates all user profiles, typically daily. To perform well, the model must be trained on the most recent feature values that correspond to each training label. A feature engineering system is especially beneficial when the model uses many similar features.

- Consider the same user profile features. They likely serve not only the video clip recommendation model but also various other models. Teams that build these models can share common features, which can yield significant computational cost savings and reduce training time.
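The first use case depends on pairing each training label with the feature value that was current at the label's timestamp, often called a point-in-time join. Using a later value would leak future information into training. Feature stores typically handle this for you. The sketch below shows only the core lookup in plain Python, with hypothetical user IDs, dates, and feature values.

```python
from bisect import bisect_right
from datetime import date


def value_as_of(history: list[tuple[date, float]], as_of: date):
    """Latest value whose timestamp is <= as_of; None if nothing existed yet.

    `history` must be sorted by timestamp in ascending order.
    """
    timestamps = [ts for ts, _ in history]
    i = bisect_right(timestamps, as_of)
    return history[i - 1][1] if i else None


def point_in_time_join(labels, feature_history):
    """Attach the feature value current at each label's timestamp."""
    return [
        {"user_id": user_id, "timestamp": ts, "label": label,
         "feature": value_as_of(feature_history.get(user_id, []), ts)}
        for user_id, ts, label in labels
    ]


# Hypothetical daily profile feature values per user
feature_history = {
    "u1": [(date(2024, 5, 1), 10.0), (date(2024, 5, 2), 12.0), (date(2024, 5, 3), 15.0)],
}
labels = [
    ("u1", date(2024, 5, 2), 1),   # uses the 2024-05-02 value, not the later 15.0
    ("u1", date(2024, 4, 30), 0),  # no profile existed yet, so the feature is None
    ("u2", date(2024, 5, 2), 1),   # unknown user, so the feature is None
]
for row in point_in_time_join(labels, feature_history):
    print(row)
```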

Related Resources

Deploy the implementation from the following link:

Next Steps

- What is feature engineering

- Announcing Managed Feature Store in Azure Machine Learning

- Microsoft Fabric Overview

- Data Science documentation in Microsoft Fabric
