A quick start guide to benchmarking AI models in Azure: MLPerf Inferencing v2.0

This blog was authored by Aimee Garcia, Program Manager - AI Benchmarking. Additional contributions by Program Manager Daramfon Akpan, Program Manager Gaurav Uppal, Program Manager Hugo Affaticati.

Microsoft Azure’s publicly available AI inferencing capabilities are led by the NDm A100 v4, ND A100 v4 and NC A100 v4 virtual machines (VMs), powered by the latest NVIDIA A100 Tensor Core GPUs. The MLPerf Inferencing v2.0 results below showcase Azure’s commitment to making AI inferencing accessible to all researchers and users while raising the bar for AI inferencing in Azure. The announcement is also available on Azure.com.

Highlights from the results

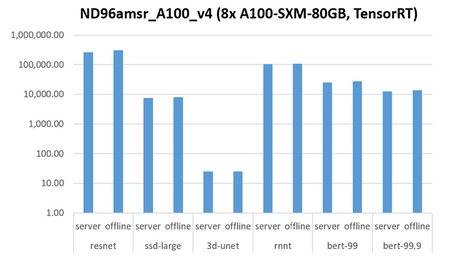

ND96amsr A100 v4 powered by NVIDIA A100 80G SXM Tensor Core GPU

| Benchmark | Samples/second | Queries/second | Scenarios |
|---|---|---|---|
| bert-99 | 27.5K+ | ~22.5K | Offline and server |
| resnet | 300K+ | ~200K+ | Offline and server |
| 3d-unet | 24.87 | | Offline |

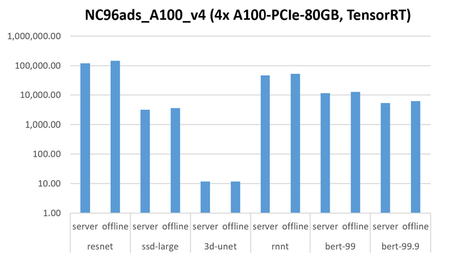

NC96ads A100 v4 powered by NVIDIA A100 80G PCIe Tensor Core GPU

| Benchmark | Samples/second | Queries/second | Scenarios |
|---|---|---|---|
| bert-99.9 | ~6.3K | ~5.3K | Offline and server |
| resnet | 144K | ~119.6K | Offline and server |
| 3d-unet | 11.7 | | Offline |

The results were generated by deploying the environment using the VM offerings and Azure’s Ubuntu 18.04-HPC marketplace image.

Steps to reproduce the results in Azure

Set up and connect to a VM via SSH - decide which VM you want to benchmark

- Image: Ubuntu 18.04-HPC marketplace image

- Availability: Depending on client need (e.g., no redundancy)

- Region: Depending on client need (e.g., South Central US)
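
The VM choices above can be captured in a small helper that prints an Azure CLI command for review before anything is created. This is only a sketch: the size names, the image URN `microsoft-dsvm:ubuntu-hpc:1804:latest`, and the resource group, VM name and admin user are assumptions that do not appear in this post, so verify them against your own subscription before use.

```shell
#!/bin/bash
# Sketch only: prints (does not run) an `az vm create` command.
# The image URN, size names and resource names are assumptions, not taken from this post.
IMAGE_URN="microsoft-dsvm:ubuntu-hpc:1804:latest"

# Map the VM series named in this post to an assumed Azure size name.
vm_size_for() {
    case "$1" in
        NDm) echo "Standard_ND96amsr_A100_v4" ;;
        NC)  echo "Standard_NC96ads_A100_v4" ;;
        ND)  echo "Standard_ND96asr_v4" ;;
        *)   echo "unknown VM series: $1" >&2; return 1 ;;
    esac
}

# Print the command for a given series ($1) and region ($2).
print_vm_create() {
    local size
    size="$(vm_size_for "$1")" || return 1
    echo "az vm create --resource-group mlperf-rg --name mlperf-vm" \
         "--image $IMAGE_URN --size $size --location $2" \
         "--admin-username azureuser --generate-ssh-keys"
}

print_vm_create NDm southcentralus
```

Printing the command instead of running it keeps the sketch safe to execute anywhere. Copy the output into a shell that is logged in to Azure once the values look right.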

Set up the dependencies

- Verify the NVIDIA driver version:

cd /mnt

nvidia-smi

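- If the driver version is lower than 510, install CUDA Toolkit 11.6. First download the local installer package (cuda-repo-ubuntu1804-11-6-local_11.6.1-510.47.03-1_amd64.deb) from NVIDIA's CUDA Toolkit 11.6 Downloads page, since the dpkg step below expects it in the current directory, then run: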

sudo wget https://developer.download.nvidia.com/compute/cuda/repos/ubuntu1804/x86_64/cuda-ubuntu1804.pin

sudo mv cuda-ubuntu1804.pin /etc/apt/preferences.d/cuda-repository-pin-600

sudo dpkg -i cuda-repo-ubuntu1804-11-6-local_11.6.1-510.47.03-1_amd64.deb

sudo apt-key add /var/cuda-repo-ubuntu1804-11-6-local/7fa2af80.pub

sudo apt-get update

sudo apt-get -y install cuda
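
The driver check that gates the CUDA installation above can be scripted instead of read off the `nvidia-smi` banner. The sketch below is one possible way to do it, assuming `nvidia-smi --query-gpu=driver_version --format=csv,noheader` is available on the VM. The function name `driver_needs_update` is hypothetical.

```shell
#!/bin/bash
# Minimum driver major version required by this guide.
MIN_DRIVER_MAJOR=510

# Succeeds when the given driver version string is older than the minimum.
driver_needs_update() {
    local version="$1"
    local major="${version%%.*}"   # "470.82.01" -> "470"
    [ "$major" -lt "$MIN_DRIVER_MAJOR" ]
}

# Only query the GPU when nvidia-smi is installed.
if command -v nvidia-smi >/dev/null 2>&1; then
    current="$(nvidia-smi --query-gpu=driver_version --format=csv,noheader 2>/dev/null | head -n 1)"
    if [ -z "$current" ]; then
        echo "Could not read the driver version"
    elif driver_needs_update "$current"; then
        echo "Driver $current is older than $MIN_DRIVER_MAJOR: install CUDA Toolkit 11.6"
    else
        echo "Driver $current is recent enough"
    fi
fi
```

Only the major version is compared, which matches the "less than 510" rule in this guide.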

- Update docker to the latest version:

sudo dpkg -P moby-cli

curl https://get.docker.com | sh && sudo systemctl --now enable docker

sudo chmod 777 /var/run/docker.sock

- To check the docker version run:

docker info

You should have version 20.10.12 or newer.

- Reboot the VM, reconnect via SSH, and verify the NVIDIA driver version again:

sudo reboot

nvidia-smi
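
The Docker version requirement above (20.10.12 or newer) can also be checked automatically. This is a minimal sketch that relies on GNU `sort -V` for version ordering and on `docker version --format '{{.Server.Version}}'`. The helper name `version_ge` is hypothetical.

```shell
#!/bin/bash
# Minimum Docker version named in this guide.
REQUIRED_DOCKER="20.10.12"

# Succeeds when version $1 is greater than or equal to version $2.
# Uses GNU `sort -V` (version sort), which is available on Ubuntu.
version_ge() {
    [ "$(printf '%s\n%s\n' "$1" "$2" | sort -V | head -n 1)" = "$2" ]
}

# Only query Docker when the client is installed.
if command -v docker >/dev/null 2>&1; then
    installed="$(docker version --format '{{.Server.Version}}' 2>/dev/null)"
    if [ -z "$installed" ]; then
        echo "Could not query the Docker daemon"
    elif version_ge "$installed" "$REQUIRED_DOCKER"; then
        echo "Docker $installed meets the $REQUIRED_DOCKER requirement"
    else
        echo "Docker $installed is older than $REQUIRED_DOCKER"
    fi
fi
```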

- Create the script that will mount the NVMe disks:

cd /mnt

sudo touch nvme.sh

sudo vi nvme.sh

- Copy the following into the file. It combines the NVMe disks into a RAID 0 array and mounts the array at /mnt/resource_nvme:

#!/bin/bash

NVME_DISKS_NAME=`ls /dev/nvme*n1`

NVME_DISKS=`ls -latr /dev/nvme*n1 | wc -l`

echo "Number of NVMe Disks: $NVME_DISKS"

if [ "$NVME_DISKS" == "0" ]

then

exit 0

else

mkdir -p /mnt/resource_nvme

# Needed in case something did not unmount as expected. This will delete any data that may be left behind

mdadm --stop /dev/md*

mdadm --create /dev/md128 -f --run --level 0 --raid-devices $NVME_DISKS $NVME_DISKS_NAME

mkfs.xfs -f /dev/md128

mount /dev/md128 /mnt/resource_nvme

fi

chmod 1777 /mnt/resource_nvme

- Run the script with bash, since it uses a bash shebang and bash-style tests:

sudo bash nvme.sh
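
Because the RAID script reformats the disks, it can help to preview the `mdadm` command before running it. The sketch below only prints the command that the script would build from a list of devices and does not touch any disk. The function name `build_mdadm_cmd` is hypothetical.

```shell
#!/bin/bash
# Dry run: print the mdadm command the RAID script would execute for the
# given NVMe devices, without creating or formatting anything.
build_mdadm_cmd() {
    if [ "$#" -eq 0 ]; then
        echo "no NVMe disks given" >&2
        return 1
    fi
    # $# is the number of devices, $* is the space-separated device list.
    echo "mdadm --create /dev/md128 -f --run --level 0 --raid-devices $# $*"
}

# Let the shell expand the device glob. When nothing matches, the pattern
# stays literal, so check that the first entry really exists.
set -- /dev/nvme*n1
if [ -e "$1" ]; then
    build_mdadm_cmd "$@"
else
    echo "No NVMe disks detected"
fi
```

After the real script has run, `mountpoint /mnt/resource_nvme` is a quick way to confirm that the array is mounted.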

- Update Docker root directory in the docker daemon config file:

sudo vi /etc/docker/daemon.json

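Add this line after the first curly bracket (drop the trailing comma if it ends up being the only entry in the file, because JSON does not allow a trailing comma):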

"data-root": "/mnt/resource_nvme/data",

- Run the following:

sudo systemctl restart docker

cd /mnt/resource_nvme
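
To confirm that the data-root change took effect, the configuration file and the running daemon can both be inspected. This is a sketch, not a full validation: `has_data_root` is a hypothetical helper that does a plain text match rather than parsing JSON, and `docker info --format '{{.DockerRootDir}}'` is used to ask the daemon for its active root directory.

```shell
#!/bin/bash
# Root directory configured in this guide.
EXPECTED_ROOT="/mnt/resource_nvme/data"

# Succeeds when the given daemon.json contains the expected data-root entry.
# This is a text match only; it does not check that the file is valid JSON.
has_data_root() {
    grep -q "\"data-root\"[[:space:]]*:[[:space:]]*\"$EXPECTED_ROOT\"" "$1"
}

# Check the config file if it exists on this machine.
if [ -f /etc/docker/daemon.json ]; then
    if has_data_root /etc/docker/daemon.json; then
        echo "daemon.json points to $EXPECTED_ROOT"
    else
        echo "daemon.json does not set data-root to $EXPECTED_ROOT"
    fi
fi

# After the restart, ask the daemon which root directory it actually uses.
if command -v docker >/dev/null 2>&1; then
    active="$(docker info --format '{{.DockerRootDir}}' 2>/dev/null)"
    echo "Docker root directory: ${active:-unknown}"
fi
```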

- Now that your environment is set up, get the repository from the MLCommons GitHub and run the benchmarks:

- When setting up the scratch path, the path should be /mnt/resource_nvme/scratch

export MLPERF_SCRATCH_PATH=/mnt/resource_nvme/scratch

- Run benchmarks by following the steps in the README.md file in the working directory. To open the file:

vi README.md
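
The repository and scratch-path steps above might look like the following on the VM. Several details here are assumptions that this post does not state: the repository URL (`mlcommons/inference_results_v2.0`), the `closed/Azure` subdirectory, and the three scratch subdirectories. Treat the README.md in the repository as the authoritative source. The helper names are hypothetical.

```shell
#!/bin/bash
# Assumptions (not stated in this post): the repository URL, the closed/Azure
# subdirectory, and the data/models/preprocessed_data scratch layout.
RESULTS_REPO="https://github.com/mlcommons/inference_results_v2.0.git"

# Use the scratch path from this guide unless one is already set.
: "${MLPERF_SCRATCH_PATH:=/mnt/resource_nvme/scratch}"
export MLPERF_SCRATCH_PATH

# Create the assumed scratch layout under the given directory.
prepare_scratch() {
    mkdir -p "$1/data" "$1/models" "$1/preprocessed_data"
}

# Clone the results repository into the given parent directory.
# Needs git and network access, so it is not called automatically.
fetch_results_repo() {
    git clone "$RESULTS_REPO" "$1/inference_results_v2.0"
}

# Typical use on the VM:
#   prepare_scratch "$MLPERF_SCRATCH_PATH"
#   fetch_results_repo /mnt/resource_nvme
#   cd /mnt/resource_nvme/inference_results_v2.0/closed/Azure
```

Keeping both the repository and the scratch directory under /mnt/resource_nvme places the large datasets and models on the RAID array rather than on the OS disk.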

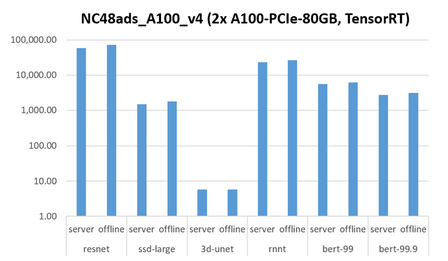

Below are graphs showing the achieved results for the NDm A100 v4, NC A100 v4 and ND A100 v4 VMs. The units are in throughput/second (samples and queries).

More about MLPerf

To learn more about MLCommons benchmarks, visit the MLCommons website.
