Demystifying Azure OpenAI Networking for Secure Chatbot Deployment

Introduction

Azure AI Landing Zones provide a solid foundation for deploying advanced AI technologies such as OpenAI's GPT-4 models. These environments are designed to host AI workloads, but to use them well you need to understand their networking, especially how Platform as a Service (PaaS) offerings connect to each other and to your network.

In this article, we'll dive into the networking details of OpenAI Landing Zones, focusing on how PaaS services are configured for networking, how they handle inbound and outbound connectivity, and how their DNS resolution works. The knowledge you gain here applies not only to Azure OpenAI but also to other PaaS and AI services within Azure.

But there's more! We'll walk you through a practical scenario involving the creation of an OpenAI chat web application that lets users interact with the AI. To make it even better, we've provided a link to a GitHub repository where you can deploy this application yourself. It's a hands-on opportunity to put what you've learned into practice, transforming concepts into real AI-powered applications.

Whether you're new to AI networking or looking to expand your knowledge, join us on this informative journey through Azure AI Landing Zones, PaaS networking, and the world of practical AI deployment.

PaaS Services Networking in Azure

PaaS services in Azure are a powerful way to host your applications without the overhead of managing infrastructure. However, understanding how PaaS services handle networking is essential for building secure and efficient solutions. Let's delve deeper into the networking aspects of PaaS services.

Most multi-tenant PaaS services in Azure do not have a private IP address in your network by default. They get one only when you configure a private endpoint, which places a network interface with a private IP address from your Azure Virtual Network (VNet) in front of the service. Resources in that VNet (and in networks peered or connected to it), such as virtual machines or other PaaS services, can then reach the service over that private IP address.

Without a private endpoint, clients reach the PaaS service through its public endpoint, which you can restrict with IP firewall rules or virtual network service endpoints. The public endpoint lets external clients and users access the service over the internet. VNet integration is a separate, outbound feature: it lets a service such as App Service reach resources inside a VNet, such as databases or virtual machines, over a private connection.

To summarize, PaaS services in Azure can have both public IP addresses and private IP addresses, but the private IP address is only available if the service is configured with a private endpoint. The private IP address enables communication within the VNet, while the public IP address allows external access over the internet.

Inbound and Outbound Connectivity

Inbound and outbound connectivity are essential aspects of PaaS services' networking. Let's break down how these aspects work in the context of Azure.

Inbound Connectivity:

Inbound connectivity refers to the ability of external clients or users to access a PaaS service. This can be achieved through various means, including public IP addresses or private endpoints.

- If a PaaS service has a public IP address, it can be accessed directly over the internet by external clients.

- If a PaaS service is configured with a private endpoint, inbound connectivity is achieved by mapping the service to a private IP address within a VNet. This allows clients within that VNet, or in networks peered or connected to it, to securely access the PaaS service without going through the public internet.

Outbound Connectivity:

Outbound connectivity refers to the ability of the PaaS service to initiate outbound connections to external resources, such as databases or APIs.

- By default, PaaS services have outbound connectivity enabled, allowing them to communicate with external resources over the internet.

- Configuring a private endpoint does not give the PaaS service a private outbound IP address.

- The private endpoint only handles inbound connectivity, providing a private IP address for secure communication within the VNet. Unless outbound traffic is routed through a VNet (for example, with App Service VNet integration), the service still uses its public outbound IP addresses to communicate with external resources.

In summary, a private endpoint for a PaaS service enhances inbound connectivity by providing a private IP address within a VNet. However, it does not automatically provide a private outbound IP address. Outbound connectivity still relies on the public IP address of the PaaS service.
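To make the inbound/outbound asymmetry concrete, here is a toy model in Python. It is purely illustrative: PaasService and its fields are hypothetical names, not an Azure API, and it assumes DNS is already set up so that VNet clients resolve the service to its private endpoint.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class PaasService:
    """Toy model of one PaaS resource's addresses (illustrative, not an Azure API)."""
    public_ip: str                                         # public endpoint address
    outbound_ips: List[str] = field(default_factory=list)  # addresses used for outbound calls
    private_endpoint_ip: Optional[str] = None              # set only if a private endpoint exists

    def inbound_address(self, client_in_vnet: bool) -> str:
        """Address a client connects to (assumes private endpoint DNS is configured)."""
        if client_in_vnet and self.private_endpoint_ip is not None:
            return self.private_endpoint_ip
        return self.public_ip

    def outbound_source_ips(self) -> List[str]:
        """Source addresses for outbound calls; a private endpoint does not change them."""
        return list(self.outbound_ips)
```

For example, setting private_endpoint_ip="10.0.1.4" changes what inbound_address(True) returns, while outbound_source_ips() stays exactly the same.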

Understanding Azure Network Traffic

In Azure, the flow of network traffic between Platform as a Service (PaaS) services can take different paths, depending on your network configuration and architecture. It's crucial to comprehend when traffic stays within the Azure network backbone and when it traverses to the public internet. Here's a breakdown:

Traffic Staying Within Azure's Network Backbone

- Within the Same Azure Region: When PaaS services are located within the same Azure region, and no specific network configurations divert traffic outside of that region, the traffic usually remains within the Azure network backbone. Azure's extensive and well-connected network backbone ensures optimal performance and security.

- Virtual Network (VNet) Integration: Integration of PaaS services with an Azure Virtual Network (VNet) enables communication entirely within Azure's network backbone. VNets create isolated network spaces within Azure, allowing PaaS services connected to the same VNet to communicate using private IP addresses without crossing the public internet.

- Private Endpoints: Azure offers Private Endpoints for many PaaS services. When you configure PaaS services to use Private Endpoints, traffic between these services and other resources, like virtual machines or databases, can stay within the Azure network backbone. Private Endpoints provide private IP addresses within your VNet for secure and private communication.

- VNet Peering: Regional VNet peering connects virtual networks in the same Azure region, and global VNet peering connects virtual networks in different regions. When resources in peered VNets communicate, for example with PaaS services reached through private endpoints, the traffic uses private IP addresses and travels over the Azure backbone, keeping latency low and avoiding the public internet.

- Use of Azure Front Door or Azure Application Gateway: Azure Front Door and Azure Application Gateway act as reverse proxies and load balancers for web applications. When traffic flows through these services, they route it to your PaaS backends, and when those backends run in Azure, the leg from the gateway to the backend stays on Azure's network backbone. They also provide security features like Web Application Firewall (WAF) protection.

Internet-Bound Traffic

- Internet-Bound Traffic: Sometimes, PaaS services need to communicate with resources external to Azure, such as external APIs, services, or the public internet. In such cases, the traffic exits the Azure network backbone and travels over the public internet to reach its destination.

- Network Security Group (NSG) and Firewall Rules: Network Security Groups filter traffic to and from resources in a VNet, and Azure Firewall (typically combined with user-defined routes that send traffic through it) inspects and controls traffic entering and leaving your network. Together, their configurations determine whether traffic is allowed to reach external destinations.

Does My Traffic Go to the Public Internet?

It's essential to understand that even if a resource in Azure has a public IP address for outbound traffic, it doesn't necessarily mean that the traffic will exit the Azure network backbone. Azure's network architecture is designed for intelligent traffic routing. Here's how it works:

- Azure's Internet Egress Points: Microsoft's global network connects to the public internet at edge points of presence (PoPs) around the world. These egress points are where outbound traffic from Azure resources, such as virtual machines and PaaS services, leaves Microsoft's network when it needs to reach the public internet.

- Traffic Optimization: By default, Azure keeps traffic on Microsoft's global network for as long as possible. When a resource in Azure initiates an outbound connection to a destination on the public internet, the traffic travels across Microsoft's backbone and is handed off to the internet at an edge point of presence, typically one close to the destination.

- Egress Points vs. Data Centers: It's crucial to note that these internet egress points are separate from Azure data centers. While data centers host Azure resources, egress points are specialized network infrastructure designed for efficiently routing traffic to the internet.

- Public IPs and Network Address Translation (NAT): Azure uses source network address translation (SNAT) to manage outbound traffic. A resource with its own public IP address, or one behind a NAT Gateway, sends traffic from that dedicated address. Resources without one, such as multi-tenant App Service apps, share public IP addresses that Microsoft manages, so the source address a destination sees is not unique to your resource. Sharing addresses this way conserves public IPv4 space.

In summary, Azure's network architecture is designed to intelligently route traffic based on destination and optimize performance. Even if a resource in Azure has a public IP address for outbound traffic, Azure strives to keep the traffic within its network backbone for as long as possible, ensuring efficient and secure communication. However, when traffic needs to reach external destinations on the public internet, it will exit Azure's network through designated egress points. This design balances performance, security, and efficiency in handling outbound traffic from Azure resources.
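The idea behind SNAT can be shown with a toy translation table: many private (IP, port) flows leave through one public IP, each getting its own public port, and running out of ports is the "SNAT port exhaustion" problem you may see on busy App Service apps. This is a teaching model only. ToySnat is a hypothetical class, it keys flows on the source alone (real SNAT also uses the destination, which lets ports be reused), and it is not how Azure's NAT Gateway or load balancers allocate ports internally.

```python
from typing import Dict, Tuple

Flow = Tuple[str, int]  # (IP address, port)


class ToySnat:
    """Illustrative source-NAT table: many private flows share one public IP."""

    def __init__(self, public_ip: str, first_port: int = 1024, last_port: int = 65535):
        self.public_ip = public_ip
        self._next_port = first_port
        self._last_port = last_port
        self._table: Dict[Flow, Flow] = {}

    def translate(self, private_ip: str, private_port: int) -> Flow:
        """Return the public (IP, port) used for this private flow."""
        key = (private_ip, private_port)
        if key not in self._table:  # new flow: allocate the next free public port
            if self._next_port > self._last_port:
                raise RuntimeError("SNAT port exhaustion")
            self._table[key] = (self.public_ip, self._next_port)
            self._next_port += 1
        return self._table[key]  # existing flow: reuse its mapping
```

Two different private hosts both appear to the destination as 203.0.113.50 (or whatever the shared address is), distinguished only by port, which is why IP allow-lists on the receiving side cannot tell them apart.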

OpenAI Landing Zones and Chatbot Scenarios

Now that we have a better understanding of PaaS services' networking, let's apply this knowledge to OpenAI Landing Zones, specifically in the context of deploying a chatbot web application.

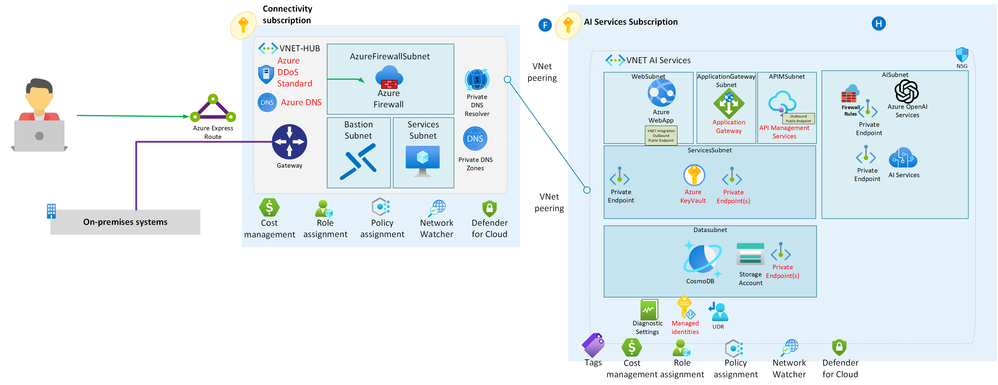

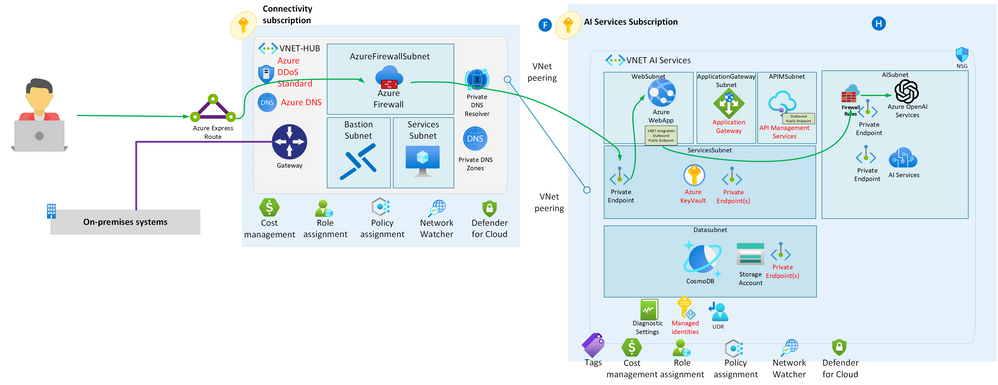

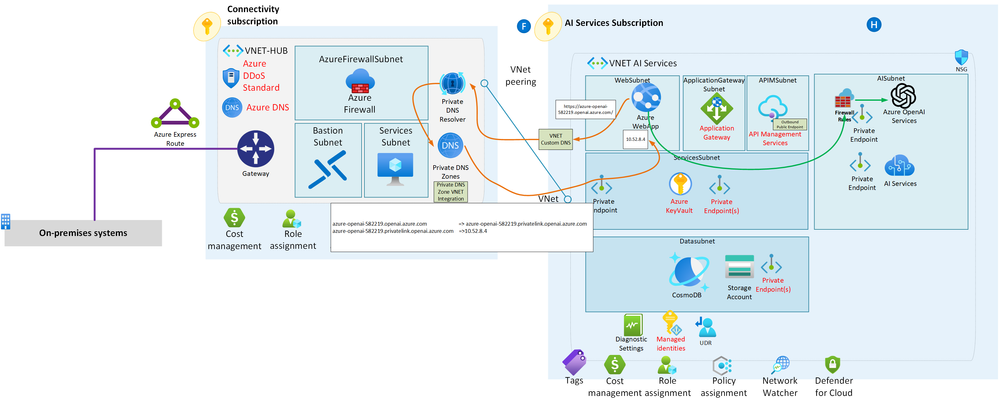

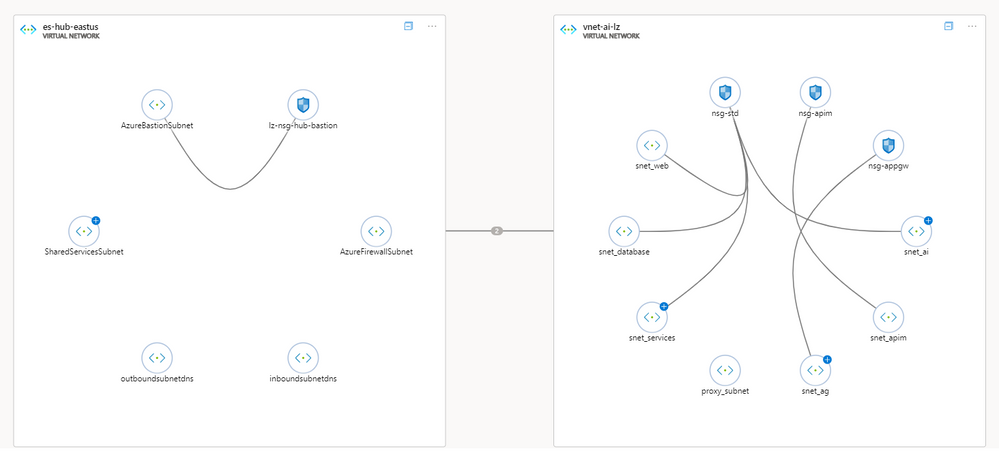

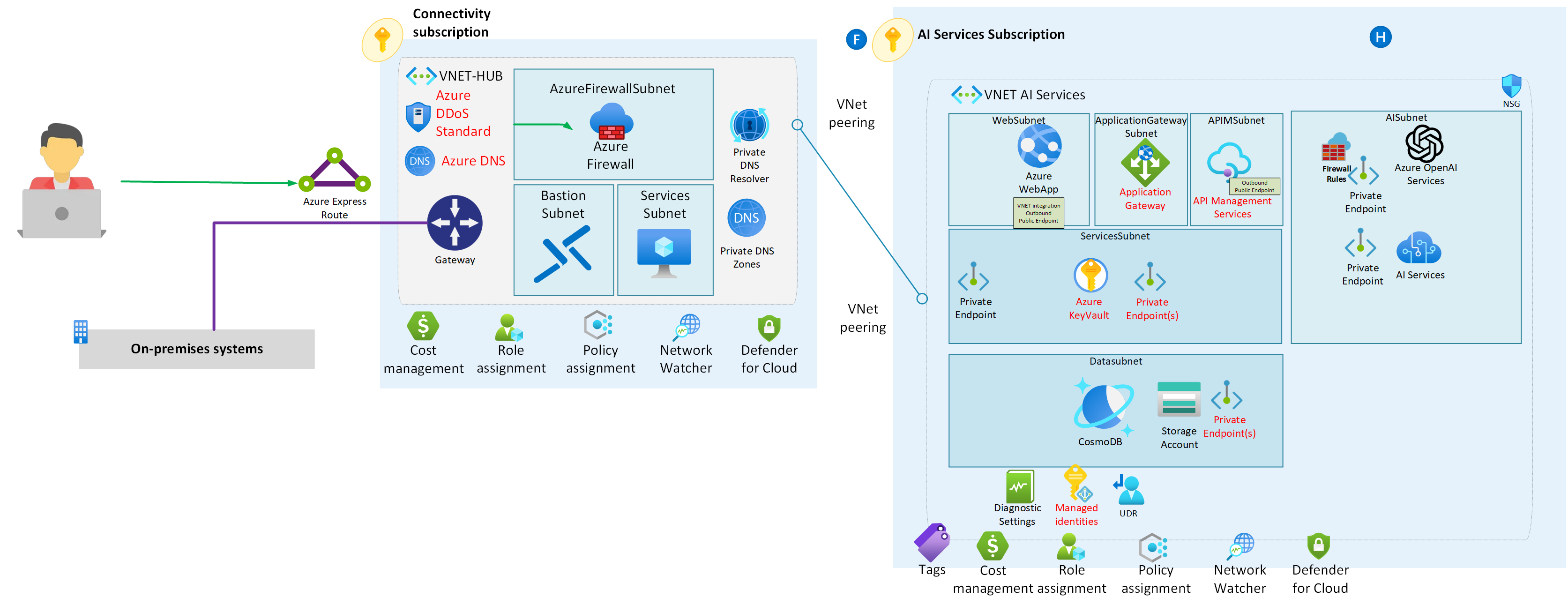

Scenario 1: Secure Communication to Azure App Service via Private Link

In this scenario, we're building a secure and structured Azure environment designed to ensure the utmost protection for your resources.

Let's break down the key components of this setup:

- Azure Landing Zone: This serves as the foundation of our Azure environment. Think of it as a meticulously organized framework that ensures every aspect of your cloud deployment follows best practices in terms of security, compliance, and efficiency. It's like having a well-structured blueprint for your cloud infrastructure.

- Jumpbox or Bastion Host: To access and manage our resources securely, we employ a jumpbox or bastion host. These are like gatekeepers, allowing authorized users to enter the Azure environment while keeping unauthorized access at bay. It's akin to having a secure entrance to a restricted area.

- Azure App Service: Within our environment, we have an Azure App Service. This is where we host a web application that requires a secure connection to Azure OpenAI. Imagine it as the platform where your web application resides, like a dedicated workspace for your digital application.

- Private Link: To ensure that our communication remains private and secure, we utilize Private Link. This feature allows us to establish a direct and private connection between our Azure App Service and Azure OpenAI. Think of it as having a secret tunnel that only authorized entities can use, keeping your data and interactions confidential.

- Firewall Rules: In Azure OpenAI, we configure firewall rules that permit traffic to its public endpoint only from the outbound IP addresses of our Azure App Service, which can change over time. This configuration ensures that only the designated service can access Azure OpenAI, enhancing security by allowing only trusted communication.

This entire architectural setup is dedicated to maximizing security, maintaining isolation, and controlling communication in a well-organized manner. It's like building a fortified castle with guarded entrances, secret passages, and strict rules to safeguard your valuable digital assets.
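An IP firewall like the one on Azure OpenAI boils down to "allow if the caller's address matches any rule, otherwise apply the default action". The sketch below mimics that logic with Python's ipaddress module. The parameter names loosely mirror the defaultAction and ipRules settings of the resource's network ACLs, as we understand them, and the addresses are documentation examples; as far as we know, these rules apply to traffic reaching the public endpoint, not to traffic arriving through the private endpoint.

```python
import ipaddress
from typing import Iterable


def is_allowed(client_ip: str, ip_rules: Iterable[str], default_action: str = "Deny") -> bool:
    """Allow if any rule matches the client address, otherwise use the default action."""
    addr = ipaddress.ip_address(client_ip)
    for rule in ip_rules:
        # strict=False accepts both single addresses ("198.51.100.7", treated as /32)
        # and CIDR ranges ("203.0.113.0/28").
        if addr in ipaddress.ip_network(rule, strict=False):
            return True
    return default_action == "Allow"
```

With defaultAction set to Deny and the App Service's outbound addresses listed as rules, any other caller on the internet is rejected before authentication is even attempted.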

Let's look at how this scenario represents a standard approach to securing Azure OpenAI by using the network as a perimeter. We'll then introduce the concept of an identity perimeter, built on Zero Trust and managed identities, for an even more comprehensive security strategy.

Standard Networking Perimeter for Azure OpenAI Security:

In the described scenario, we've established a robust security perimeter around our Azure OpenAI environment using standard networking practices. Here's how it works:

- Private Link: We've employed Private Link to establish a dedicated and private connection between our Azure App Service and Azure OpenAI. This serves as a crucial networking perimeter that ensures all communication remains within the protected Azure environment. It's similar to having a secure fortress wall around your critical resources, allowing only authorized access.

- Firewall Rules: To further enhance security, we've configured precise firewall rules within Azure OpenAI. These rules allow communication only from the outbound IP addresses of our Azure App Service, so beyond the private network path, only a specific, trusted entity can interact with Azure OpenAI. It's akin to having a heavily guarded gate within the fortress, allowing entry only to those with the right credentials.

Identity Perimeter: Leveraging Zero Trust and Managed Identities:

While the networking perimeter is a foundational security layer, modern security strategies go beyond it to create an identity perimeter. Here's how we can extend our security approach:

- Zero Trust: Zero Trust is a security framework that operates under the principle of "never trust, always verify." In this context, it means that we don't rely solely on network perimeters but instead verify the identity of users and devices attempting to access resources. Think of it as a system of multiple checkpoints and identity verification steps throughout your environment, ensuring that only authenticated and authorized entities can proceed.

- Managed Identities: Azure provides Managed Identities, which are a secure way to handle identities and access to Azure resources. By granting the App Service's managed identity an Azure RBAC role on the Azure OpenAI resource (such as Cognitive Services OpenAI User), we can ensure that only authorized applications or services can interact with Azure OpenAI. Azure manages and rotates the identities' credentials automatically, so no keys live in your application. It's like having digital badges for your applications, allowing them to access specific resources securely.

By combining the networking perimeter with the principles of Zero Trust and Managed Identities, we create a comprehensive security strategy. It's like fortifying our castle not only with strong walls but also with rigorous identity checks at every entry point, making our Azure OpenAI environment exceptionally secure and well-protected.
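To show what "no keys" looks like on the wire, here is a stdlib-only sketch that builds (but does not send) the two requests involved: fetching a token from the App Service managed identity endpoint, then calling Azure OpenAI with that token as a bearer credential instead of an api-key header. The resource name, deployment name, and API version 2024-02-01 are assumptions for illustration; in a real app, the azure-identity package's ManagedIdentityCredential handles token caching and refresh for you.

```python
import json
import urllib.parse
import urllib.request
from typing import Dict, List

# Token audience for Azure OpenAI (Azure AI services) data-plane calls.
COGNITIVE_SERVICES_RESOURCE = "https://cognitiveservices.azure.com"


def build_token_request(identity_endpoint: str, identity_header: str) -> urllib.request.Request:
    """Build (but do not send) an App Service managed identity token request.

    In App Service, identity_endpoint and identity_header come from the
    IDENTITY_ENDPOINT and IDENTITY_HEADER environment variables.
    """
    query = urllib.parse.urlencode(
        {"resource": COGNITIVE_SERVICES_RESOURCE, "api-version": "2019-08-01"}
    )
    return urllib.request.Request(
        f"{identity_endpoint}?{query}",
        headers={"X-IDENTITY-HEADER": identity_header},
    )


def build_chat_request(
    resource_name: str,
    deployment: str,
    access_token: str,
    messages: List[Dict[str, str]],
    api_version: str = "2024-02-01",  # assumed API version; use one your resource supports
) -> urllib.request.Request:
    """Build (but do not send) a chat completions call authenticated with a bearer token."""
    url = (
        f"https://{resource_name}.openai.azure.com/openai/deployments/"
        f"{deployment}/chat/completions?api-version={api_version}"
    )
    body = json.dumps({"messages": messages}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Authorization": f"Bearer {access_token}", "Content-Type": "application/json"},
        method="POST",
    )
```

Inside the app you would send the first request with urllib.request.urlopen, read access_token from its JSON response, and pass that token to build_chat_request. The request only succeeds if the identity holds a suitable RBAC role on the Azure OpenAI resource, which is the identity perimeter working alongside the network one.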

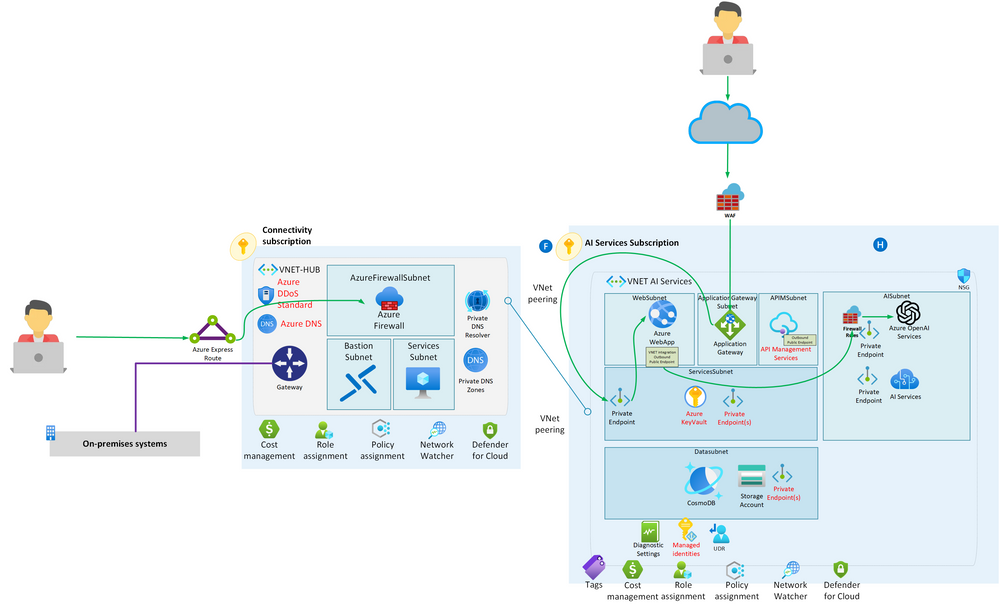

Scenario 2: Securely Exposing the Application with Application Gateway and WAF

Building upon the first scenario, this advanced configuration aims to securely expose the web application to external users while maintaining robust security measures. This scenario includes the following components:

- Standard Azure Landing Zone: The foundation of this architecture remains consistent with the Azure Landing Zone, ensuring a well-structured and secure environment.

- Internal Network Access: Access to the internal network is achieved through a jumpbox or bastion host, providing controlled and monitored access to critical resources.

- Azure App Service with Private Link: The web application continues to be hosted within the Azure App Service, utilizing a private link for connections. This private link ensures that the web application can securely communicate with Azure OpenAI via a private endpoint, maintaining isolation from the public internet.

- Azure OpenAI Integration: Azure OpenAI remains a crucial part of this scenario, facilitating AI capabilities for the web application. It is integrated through a private endpoint, ensuring data privacy and security.

- Azure Application Gateway (App GW): An Azure Application Gateway is introduced as a key component. It acts as a reverse proxy and load balancer for the web application. App GW enhances security and traffic management.

- Web Application Firewall (WAF): The Azure Application Gateway is equipped with a Web Application Firewall (WAF), which serves as a critical security layer. The WAF provides protection against common web vulnerabilities and attacks, safeguarding the web application from potential threats.

- Firewall Rules in Azure OpenAI: Firewall rules in Azure OpenAI restrict who may use its public endpoint. They admit only authorized traffic, such as traffic originating from the Azure App Service's outbound IP addresses, to the OpenAI services.

This architecture brings an extra layer of protection and load balancing capabilities through the Azure Application Gateway and Web Application Firewall. The App GW efficiently distributes incoming traffic to the web application while safeguarding it against malicious activities. Meanwhile, the private link and endpoint architecture maintain the privacy and security of data exchanged between the web application and Azure OpenAI.

While Scenario 1 prioritizes the network as a perimeter for security, this advanced scenario combines network security with enhanced application-level security through the Azure Application Gateway and Web Application Firewall. This comprehensive approach ensures that the web application remains highly available, performs optimally, and is well-protected against potential threats, providing a robust and secure environment for AI-powered applications.

DNS Resolution for Both Scenarios

In both of the scenarios outlined above, DNS resolution plays a critical role in enabling secure and efficient communication between the Azure App Service and Azure OpenAI. Here's how the DNS resolution process works:

- DNS Query: When the Azure App Service needs to connect to Azure OpenAI's private endpoint, it sends a DNS query to resolve the resource's hostname (for example, "<your-resource>.openai.azure.com") to an IP address. With VNet integration in place, this query goes to the Azure Private DNS Resolver, which is configured as the custom DNS server of the Virtual Network (VNet).

- Azure Private DNS Zone: An Azure Private DNS Zone named "privatelink.openai.azure.com" is linked to the hub virtual network, where the Private DNS Resolver resides. The public name "<your-resource>.openai.azure.com" is a CNAME to "<your-resource>.privatelink.openai.azure.com", and the private zone maps that name to the private endpoint's IP address. This link is crucial, as it ensures that DNS requests for Azure OpenAI are resolved internally within your Azure network.

- DNS Resolution: The Azure Private DNS Resolver consults the Azure OpenAI Private DNS Zone to obtain the private IP address associated with "<your-resource>.privatelink.openai.azure.com". This resolution happens entirely within your Azure network, maintaining security and privacy.

- Response: The resolver then provides the Azure App Service with the private IP address it retrieved from the DNS zone.

- Connection: Armed with the private IP address, your Azure App Service can establish a secure, private connection to Azure OpenAI's private endpoint. Importantly, this connection takes place entirely within your Azure network, eliminating the need for traffic to traverse the public internet.

This DNS resolution process ensures that DNS requests for Azure OpenAI are resolved to private IP addresses within your network, enhancing security and minimizing exposure to external networks. By leveraging Azure Private DNS and Private Link, you establish a secure communication channel between your application and Azure OpenAI while maintaining complete control over DNS resolution.
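A quick sanity check is to resolve the Azure OpenAI hostname from where your code actually runs (for example, the App Service SSH console or the jumpbox) and confirm every returned address is private. The sketch below uses only socket.getaddrinfo and ipaddress; the function names are ours, and the hostname you pass in is your own resource's.

```python
import ipaddress
import socket
from typing import Iterable, List


def resolve_addresses(hostname: str, port: int = 443) -> List[str]:
    """Return the distinct IP addresses the local resolver gives for hostname."""
    infos = socket.getaddrinfo(hostname, port, proto=socket.IPPROTO_TCP)
    return sorted({info[4][0] for info in infos})


def all_private(addresses: Iterable[str]) -> bool:
    """True only if there is at least one address and every address is private."""
    addrs = [ipaddress.ip_address(a) for a in addresses]
    return bool(addrs) and all(a.is_private for a in addrs)
```

For example, all_private(resolve_addresses("<your-resource>.openai.azure.com")) should be True from inside the VNet. If it is False, the query is not reaching the resolver that sees the private zone, and the app will talk to the public endpoint instead.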

App Service Gotchas and Networking Considerations

Azure App Service is a versatile platform for hosting web applications, including chatbots. However, it does have some networking nuances that need to be addressed in the context of Azure OpenAI Landing Zones.

- Dynamic Outbound IPs: A multi-tenant App Service app sends outbound traffic from a set of shared public IP addresses, and that set can change, for example when you scale the app to a different pricing tier. This makes it harder to configure firewall rules or IP-based access control for services like Azure OpenAI, which require you to list the allowed IP addresses.

  Solution: Allow every address the app might use, not just the ones it uses today. App Service exposes this full set in its possibleOutboundIpAddresses property, so your firewall rules or IP restrictions remain effective when the active outbound addresses change.

- Route Traffic Through a Fixed IP: In certain scenarios, you need a single, fixed, known IP address, for example when Azure OpenAI's firewall or another service enforces IP allow-listing and you don't want to maintain a list of shared addresses.

  Solution: To meet this requirement, enable VNet integration on the App Service, route its outbound traffic into the VNet, and send it out through a NAT Gateway (NATGW) or an Azure Firewall with a static public IP address. However, keep in mind that even with these solutions, the traffic still uses a public IP address, even though it doesn't leave Azure when the destination is an Azure service.

- VNet Integration: Azure App Service can be integrated with Azure Virtual Networks (VNets) to enable secure communication between your web app and resources within the VNet. This integration can enhance security and enable the use of private endpoints for communication with services like Azure OpenAI.

- Private Endpoints: Private endpoints offer a highly secure way to connect your Azure App Service to services like Azure OpenAI over a private network. They provide a private IP address within your VNet, ensuring that data does not traverse the public internet during communication.

- Secure Communication to Azure OpenAI: When your Azure App Service needs to connect to Azure OpenAI, it should use a private endpoint for Azure OpenAI. This approach ensures that the communication remains within your VNet and does not rely on public IP addresses; the traffic is encrypted with HTTPS (TLS) in either case. On the App Service side, you need VNet integration in addition to the Azure OpenAI private endpoint to make sure the app actually uses that private endpoint. If you only have the private endpoint and no VNet integration, the App Service resolves the Azure OpenAI hostname through public DNS and connects to the public endpoint. In theory this might not matter, because the traffic stays on the Microsoft backbone and does not go out to the public internet, but it's always better to be sure.

- Firewall Rules for Azure OpenAI: Because the Azure App Service's outbound IP addresses are public and can change, any traffic that still reaches Azure OpenAI's public endpoint must be admitted by firewall rules listing your App Service's outbound addresses. This step ensures that your web application can establish a secure connection with Azure OpenAI while the public endpoint stays closed to everyone else.

In essence, the key to successfully integrating Azure App Service into your Azure OpenAI Landing Zone lies in managing outbound connectivity effectively while ensuring secure, private, and controlled communication with Azure OpenAI. By addressing these considerations and configurations, you can establish secure, reliable, and efficient communication within your Azure AI Landing Zone.
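Turning the App Service's possible outbound addresses into firewall entries is easy to script. The sketch below takes the comma-separated value of possibleOutboundIpAddresses (for example, the output of az webapp show --resource-group <rg> --name <app> --query possibleOutboundIpAddresses --output tsv) and returns de-duplicated CIDR entries. The function name is ours, and it assumes the list contains only IPv4 addresses.

```python
import ipaddress
from typing import List


def outbound_ips_to_rules(possible_outbound_ips: str) -> List[str]:
    """Convert a comma-separated list of IPv4 addresses into CIDR allow-list entries."""
    networks = {
        ipaddress.ip_network(item.strip())  # a bare address becomes a /32
        for item in possible_outbound_ips.split(",")
        if item.strip()
    }
    # collapse_addresses only merges adjacent or overlapping networks, so the
    # result never admits an address that was not in the input.
    return [str(n) for n in ipaddress.collapse_addresses(networks)]
```

Merging only exactly adjacent addresses keeps the rules tight: two neighbouring addresses become one /31, but nothing outside the published list is ever allowed.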

Securing with Azure Application Gateway

In certain scenarios, particularly when deploying a chatbot that requires web-based interaction, Azure Application Gateway (App Gateway) proves to be an invaluable asset. App Gateway serves as a powerful layer-7 load balancer that not only enhances the availability and scalability of your applications but also plays a pivotal role in securing them.

Frontend IP Configuration: When setting up Azure Application Gateway, you define a Frontend IP Configuration, which acts as the entry point for incoming traffic. You can configure this to be a public IP or a private IP address, depending on your security requirements. For a secure chatbot deployment, the use of a private IP address is often preferred, aligning with the best practices of keeping your chatbot communication within the Azure network.

Backend Pool with Private Endpoints: To establish a secure connection between Azure Application Gateway and your chatbot, you set up a backend pool pointing to the Azure App Service where your chatbot resides. Importantly, this backend pool should use the private endpoint associated with your App Service. Private Endpoints allow the chatbot service to communicate securely within your Azure Virtual Network using a private IP address.

Web Application Firewall (WAF): One of the standout features of Azure Application Gateway is its integration with Web Application Firewall (WAF). The WAF helps safeguard your chatbot by inspecting and filtering incoming traffic. It's a critical security layer that protects against a wide range of web-based threats, such as SQL injection, cross-site scripting (XSS), and more. Configuring and fine-tuning WAF rules according to your chatbot's specific requirements can significantly enhance its security posture.

SSL Termination: Azure Application Gateway also offers the advantage of SSL termination. When deploying a chatbot that requires secure communication over HTTPS, the gateway can handle the SSL/TLS encryption and decryption, thereby offloading this resource-intensive task from your chatbot service. This not only simplifies certificate management but also improves performance.
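One practical consequence of terminating TLS at the gateway is that the chatbot backend no longer sees the client directly: the connection arrives from the gateway, and the original details travel in headers such as X-Forwarded-For and X-Forwarded-Proto, which Application Gateway adds. The helpers below are a hedged sketch for reading them; the function names are ours, and because the exact X-Forwarded-For format can vary (with or without a port), the parser accepts bare addresses, "ipv4:port" and "[ipv6]:port". Only trust these headers if the backend accepts traffic exclusively from the gateway, since clients can otherwise forge them.

```python
import ipaddress
from typing import Mapping, Optional


def client_ip_from_xff(xff: str) -> Optional[str]:
    """Return the left-most (original client) address from X-Forwarded-For.

    Accepts "ip", "ipv4:port" and "[ipv6]:port" entries; raises ValueError
    on anything else.
    """
    first = xff.split(",")[0].strip()
    if not first:
        return None
    if first.startswith("["):  # "[ipv6]:port"
        return str(ipaddress.ip_address(first[1:first.index("]")]))
    try:
        return str(ipaddress.ip_address(first))  # bare IPv4 or IPv6 address
    except ValueError:
        host = first.rsplit(":", 1)[0]  # "ipv4:port"
        return str(ipaddress.ip_address(host))


def original_scheme(headers: Mapping[str, str]) -> str:
    """Scheme the client used before the gateway terminated TLS."""
    lowered = {k.lower(): v for k, v in headers.items()}  # header names are case-insensitive
    return lowered.get("x-forwarded-proto", "http").strip().lower()
```

A backend can use original_scheme to build correct absolute URLs (https, not the plain http it received from the gateway) and client_ip_from_xff for logging or rate limiting per user.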

By integrating Azure Application Gateway into your chatbot deployment, you ensure that incoming requests are efficiently routed to your chatbot service via a private endpoint. The Web Application Firewall adds an essential security layer, and SSL termination simplifies secure communication setup. These components work harmoniously to protect your chatbot while maintaining optimal performance and accessibility. Incorporating Azure Application Gateway is a valuable step towards a secure and robust chatbot deployment within your Azure environment.

Conclusion

Deploying intelligent chatbots powered by OpenAI within Microsoft Azure offers numerous advantages, including scalability, reliability, and seamless integration with other Azure services. However, to ensure the optimal performance, security, and compliance of your chatbot solutions, it's crucial to pay careful attention to the networking aspects.

Azure Landing Zones provide a structured approach to setting up Azure resources, ensuring a strong foundation for your chatbot deployments. Understanding how PaaS services operate within Azure's network and utilizing features like Virtual Network (VNet) Integration and Private Endpoints can enhance the security and efficiency of your chatbot's networking.

Moreover, Azure's intelligent network architecture offers the capability to keep traffic within the Azure network backbone, minimizing latency and improving performance for chatbot interactions. It efficiently routes traffic to designated egress points only when necessary, ensuring that your chatbot's outbound traffic to the public internet is optimized and secure.

By implementing these best practices and leveraging Azure's networking capabilities, you can ensure that your chatbot solutions deliver exceptional user experiences while maintaining the highest standards of performance and security.

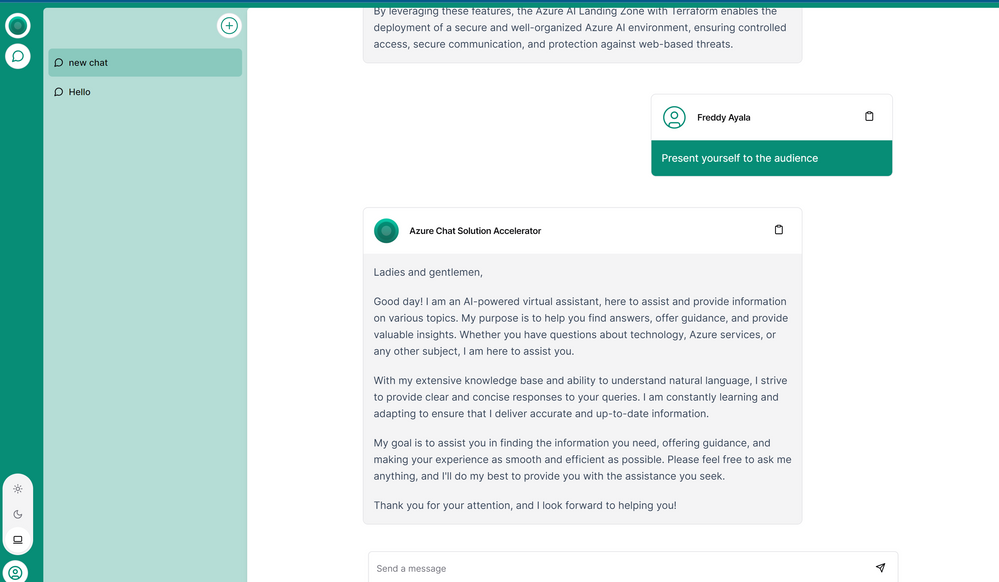

You can use my repository at https://github.com/FreddyAyala/AzureAIServicesLandingZone to access a comprehensive working example. This repository provides the resources and configurations needed to deploy both a complete AI Landing Zone and a functional chatbot using Azure OpenAI services.

Whether you're looking to set up an end-to-end AI Landing Zone or simply integrate a chatbot into your existing Azure environment, this repository offers a valuable starting point. It streamlines the deployment process, saving you time and effort, and allows you to harness the capabilities of OpenAI in Azure for your specific use cases.

By leveraging this repository, you can explore and implement AI solutions more efficiently, enabling you to take full advantage of the power of intelligent chatbots within your applications.
