Navigating confidential computing across Azure

What is confidential computing?

Confidential computing is the protection of data in use by performing computation in a hardware-based Trusted Execution Environment (TEE). Cloud-native workload data is typically protected in transit through network encryption (e.g. TLS, VPN) and at rest (e.g. encrypted storage); confidential computing adds protection of data in memory while it is being processed. The confidential computing threat model aims to remove or reduce the ability of a cloud provider operator and other actors in the tenant’s domain to access code and data while they are being executed.

Technologies such as Intel Software Guard Extensions (SGX) and AMD Secure Encrypted Virtualization-Secure Nested Paging (SEV-SNP) are recent CPU improvements that support confidential computing implementations. These technologies are designed as virtualization extensions and provide feature sets, including memory encryption and integrity, CPU-state confidentiality and integrity, and attestation, on which the confidential computing threat model is built.

Figure 1 – The three states of data protection.

When used in conjunction with data encryption at rest and in transit, confidential computing eliminates the single largest remaining barrier in encryption (encryption in use). This makes it possible to move sensitive or highly regulated data sets and application workloads from an inflexible, expensive on-premises IT infrastructure to a more flexible and modern public cloud platform. Confidential computing also extends beyond generic data protection: TEEs are being used to protect proprietary business logic, analytics functions, machine learning algorithms, or entire applications.

Customers like Signal, for example, adopt Azure confidential computing to provide a scalable and secure environment for their messenger apps. Signal’s private contact discovery service efficiently and scalably determines whether the contacts in a user’s address book are Signal users without revealing those contacts even to the Signal service. Contact data stays inaccessible to any unauthorized party, including staff at Signal or at Microsoft as the cloud provider.

“We utilize Azure confidential computing to provide scalable, secure environments for our services. Signal puts users first, and Azure helps us stay at the forefront of data protection with confidential computing.” Jim O'Leary, VP of Engineering, Signal

Royal Bank of Canada (RBC) is currently piloting a confidential multiparty data analytics and machine learning pipeline on top of the Azure confidential computing platform. The platform gives participating institutions confidence that their confidential customer and proprietary data is not visible to other participating institutions, including RBC itself.

“Now with Azure confidential computing, we can protect data not only at rest and in transit, but also while it is in use, which completes the life cycle around data privacy.” Bob Blainey, RBC Fellow, Royal Bank of Canada

Confidential computing use cases

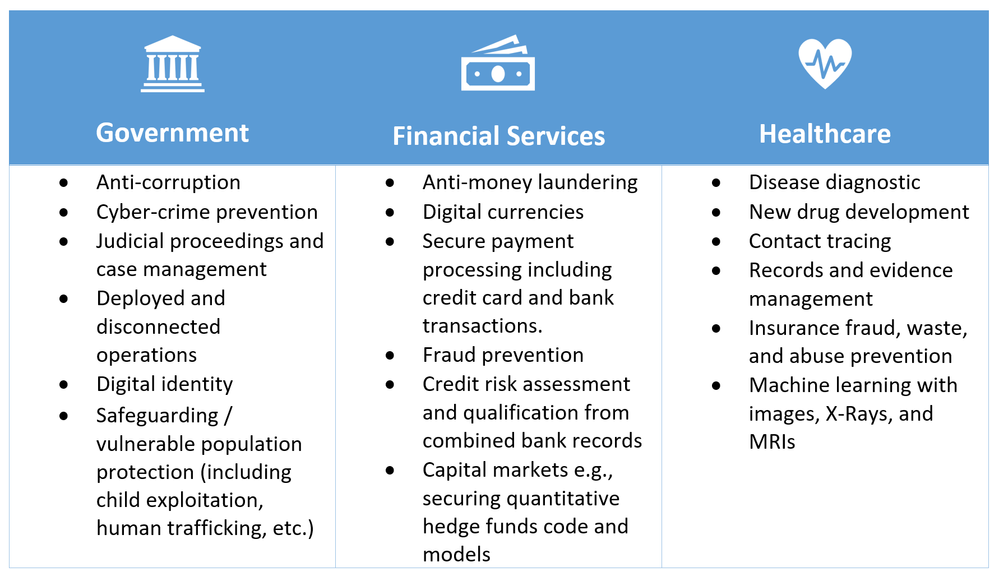

We have observed a variety of use cases for protecting data in regulated industries such as government, financial services, and healthcare. For example, preventing access to PII (Personally Identifiable Information) helps protect the digital identity of citizens who access public services from all parties involved in the data access, including the cloud provider that stores the data. The same personally identifiable information may contain biometric data that can be used to find and remove known images of child exploitation, to prevent human trafficking, and to support digital forensics investigations.

Business transactions and project collaboration require the sharing of information among multiple parties. Often, the data being shared is confidential, whether it’s PII, financial records, medical records, private citizen data, etc. Public and private organizations require that data be protected from unauthorized access, including access by the people who regularly deal with that data, such as computing infrastructure admins or engineers, security architects, business consultants, and data scientists.

The use of machine learning for healthcare services has grown massively with broader access to large datasets and to patient imagery captured by medical devices. Disease diagnostics and drug development benefit from access to datasets from multiple data sources. Hospitals and health institutes can collaborate by sharing their patient medical records with a centralized trusted execution environment (TEE). Machine learning services running in the TEE aggregate and analyze the data and can provide more accurate predictions by training their models on consolidated datasets, without compromising the privacy of patients.

Figure 2 – Some of the potential business use cases that confidential computing helps address.

Navigating Azure confidential computing offerings

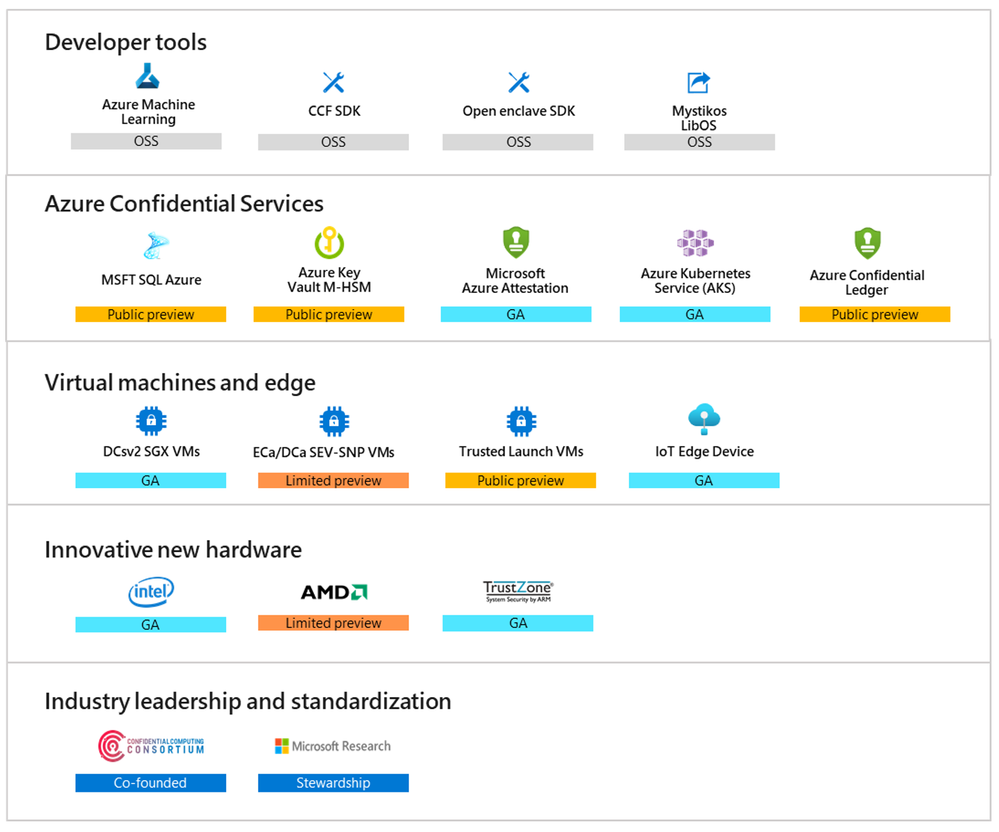

Microsoft's offerings for confidential computing extend from Infrastructure as a Service (IaaS) to Platform as a Service (PaaS), and also include developer tools to support your journey to data and code confidentiality in the cloud.

Figure 3 – The Azure Confidential Computing technology stack.

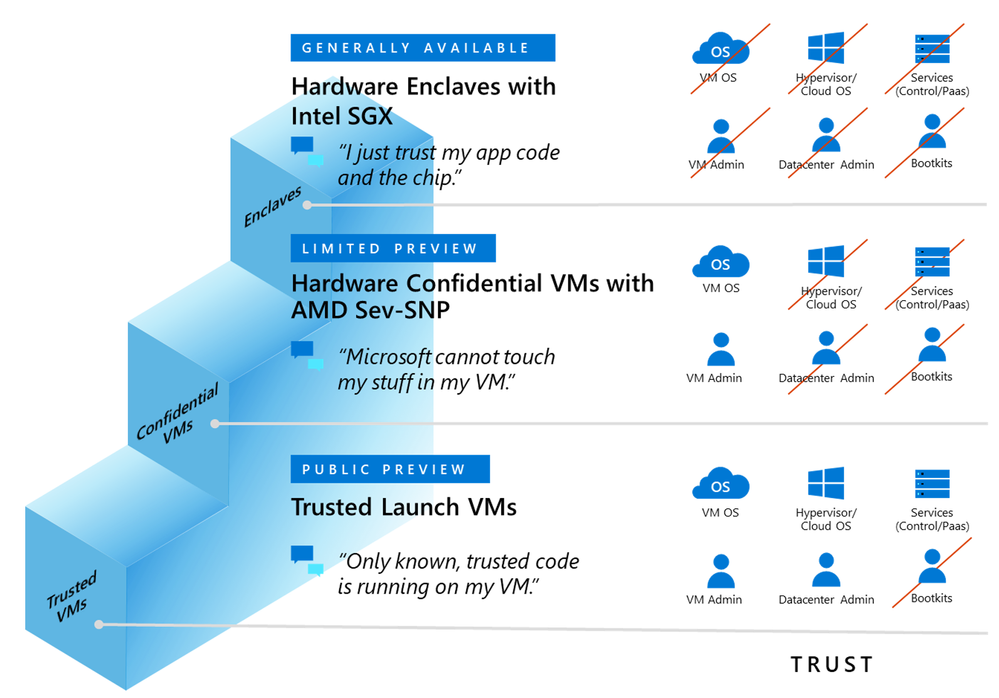

Azure offers different virtual machines for confidential computing IaaS workloads and customers can choose what’s best for them depending on their desired security posture. Figure 4 shows the “trust ladder” of what customers can expect from a security posture perspective on these IaaS offerings.

Figure 4 – The “trust ladder” of Azure confidential computing IaaS.

Our services that are currently generally available include:

- Intel SGX-enabled Virtual Machines. Azure offers the DCsv2 series built on Intel SGX technology for hardware-based enclave creation. You can build secure enclave-based applications to run in the DCsv2-series of VMs to protect your application data and code in use.

- Enclave-aware containers running on Azure Kubernetes Service (AKS). Confidential computing nodes on AKS use Intel SGX to create enclave environments within the nodes that isolate each container application from the others.

- Microsoft Azure Attestation, a remote attestation service for validating the trustworthiness of multiple Trusted Execution Environments (TEEs) and verifying integrity of the binaries running inside the TEEs.

- Azure Key Vault Managed HSM, a fully managed, highly available, single-tenant, standards-compliant cloud service that enables you to safeguard cryptographic keys for your cloud applications, using FIPS 140-2 Level 3 validated Hardware Security Modules (HSM).

- Azure IoT Edge supports confidential applications that run within secure enclaves on an Internet of Things (IoT) device. IoT devices are often exposed to tampering and forgery because they are physically accessible by bad actors. Confidential IoT Edge devices add trust and integrity at the edge by protecting the access to telemetry data captured by and stored inside the device itself before streaming it to the cloud.
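The remote attestation idea mentioned in the list above can be illustrated with a small toy model. This is only a sketch of the general concept (measure the code, bind the measurement to a fresh nonce, and let a verifier check it), not the Microsoft Azure Attestation API or the Intel SGX quote format. The names `HARDWARE_KEY`, `measure`, `make_evidence`, and `verify_evidence` are hypothetical, and a shared HMAC key stands in for the hardware-rooted signing key that a real TEE would use.

```python
import hashlib
import hmac
import secrets

# Assumption: a shared secret stands in for the hardware root of trust.
# Real TEEs sign evidence with keys rooted in the CPU, and a verifier checks
# that signature against the hardware vendor's certificate chain.
HARDWARE_KEY = secrets.token_bytes(32)


def measure(binary: bytes) -> str:
    """Return the SHA-256 digest identifying the code loaded into the TEE."""
    return hashlib.sha256(binary).hexdigest()


def make_evidence(binary: bytes, nonce: bytes) -> dict:
    """TEE side: report the measurement, bound to the verifier's nonce."""
    measurement = measure(binary)
    tag = hmac.new(
        HARDWARE_KEY, measurement.encode() + nonce, hashlib.sha256
    ).hexdigest()
    return {"measurement": measurement, "tag": tag}


def verify_evidence(evidence: dict, expected_measurement: str, nonce: bytes) -> bool:
    """Verifier side: accept only authentic, fresh evidence for the expected code."""
    expected_tag = hmac.new(
        HARDWARE_KEY, evidence["measurement"].encode() + nonce, hashlib.sha256
    ).hexdigest()
    authentic_and_fresh = hmac.compare_digest(evidence["tag"], expected_tag)
    expected_code = hmac.compare_digest(evidence["measurement"], expected_measurement)
    return authentic_and_fresh and expected_code


if __name__ == "__main__":
    trusted_binary = b"enclave application v1"
    nonce = secrets.token_bytes(16)  # fresh per request, prevents replay

    good = make_evidence(trusted_binary, nonce)
    print(verify_evidence(good, measure(trusted_binary), nonce))  # True

    tampered = make_evidence(b"enclave application v1 (modified)", nonce)
    print(verify_evidence(tampered, measure(trusted_binary), nonce))  # False
```

In this model, a relying party would release secrets such as decryption keys to the workload only after `verify_evidence` returns `True`.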

Additional services are currently in public preview, including our recent announcements at Microsoft Build 2021:

- Confidential Virtual Machines based on AMD SEV-SNP technology are currently in limited preview and available to selected customers. To sign up for access to the limited preview of Azure Confidential VMs, please fill in this form.

- Trusted Launch hardens Generation 2 VMs with security features (secure boot, virtual trusted platform module, and boot integrity monitoring) that protect against boot kits, rootkits, and kernel-level malware.

- Always Encrypted with secure enclaves in Azure SQL. The confidentiality of sensitive data is protected from malware and high-privileged unauthorized users: when a SQL statement contains operations on encrypted data that require the secure enclave, the database engine runs those operations directly inside a TEE.

- Azure Confidential Ledger (ACL), a tamper-proof register for storing sensitive data for record keeping and auditing, or for data transparency in multi-party scenarios. It offers Write-Once-Read-Many guarantees, which make data non-erasable and non-modifiable. The service is built on Microsoft Research’s Confidential Consortium Framework.

- Confidential Inference ONNX Runtime, a Machine Learning (ML) inference server that restricts the ML hosting party from accessing both the inferencing request and its corresponding response.
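The Write-Once-Read-Many property described for Azure Confidential Ledger can be illustrated with a toy append-only log in which each entry carries the hash of its predecessor. This is a conceptual sketch only. It does not use the Azure Confidential Ledger SDK or the Confidential Consortium Framework, and the `Entry` and `AppendOnlyLedger` names are hypothetical. It shows why modifying a stored record becomes detectable once entries are chained together.

```python
import hashlib
import json
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen: entries cannot be mutated after creation
class Entry:
    index: int
    payload: str
    prev_hash: str
    entry_hash: str


def _hash_entry(index: int, payload: str, prev_hash: str) -> str:
    """Hash the entry contents together with the previous entry's hash."""
    body = json.dumps(
        {"index": index, "payload": payload, "prev": prev_hash}, sort_keys=True
    )
    return hashlib.sha256(body.encode()).hexdigest()


class AppendOnlyLedger:
    GENESIS = "0" * 64  # placeholder hash that precedes the first entry

    def __init__(self) -> None:
        self._entries = []

    def append(self, payload: str) -> Entry:
        """Write once: add a new entry linked to the current tail."""
        prev_hash = self._entries[-1].entry_hash if self._entries else self.GENESIS
        index = len(self._entries)
        entry = Entry(index, payload, prev_hash, _hash_entry(index, payload, prev_hash))
        self._entries.append(entry)
        return entry

    def read(self, index: int) -> Entry:
        """Read many: entries can be fetched any number of times."""
        return self._entries[index]

    def verify(self) -> bool:
        """Recompute the whole chain; any altered entry breaks it."""
        prev_hash = self.GENESIS
        for i, entry in enumerate(self._entries):
            if entry.index != i or entry.prev_hash != prev_hash:
                return False
            if entry.entry_hash != _hash_entry(entry.index, entry.payload, entry.prev_hash):
                return False
            prev_hash = entry.entry_hash
        return True


if __name__ == "__main__":
    ledger = AppendOnlyLedger()
    ledger.append("audit: key rotated")
    ledger.append("audit: access granted")
    print(ledger.verify())  # True
```

The class exposes no update or delete method, and `verify` fails if someone bypasses the interface and swaps a stored entry. A real service additionally runs this kind of logic inside TEEs across multiple nodes, which this sketch does not attempt to model.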

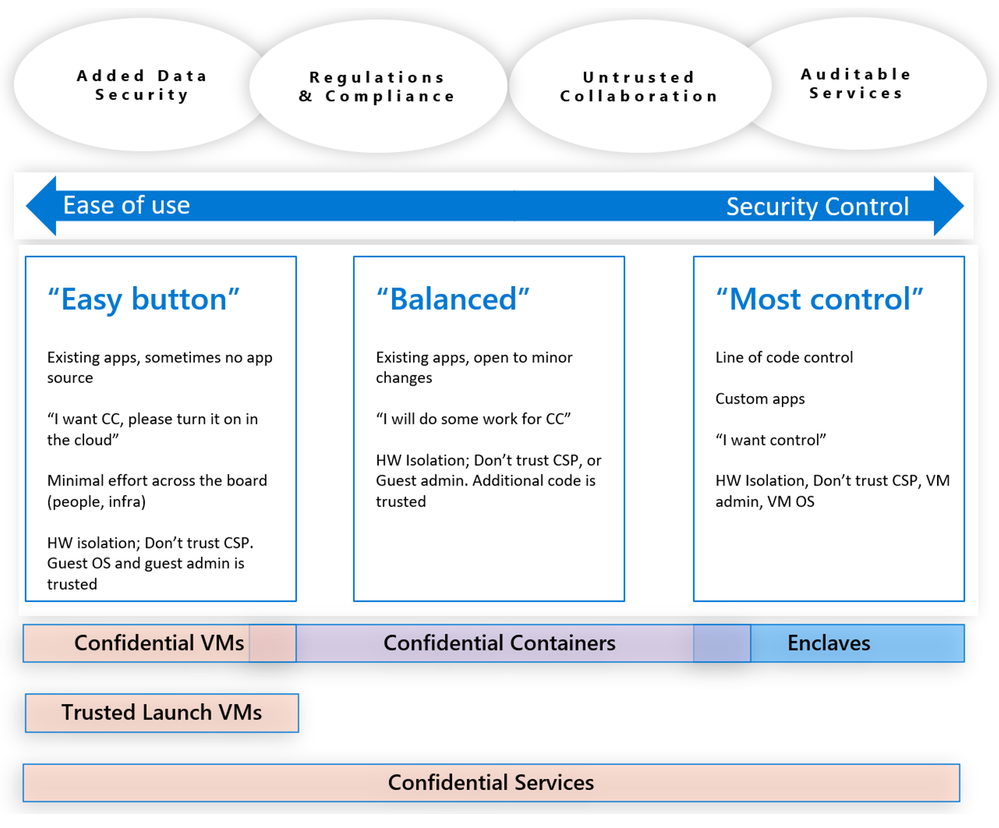

We have seen that different workloads have different requirements, depending on whether customers are able to modify their code or would instead prefer to “lift and shift” it into a confidential environment. Figure 5 can help you navigate the Confidential IaaS, Containers, Enclaves, and PaaS offerings in Azure.

Figure 5 – Navigating the Azure confidential computing offerings.

Conclusion

Our vision with confidential computing is to transform the Azure Cloud into the Azure Confidential Cloud and to move the industry from computing in the clear to computing confidentially, in the cloud as well as at the edge. Join us as we create this future!

For more information on the current services, please visit https://aka.ms/azurecc or our docs.
