Enrich your Data Estate with Fabric Pipelines and Azure OpenAI

The benefits of Generative AI are of huge interest to many organisations, and the possibilities seem endless. One particularly interesting use case is using Azure OpenAI models in data pipelines to create new data assets or enrich existing ones.

Integrating Azure OpenAI into Fabric data processing pipelines enables numerous scenarios, either creating new datasets or augmenting existing ones to support downstream analytics. As a simple example, a generative AI natural language model could be used to gather additional information about zip codes, such as demographics (population, occupations, etc.), which could in turn be ingested and conditioned to enrich the data.

The following example demonstrates how Fabric pipelines can be integrated with Azure OpenAI using the pipeline Web activity, whilst also leveraging Azure API Management to provide an additional management and security layer. I am a big fan of placing API Management in front of any internal or external API services because of capabilities such as authentication, throttling, header manipulation and versioning. Further guidance on Azure OpenAI and API Management is available in Build an enterprise-ready Azure OpenAI solution with Azure API Management (Microsoft Community Hub).

The Fabric pipeline and Azure OpenAI flow is as follows:

- Extract a data element from the Fabric data warehouse (in this case, a zip code)

- Pass the value to an Azure OpenAI natural language model (GPT-3.5 Turbo) via Azure API Management

- The GPT-3.5 Turbo model (which understands and generates natural language and code) returns information based on the zip code to the Fabric pipeline; in this example, population information is returned, and the pipeline can then process it further and/or persist it to storage.
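
The three steps above can be sketched in Python as a plain loop. This is only an illustration of the data flow, not how a Fabric pipeline executes (the pipeline uses Script and Web activities). The prompt wording in `build_question` and the `ask_model` callable, which stands in for the Web activity call through API Management, are hypothetical.

```python
from typing import Callable, Dict, Iterable


def build_question(zip_code: str) -> str:
    # Hypothetical prompt wording; the original pipeline's prompt is not shown.
    return f"What is the population of zip code {zip_code}?"


def enrich_zip_codes(
    zip_codes: Iterable[str],
    ask_model: Callable[[str], str],
) -> Dict[str, str]:
    """Mirror the pipeline flow: extract -> ask the model -> collect results."""
    enriched: Dict[str, str] = {}
    for zip_code in zip_codes:
        # Step 1: the value extracted from the warehouse (Script activity).
        question = build_question(zip_code)
        # Step 2: send it to the model (Web activity via API Management).
        answer = ask_model(question)
        # Step 3: keep the answer keyed by zip code for processing/persistence.
        enriched[zip_code] = answer
    return enriched


if __name__ == "__main__":
    # A fake model so the sketch runs without any network access.
    fake_model = lambda q: f"(model answer to: {q})"
    print(enrich_zip_codes(["98052", "10001"], fake_model))
```

Injecting `ask_model` keeps the flow testable; in a real run it would wrap the HTTP call to the API Management endpoint.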

Fabric pipelines provide an excellent range of integration options. The Web activity (see Web activity - Microsoft Fabric | Microsoft Learn), coupled with dynamic content in Fabric, is extremely powerful and supports a range of HTTP methods (GET, POST, PUT, DELETE and PATCH) for calling web services. Note that the same functionality can be achieved in Azure Data Factory pipelines.

The diagram below illustrates the simple Fabric pipeline flow and activities.

Figure 1.0 Microsoft Fabric Pipeline integrating Azure OpenAI

The initial Script activity extracts a source data attribute, in this case a zip code, from the Fabric OneLake data warehouse. The output is stored in the pipeline variable varQuestionParameter. In this example, an intermediate variable is used for debugging purposes and can be removed later if needed.

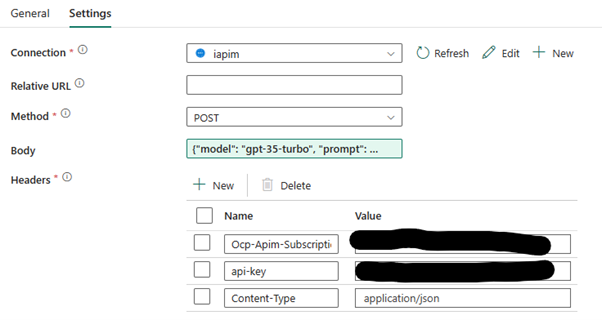
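
Reading the zip code out of the Script activity output can be sketched in Python as follows. This assumes the output follows the `resultSets[].rows[]` shape documented for the Azure Data Factory Script activity; the activity name `Script1` and the column name `ZipCode` are hypothetical. In the pipeline itself, the equivalent would be an expression such as `@activity('Script1').output.resultSets[0].rows[0].ZipCode`.

```python
import json

# Hypothetical Script activity output; the shape assumes the
# resultSets[].rows[] structure used by the Data Factory Script activity.
SAMPLE_OUTPUT = json.loads("""
{
  "resultSetCount": 1,
  "recordsAffected": 0,
  "resultSets": [
    {"rowCount": 1, "rows": [{"ZipCode": "98052"}]}
  ]
}
""")


def first_value(script_output: dict, column: str) -> str:
    """Python equivalent of
    @activity('Script1').output.resultSets[0].rows[0].<column>."""
    result_sets = script_output.get("resultSets", [])
    # Fail loudly instead of sending an empty question to the model.
    if not result_sets or not result_sets[0].get("rows"):
        raise ValueError("Script activity returned no rows")
    return str(result_sets[0]["rows"][0][column])


# Equivalent of assigning the value to the varQuestionParameter variable.
var_question_parameter = first_value(SAMPLE_OUTPUT, "ZipCode")
print(var_question_parameter)  # 98052
```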

The pipeline Web activity is configured to send a POST request to the Azure OpenAI natural language model via API Management, with headers for the APIM subscription key, the API key and the Content-Type, as shown below.

Figure 2.0 Microsoft Fabric Pipeline Web Activity configuration

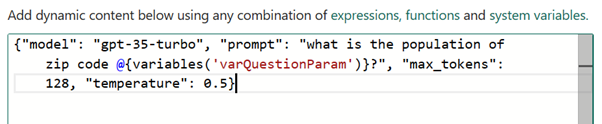
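
The URL and headers that the Web activity sends can be sketched in Python as below. `Ocp-Apim-Subscription-Key` is API Management's default subscription key header and `api-key` is the Azure OpenAI key header; the APIM base URL, the assumption that the APIM API exposes the Azure OpenAI route unchanged, the deployment name and the `api-version` value are all hypothetical and depend on how your API Management instance is configured.

```python
from typing import Dict, Tuple


def build_apim_request(
    apim_base_url: str,
    deployment: str,
    api_version: str,
    subscription_key: str,
    api_key: str,
) -> Tuple[str, Dict[str, str]]:
    """Return the URL and headers a Web activity POST would use."""
    # Assumes the APIM API mirrors the Azure OpenAI REST route (hypothetical).
    url = (
        f"{apim_base_url.rstrip('/')}/openai/deployments/{deployment}"
        f"/chat/completions?api-version={api_version}"
    )
    headers = {
        # Default header API Management checks for the subscription key.
        "Ocp-Apim-Subscription-Key": subscription_key,
        # Azure OpenAI key header, forwarded to the backend by APIM.
        "api-key": api_key,
        "Content-Type": "application/json",
    }
    return url, headers


if __name__ == "__main__":
    url, headers = build_apim_request(
        "https://contoso-apim.azure-api.net",  # hypothetical APIM gateway
        "gpt-35-turbo",                        # hypothetical deployment name
        "2024-02-01",
        "<subscription-key>",
        "<api-key>",
    )
    print(url)
```

In practice the keys would come from a secure store such as Azure Key Vault rather than being typed into the activity.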

The body of the POST request is constructed dynamically using parameters, as shown below.

Figure 3.0 Microsoft Fabric Pipeline Web Activity dynamic content

Dynamic expressions in Fabric pipelines are incredibly powerful and allow run-time configuration of activities, connections and datasets.
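
The JSON body that the dynamic content produces can be sketched in Python as a chat completions request. The system prompt and the default values for `max_tokens` and `temperature` are hypothetical, since the original expression is only shown in the figure; `question` plays the role of varQuestionParameter.

```python
import json


def build_chat_body(question: str, max_tokens: int = 200, temperature: float = 0.2) -> str:
    """Serialise a chat completions request body for the Web activity."""
    body = {
        "messages": [
            # Hypothetical system prompt; the original prompt is not shown.
            {"role": "system", "content": "You provide demographic facts about zip codes."},
            # The value extracted by the Script activity.
            {"role": "user", "content": question},
        ],
        "max_tokens": max_tokens,
        "temperature": temperature,
    }
    # json.dumps escapes quotes and newlines in the question, which naive
    # string concatenation inside a pipeline expression would not.
    return json.dumps(body)


if __name__ == "__main__":
    print(build_chat_body("What is the population of zip code 98052?"))
```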

In the example shown above, max_tokens is a configurable parameter that specifies the maximum number of tokens (chunks of text, often parts of words) that can be generated in the chat completion. Occasionally it is necessary to increase this value: if responses are being cut off, set max_tokens higher so that the model does not stop generating text before it reaches the end of the message.
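
When a completion is cut off by the max_tokens limit, the chat completions response reports `finish_reason` as `"length"` instead of `"stop"`, so truncation can be detected rather than guessed. A minimal Python sketch follows; the doubling policy and the ceiling of 1000 are hypothetical choices. In a pipeline, the same check could drive an If Condition activity on an expression such as `@activity('Web1').output.choices[0].finish_reason`, where `Web1` is a hypothetical activity name.

```python
def completion_was_truncated(response: dict) -> bool:
    """True if the model stopped because it hit max_tokens."""
    # "length" means the token limit was reached; "stop" means a natural end.
    return response["choices"][0].get("finish_reason") == "length"


def next_max_tokens(current: int, response: dict, ceiling: int = 1000) -> int:
    """Hypothetical policy: double max_tokens after a truncated answer, up to a ceiling."""
    if completion_was_truncated(response):
        return min(current * 2, ceiling)
    return current
```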

In contrast, the (sampling) temperature controls model creativity. A higher temperature (e.g., 0.7) results in more diverse and creative output, while a lower temperature (e.g., 0.2) makes the output more deterministic and focused. Examples of values and definitions can be found in Cheat Sheet: Mastering Temperature and Top_p in ChatGPT API (OpenAI Developer Forum).
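
Temperature is commonly described as dividing the model's raw token scores (logits) by T before the softmax that turns them into probabilities, p_i = exp(z_i / T) / sum_j exp(z_j / T). The Python sketch below illustrates that textbook formulation with three made-up logits; it is not a description of the service's internal sampling code. A lower T concentrates probability on the top token (more deterministic), while a higher T spreads it out (more diverse).

```python
import math
from typing import List


def softmax_with_temperature(logits: List[float], temperature: float) -> List[float]:
    """Convert logits to probabilities, dividing by temperature first."""
    if temperature <= 0:
        raise ValueError("temperature must be positive")
    scaled = [z / temperature for z in logits]
    # Subtract the max before exponentiating for numerical stability.
    m = max(scaled)
    exps = [math.exp(s - m) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    logits = [2.0, 1.0, 0.5]  # illustrative scores for three candidate tokens
    for t in (0.2, 0.7):
        probs = softmax_with_temperature(logits, t)
        print(t, [round(p, 3) for p in probs])
```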

The model's response is returned to the Fabric Web activity, whose output can then be persisted in Fabric OneLake or another storage destination. This is just a simple example demonstrating how easy it is to introduce Generative AI scenarios into data integration pipelines.
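
Extracting the answer from the response and shaping it for storage can be sketched in Python as below. The answer text lives at `choices[0].message.content` in a chat completions response (in the pipeline, something like `@activity('Web1').output.choices[0].message.content`, with `Web1` a hypothetical activity name). The column names are hypothetical, and writing CSV text is only a stand-in for a OneLake file or table write.

```python
import csv
import io
from typing import Dict, List


def extract_answer(response: dict) -> str:
    """Pull the assistant text out of a chat completions response."""
    return response["choices"][0]["message"]["content"].strip()


def to_csv(rows: List[Dict[str, str]]) -> str:
    """Serialise enriched rows to CSV text (stand-in for a OneLake write)."""
    buffer = io.StringIO()
    # Hypothetical column names for the enriched dataset.
    writer = csv.DictWriter(buffer, fieldnames=["zip_code", "population_info"])
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()
```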

Please post if you have questions/comments, or if you are exploring data pipeline and generative AI integration scenarios to enable new insights.

References

- Fabric Pipelines: Ingest data into your Warehouse using data pipelines - Microsoft Fabric | Microsoft Learn

- Fabric Pipelines vs. Azure Data Factory: Differences between Data Factory in Fabric and Azure - Microsoft Fabric | Microsoft Learn

- Azure OpenAI Service Models: Azure OpenAI Service models - Azure OpenAI | Microsoft Learn

- Azure OpenAI and API Management: Build an enterprise-ready Azure OpenAI solution with Azure API Management - Microsoft Community Hub

- Azure Architecture Center: Azure Architecture Center - Azure Architecture Center | Microsoft Learn
