Bring your own data to Azure OpenAI chat models

Introduction

Azure OpenAI models provide a secure and robust solution for tasks such as creating content, summarizing information, and other applications that involve working with human language. Now you can run these models over your own data. Try Azure OpenAI Studio today to interact with your data in natural language and publish the experience as an app from within the studio.

Getting Started

Follow this quickstart tutorial for the prerequisites and for setting up your Azure OpenAI environment.

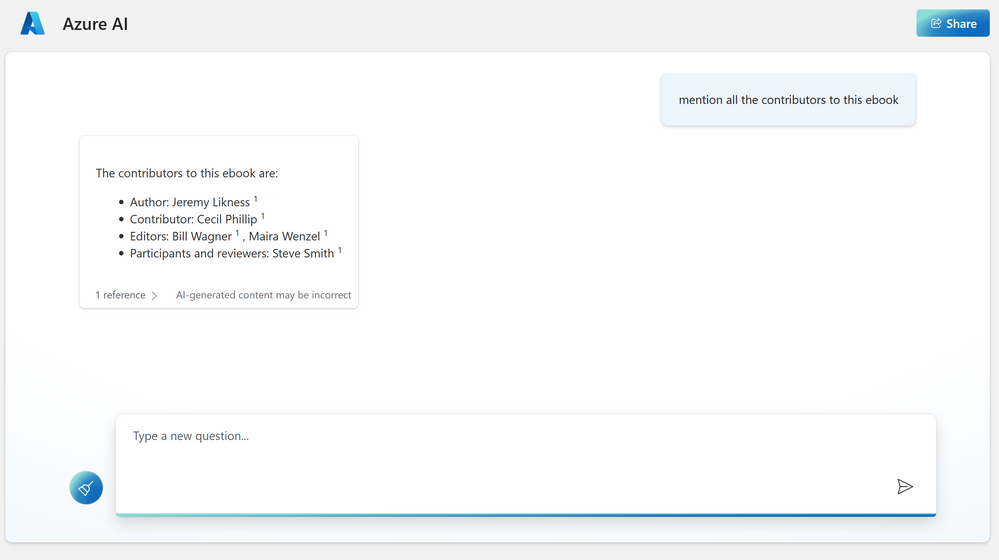

To test the Azure OpenAI model on private data, I uploaded an e-book to the Azure OpenAI chat model: "Serverless Apps: Architecture, patterns and Azure Implementation" by Jeremy Likness and Cecil Phillip. You can download the e-book here.

Before uploading your own data

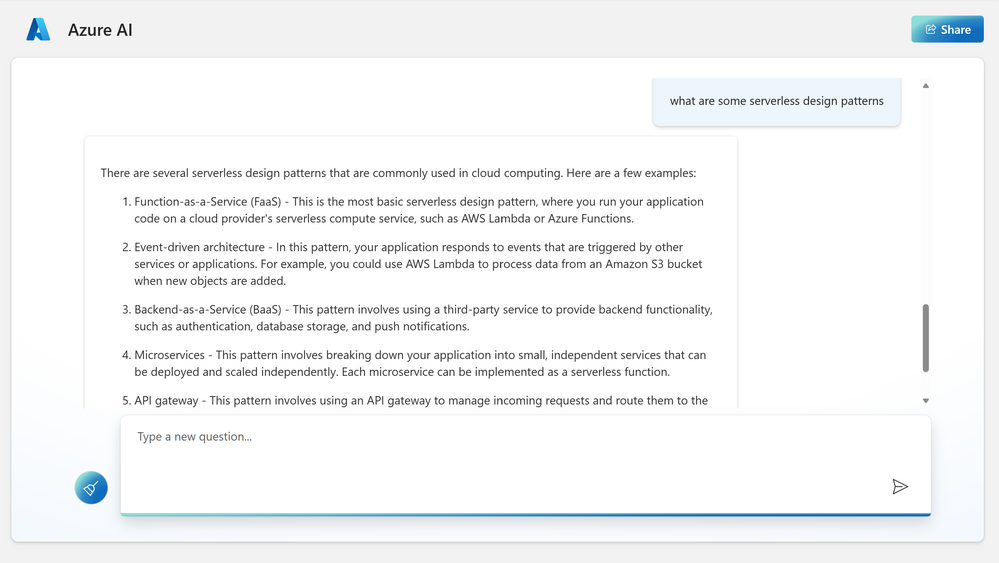

Before this e-book was uploaded, the model answered the question about serverless design patterns as shown above. The response is relevant, but let's see whether the model picks up the e-book's content once it is added.

After uploading your own data

The e-book has a dedicated section that covers design patterns such as scheduling, CQRS, and event-based processing in detail.

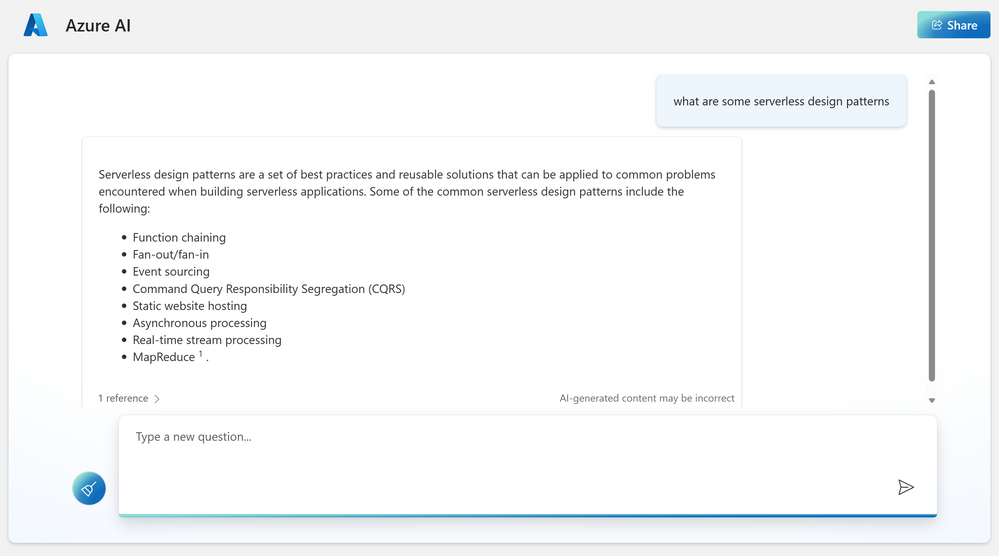

After adding this PDF as a data source (the model itself is not retrained; the content is indexed and retrieved to ground its answers), I asked a few questions, and the responses were nearly accurate. I also restricted the model to answer only from the uploaded content. Here's what I found.

When I asked about the e-book's contributors, it listed all of them correctly.
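Restricting the model to the uploaded content amounts to grounding: retrieved passages are placed in the prompt along with an instruction to answer only from them. The sketch below shows one way such a prompt could be assembled in the role/content message format used by chat completion APIs. The function name `build_grounded_messages` and the instruction wording are hypothetical; the Studio option that limits responses to your data handles this internally, and its actual prompt is not shown here.

```python
def build_grounded_messages(question: str, sources: list[str], strict: bool = True) -> list[dict]:
    """Build a chat-style message list that grounds the answer in numbered sources.

    Hypothetical helper for illustration; not part of any Azure SDK.
    """
    # Number each retrieved passage so the model can cite it as [1], [2], ...
    context = "\n\n".join(f"[{i}] {text}" for i, text in enumerate(sources, start=1))
    instructions = "Answer the question using the numbered sources below and cite them."
    if strict:
        # Assumed wording that approximates "answer only from your data".
        instructions += (
            " Use only information from these sources. If the answer is not in them,"
            " reply that the information is not available in the provided data."
        )
    return [
        {"role": "system", "content": f"{instructions}\n\nSources:\n{context}"},
        {"role": "user", "content": question},
    ]


msgs = build_grounded_messages(
    "Who wrote the e-book?",
    ["Written by Jeremy Likness and Cecil Phillip."],
)
print(msgs[0]["content"])
```

With `strict=False` the model is still given the sources but is free to fill gaps from its general knowledge, which is roughly the difference observed before and after limiting responses to the uploaded content.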

Read more

Enterprise data often runs to large volumes, so it is not practical to pass all of it to these models in a prompt. Instead, this setup uses Azure services to build a searchable repository of your knowledge base and uses Azure OpenAI models to interact with it in natural language.
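Because a prompt has a fixed size limit, a large document has to be split into chunks, and only as many chunks as fit the budget can be sent with each question. The sketch below illustrates that idea. It counts words as a rough stand-in for tokens (real tokenizers count differently), and the function names, chunk size, and overlap are hypothetical choices, not values used by Azure OpenAI.

```python
def chunk_words(text: str, max_words: int = 200, overlap: int = 20) -> list[str]:
    """Split text into word-based chunks; consecutive chunks share `overlap` words."""
    if max_words <= 0 or not 0 <= overlap < max_words:
        raise ValueError("require max_words > 0 and 0 <= overlap < max_words")
    words = text.split()
    step = max_words - overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + max_words]))
        # Stop once this chunk reaches the end of the text.
        if start + max_words >= len(words):
            break
    return chunks


def fit_to_budget(chunks: list[str], budget_words: int) -> list[str]:
    """Keep chunks in the given (e.g. relevance) order until the word budget is used up."""
    selected, used = [], 0
    for chunk in chunks:
        size = len(chunk.split())
        if used + size > budget_words:
            break
        selected.append(chunk)
        used += size
    return selected


text = " ".join(f"w{i}" for i in range(10))
chunks = chunk_words(text, max_words=4, overlap=1)
print(chunks)                    # ['w0 w1 w2 w3', 'w3 w4 w5 w6', 'w6 w7 w8 w9']
print(fit_to_budget(chunks, 8))  # ['w0 w1 w2 w3', 'w3 w4 w5 w6']
```

The overlap keeps a sentence that straddles a chunk boundary visible in both neighbors, at the cost of some duplicated text.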

Azure OpenAI Service on your own data uses Azure Cognitive Search in the background to index and rank your custom data, and a storage account to host your content (.txt, .md, .html, .pdf, .docx, .pptx). The data source grounds the model's responses in your specific data. You can use an existing Azure Cognitive Search index or Azure Storage container, or upload local files, as the source from which the grounding data is built. Your data is stored securely in your Azure subscription.
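To illustrate what "index and rank" means here, the sketch below scores text chunks against a question with BM25, a standard full-text ranking function. It is a toy stand-in for a search index, not the Azure Cognitive Search implementation: the real service adds analyzers, stemming, and optional semantic ranking. The function names and the `k1`/`b` defaults are assumptions chosen as common BM25 settings.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and keep alphanumeric runs; a real index also stems and drops stop words.
    return re.findall(r"[a-z0-9]+", text.lower())


def bm25_rank(query: str, docs: list[str], k1: float = 1.5, b: float = 0.75) -> list[tuple[int, float]]:
    """Return (doc_index, score) pairs sorted by descending BM25 score."""
    tokenized = [tokenize(d) for d in docs]
    n = len(tokenized)
    avgdl = sum(len(t) for t in tokenized) / n if n else 0.0
    # Document frequency: how many documents contain each term.
    df = Counter(term for toks in tokenized for term in set(toks))
    query_terms = set(tokenize(query))
    scores = []
    for i, toks in enumerate(tokenized):
        tf = Counter(toks)
        score = 0.0
        for term in query_terms:
            if term not in tf:
                continue
            # BM25 IDF with +1 inside the log so it stays positive.
            idf = math.log((n - df[term] + 0.5) / (df[term] + 0.5) + 1)
            freq = tf[term]
            # Length normalization penalizes long chunks that mention a term only once.
            denom = freq + k1 * (1 - b + b * len(toks) / avgdl)
            score += idf * freq * (k1 + 1) / denom
        scores.append((i, score))
    return sorted(scores, key=lambda pair: pair[1], reverse=True)


chunks = [
    "The CQRS pattern separates reads from writes.",
    "Scheduling uses timer triggers in Azure Functions.",
    "Event-based processing reacts to queue messages.",
]
print(bm25_rank("what is CQRS", chunks)[0])  # the CQRS chunk (index 0) ranks first
```

The top-ranked chunks are what would be passed to the chat model as grounding, rather than the whole document.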

There is also an Enterprise GPT demo that lets you assemble all the Azure building blocks yourself. Pablo Castro walks through the detailed steps in an in-depth blog post here.

Getting started directly from Azure OpenAI Studio lets you iterate on your ideas quickly. At the time of writing, the completions playground offers 23 sample use cases that take advantage of different models in Azure OpenAI:

- Summarize issue resolution from conversation

- Summarize key points from financial report (extractive)

- Summarize an article (abstractive)

- Generate product name ideas

- Generate an email

- Generate a product description (bullet points)

- Generate a listicle-style blog

- Generate a job description

- Generate a quiz

- Classify Text

- Classify and detect intent

- Cluster into undefined categories

- Analyze sentiment with aspects

- Extract entities from text

- Parse unstructured data

- Translate text

- Natural Language to SQL

- Natural language to Python

- Explain a SQL query

- Question answering

- Generate insights

- Chain of thought reasoning

- Chatbot

Resources

Here are a few resources to help you get started with Azure OpenAI:

- Apply for access to Azure OpenAI

- Azure OpenAI Service - Documentation, quickstarts, API reference - Azure Cognitive Services | Microsoft Learn

- Introduction to Azure OpenAI Service - Training | Microsoft Learn

- GitHub - Azure/azure-openai-samples

- Revolutionize your Enterprise Data with ChatGPT
