Tutorial: Blob backup and restore using Azure Backup via Azure CLI

Credits: Kartik Pullabhota (Sr. PM for Automation, HANA and Database backup using Azure Backup) for SME input and Swathi Dhanwada (Customer Engineer, Tech community) for testing.

Prerequisites

If you don't already have an Azure subscription, create a free account before you begin.

Azure CLI:

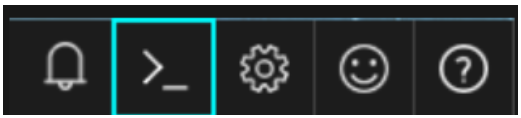

- Launch Cloud Shell from the top navigation bar of the Azure portal.

- Select a subscription to create a storage account and Microsoft Azure Files share.

- Select Create storage.

- After the storage is created, check that the environment drop-down on the left side of the shell window is set to Bash.

Note: Support for Azure Blobs backup and restore via the CLI is in preview and is available as an extension in Azure CLI version 2.15.0 and later. The extension is installed automatically when you run an az dataprotection command. Learn more about extensions.

Create resource group:

- To create a resource group from the Bash session within Cloud Shell, run the following:

RGNAME="<your-resource-group-name>"

LOCATION="<your-location>"

az group create --name $RGNAME --location $LOCATION

- To retrieve properties of the newly created resource group, run the following:

az group show --name $RGNAME

Create storage account

- Create a general-purpose storage account with the az storage account create command. The general-purpose storage account can be used for all four services: blobs, files, tables, and queues.
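A minimal sketch of this step, assuming you choose the account name, resource group, and location yourself. The hypothetical helper is_valid_storage_account_name checks the Azure naming rule (3 to 24 lowercase letters and digits) before the hypothetical create_storage_account wrapper calls az storage account create. Standard_LRS and StorageV2 (general-purpose v2) are example choices for this sketch, not requirements stated in this tutorial.

```shell
# Sketch: validate a storage account name, then create a general-purpose v2 account.
# SKU and kind below are example values chosen for this sketch.

# Succeeds only for 3-24 characters of lowercase ASCII letters and digits.
# LC_ALL=C makes [a-z] mean plain ASCII regardless of the shell's locale.
is_valid_storage_account_name() {
  printf '%s\n' "$1" | LC_ALL=C grep -Eq '^[a-z0-9]{3,24}$'
}

# Hypothetical wrapper: calls Azure only when you invoke it.
create_storage_account() {
  local name="$1" rg="$2" location="$3"
  if ! is_valid_storage_account_name "$name"; then
    echo "invalid storage account name: $name" >&2
    return 1
  fi
  az storage account create \
    --name "$name" \
    --resource-group "$rg" \
    --location "$location" \
    --sku Standard_LRS \
    --kind StorageV2
}
```

For example: create_storage_account mystorageacct123 "$RGNAME" "$LOCATION"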

Create storage container

- Create a container for storing blobs with the az storage container create command.

az storage container create \
  --account-name <storage-account> \
  --name <container> \
  --auth-mode login

Create a sample file (blob) and upload to container

- To upload a blob to a storage container, you need the Storage Blob Data Contributor role. The --auth-mode login commands in this tutorial, including the container creation above, use your Azure AD account to authorize data operations, so assign the Storage Blob Data Contributor role to yourself before you run them. Even if you are the account owner, you need explicit permissions to perform data operations against the storage account. For more information about assigning Azure roles, refer to Assign an Azure role for access to blob data.

az role assignment create \
  --role "Storage Blob Data Contributor" \
  --assignee "<object-id>" \
  --scope "/subscriptions/<subscription>/resourceGroups/<resource-group>/providers/Microsoft.Storage/storageAccounts/<storage-account>"

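Note: To retrieve the object ID of the signed-in user, run the following command. In Azure CLI 2.37.0 and later, which use Microsoft Graph, the property is named id; on earlier versions, query objectId instead.

az ad signed-in-user show --query id -o tsv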
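The two commands above can be combined into a small script. This is a sketch: storage_account_id and assign_blob_data_contributor_to_me are hypothetical helper names, and the --query id form assumes Azure CLI 2.37.0 or later (earlier versions expose objectId).

```shell
# Sketch: grant yourself Storage Blob Data Contributor on one storage account.

# Build the ARM resource ID of a storage account (used as the role assignment scope).
storage_account_id() {
  printf '/subscriptions/%s/resourceGroups/%s/providers/Microsoft.Storage/storageAccounts/%s\n' \
    "$1" "$2" "$3"
}

# Hypothetical wrapper: calls Azure only when you invoke it.
assign_blob_data_contributor_to_me() {
  local sub="$1" rg="$2" sa="$3" me
  # Azure CLI 2.37.0+ exposes the signed-in user's object ID as "id".
  me=$(az ad signed-in-user show --query id -o tsv) || return 1
  az role assignment create \
    --role "Storage Blob Data Contributor" \
    --assignee "$me" \
    --scope "$(storage_account_id "$sub" "$rg" "$sa")"
}
```

For example: assign_blob_data_contributor_to_me "$(az account show --query id -o tsv)" "$RGNAME" <storage-account>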

- To create a sample file, open it in the vi editor by running vi with the file name. For instance:

vi helloworld

- When the file opens, press i (or the Insert key) to enter insert mode and type some content, for instance Hello world. Then press Esc, type :x, and press Enter to save the file and exit.

- To upload a blob to the container you created earlier, use the az storage blob upload command.

az storage blob upload \
  --account-name <storage-account> \
  --container-name <container> \
  --name helloworld \
  --file helloworld \
  --auth-mode login

- To verify that the blob was uploaded, list the blobs in the container:

az storage blob list \
  --account-name <storage-account> \
  --container-name <container> \
  --output table \
  --auth-mode login
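If you prefer not to use vi, a sketch like the following writes the same sample file non-interactively and then runs the upload and list commands above. make_sample_file and upload_and_list are hypothetical helper names.

```shell
# Sketch: non-interactive alternative to editing the file in vi.

# Write the sample content to the file named by the first argument.
make_sample_file() {
  printf 'Hello world\n' > "$1"
}

# Hypothetical wrapper: upload the file as a blob of the same name, then list the container.
upload_and_list() {
  local account="$1" container="$2" file="$3"
  az storage blob upload \
    --account-name "$account" \
    --container-name "$container" \
    --name "$file" \
    --file "$file" \
    --auth-mode login &&
  az storage blob list \
    --account-name "$account" \
    --container-name "$container" \
    --output table \
    --auth-mode login
}
```

For example: make_sample_file helloworld && upload_and_list <storage-account> <container> helloworld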

Create backup vault

- Use the az dataprotection backup-vault create command to create a Backup vault. Learn more about creating a Backup vault.

az dataprotection backup-vault create -g <rgname> --vault-name <backupvaultname> -l westus --type SystemAssigned --storage-settings datastore-type="VaultStore" type="LocallyRedundant"

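Create backup policy for Azure Blobs

- Create a backup policy to define how long backups are retained. Because operational backup for blobs is continuous, the default AzureBlob policy template sets a retention duration rather than a backup schedule; you can edit BlobPolicy.json before creating the policy.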

az dataprotection backup-policy get-default-policy-template --datasource-type AzureBlob > BlobPolicy.json

az dataprotection backup-policy create -g <rgname> --vault-name <backupvaultname> -n mypolicy --policy BlobPolicy.json
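The backup-instance initialize step needs the full resource ID of this policy. As a sketch, the hypothetical helper backup_policy_id composes it from its parts (assuming the standard Microsoft.DataProtection resource ID layout), and the hypothetical show_backup_policy_id reads it back from Azure instead.

```shell
# Compose the resource ID of a backup policy inside a Backup vault.
backup_policy_id() {
  printf '/subscriptions/%s/resourceGroups/%s/providers/Microsoft.DataProtection/backupVaults/%s/backupPolicies/%s\n' \
    "$1" "$2" "$3" "$4"
}

# Hypothetical wrapper: ask Azure for the ID of an existing policy.
show_backup_policy_id() {
  az dataprotection backup-policy show \
    --resource-group "$1" --vault-name "$2" --name "$3" \
    --query id -o tsv
}
```

For example: show_backup_policy_id <rgname> <backupvaultname> mypolicy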

Grant required permissions to backup vault

- Operational backup also protects the storage account (that contains the blobs to be protected) from any accidental deletions by applying a Backup-owned Delete Lock. This requires the Backup vault to have certain permissions on the storage accounts that need to be protected. For convenience of use, these minimum permissions have been consolidated under the Storage Account Backup Contributor role.

- You can assign this role to the Backup vault's managed identity on each storage account by using the Azure portal, PowerShell, or the Azure CLI.
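For the CLI route, a sketch along these lines should work, assuming the vault was created with --type SystemAssigned as above, so it has a system-assigned managed identity. The helper names are hypothetical; the role name is the one given above.

```shell
# Sketch: give the Backup vault's managed identity the
# Storage Account Backup Contributor role on the storage account to protect.

# Build the ARM resource ID of a storage account (the role assignment scope).
storage_account_id() {
  printf '/subscriptions/%s/resourceGroups/%s/providers/Microsoft.Storage/storageAccounts/%s\n' \
    "$1" "$2" "$3"
}

# Hypothetical wrapper: calls Azure only when you invoke it.
grant_vault_backup_role() {
  local sub="$1" vault_rg="$2" vault="$3" sa_rg="$4" sa="$5" principal
  # Principal ID of the vault's system-assigned identity.
  principal=$(az dataprotection backup-vault show \
    --resource-group "$vault_rg" --vault-name "$vault" \
    --query identity.principalId -o tsv) || return 1
  az role assignment create \
    --role "Storage Account Backup Contributor" \
    --assignee-object-id "$principal" \
    --assignee-principal-type ServicePrincipal \
    --scope "$(storage_account_id "$sub" "$sa_rg" "$sa")"
}
```

Role assignments can take a few minutes to propagate, so allow a short wait before configuring backup.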

Configure backup for Azure Blobs

- Once the permissions are set, prepare the backup configuration by passing the resource IDs of the backup policy created earlier (mypolicy) and of the storage account to the az dataprotection backup-instance initialize command, which writes the request to backup_instance.json.

az dataprotection backup-instance initialize --datasource-type AzureBlob -l southeastasia --policy-id "/subscriptions/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxxxxxx/resourceGroups/<rgname>/providers/Microsoft.DataProtection/backupVaults/<backupvaultname>/backupPolicies/mypolicy" --datasource-id "/subscriptions/xxxxxxxx-xxxx-xxxx-xxxx/resourcegroups/<storage-account-rg>/providers/Microsoft.Storage/storageAccounts/<storage-account-name>" > backup_instance.json

- Protect the storage account by using the az dataprotection backup-instance create command.

az dataprotection backup-instance create -g <rgname> --vault-name <backupvaultname> --backup-instance backup_instance.json
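As a sketch, the two commands above can be wrapped so that the policy ID and storage account ID are composed from names rather than typed by hand. The function names and argument order are hypothetical; the ID layouts follow the resource IDs used in the commands above.

```shell
# Compose the resource ID of a backup policy inside a Backup vault.
backup_policy_id() {
  printf '/subscriptions/%s/resourceGroups/%s/providers/Microsoft.DataProtection/backupVaults/%s/backupPolicies/%s\n' \
    "$1" "$2" "$3" "$4"
}

# Compose the resource ID of a storage account.
storage_account_id() {
  printf '/subscriptions/%s/resourceGroups/%s/providers/Microsoft.Storage/storageAccounts/%s\n' \
    "$1" "$2" "$3"
}

# Hypothetical wrapper: generate backup_instance.json, then create the backup instance.
configure_blob_backup() {
  local sub="$1" rg="$2" vault="$3" policy="$4" sa_rg="$5" sa="$6" location="$7"
  az dataprotection backup-instance initialize \
    --datasource-type AzureBlob \
    -l "$location" \
    --policy-id "$(backup_policy_id "$sub" "$rg" "$vault" "$policy")" \
    --datasource-id "$(storage_account_id "$sub" "$sa_rg" "$sa")" \
    > backup_instance.json || return 1
  az dataprotection backup-instance create \
    -g "$rg" --vault-name "$vault" \
    --backup-instance backup_instance.json
}
```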

Restore Azure Blobs within a storage account

- As an initial step, list all backup instances within a vault using the az dataprotection backup-instance list command.

az dataprotection backup-instance list --resource-group <rgname> --vault-name <backupvaultname>

- Note the name of the relevant backup instance from the previous output and verify it by using the az dataprotection backup-instance show command.

az dataprotection backup-instance show --resource-group <rgname> --vault-name <backupvaultname> --name <backup-instance-name obtained from previous step>
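To pick out just the instance names, a JMESPath --query helps. The hypothetical backup_instance_id helper then composes the full backup instance ID that the item-recovery commands below take in --backup-instance-id. Both helpers are a sketch, not part of the CLI.

```shell
# Hypothetical wrapper: print only the names of the backup instances in a vault.
list_backup_instance_names() {
  az dataprotection backup-instance list \
    --resource-group "$1" --vault-name "$2" \
    --query "[].name" -o tsv
}

# Compose a backup instance's resource ID from subscription, resource group, vault and name.
backup_instance_id() {
  printf '/subscriptions/%s/resourceGroups/%s/providers/Microsoft.DataProtection/backupVaults/%s/backupInstances/%s\n' \
    "$1" "$2" "$3" "$4"
}
```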

Initialize Restore operation

- Because operational backup for blobs is continuous, there are no distinct recovery points to restore from. Instead, fetch the valid time range within which blobs can be restored to any point in time. To check for valid time ranges within the last 30 days, use the az dataprotection restorable-time-range find command as shown below, passing the backup instance identified in the earlier step.

az dataprotection restorable-time-range find --start-time 2021-05-30T00:00:00 --end-time 2021-05-31T00:00:00 --source-data-store-type OperationalStore -g <rgname> --vault-name <backupvaultname> --backup-instances <backup instance id retrieved from previous step>
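Rather than typing the timestamps by hand, the 30-day window can be computed. This sketch assumes GNU date (as in Cloud Shell); utc_days_ago and find_restorable_ranges are hypothetical helpers, and --backup-instances is the flag name used in the command above, which may differ in newer CLI versions (check --help).

```shell
# Print the UTC timestamp N days before now, in the format used above (GNU date syntax).
utc_days_ago() {
  date -u -d "$1 days ago" +%Y-%m-%dT%H:%M:%S
}

# Hypothetical wrapper: find restorable ranges over the last 30 days.
find_restorable_ranges() {
  local rg="$1" vault="$2" instance="$3"
  az dataprotection restorable-time-range find \
    --start-time "$(utc_days_ago 30)" \
    --end-time "$(utc_days_ago 0)" \
    --source-data-store-type OperationalStore \
    -g "$rg" --vault-name "$vault" \
    --backup-instances "$instance"
}
```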

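- Once you have chosen a point in time within a valid range, prepare the restore request. Use the az dataprotection backup-instance restore initialize-for-data-recovery command to restore all blobs, or the az dataprotection backup-instance restore initialize-for-item-recovery command to restore selected containers or prefix ranges. Each option below writes the request to restore.json.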

- Restoring all the blobs to a point-in-time

az dataprotection backup-instance restore initialize-for-data-recovery --datasource-type AzureBlob --restore-location southeastasia --source-datastore OperationalStore --target-resource-id "/subscriptions/xxxxxxxx-xxxx-xxxx-xxxx/resourcegroups/<rgname>/providers/Microsoft.Storage/storageAccounts/<storage-account-name>" --point-in-time 2021-06-02T18:53:44.4465407Z > restore.json

- Restoring selected containers

az dataprotection backup-instance restore initialize-for-item-recovery --datasource-type AzureBlob --restore-location southeastasia --source-datastore OperationalStore --backup-instance-id "/subscriptions/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxx/resourceGroups/<rgname>/providers/Microsoft.DataProtection/backupVaults/<backupvaultname>/backupInstances/<backup-instance-name>" --point-in-time 2021-06-02T18:53:44.4465407Z --container-list container1 container2 > restore.json

- Restoring containers using a prefix match

az dataprotection backup-instance restore initialize-for-item-recovery --datasource-type AzureBlob --restore-location southeastasia --source-datastore OperationalStore --backup-instance-id "/subscriptions/xxxxxxxx-xxxx-xxxx-xxxx-xxxxxxxx/resourceGroups/<rgname>/providers/Microsoft.DataProtection/backupVaults/<backupvaultname>/backupInstances/<backup-instance-name>" --point-in-time 2021-06-02T18:53:44.4465407Z --from-prefix-pattern container1/text1 container2/text4 --to-prefix-pattern container1/text4 container2/text41 > restore.json
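Assuming the from and to lists pair up by position, the command above restores the ranges container1/text1 to container1/text4 and container2/text4 to container2/text41. Assuming also the range semantics documented for blob point-in-time restore (lexicographic order, start inclusive, end exclusive), the hypothetical in_blob_range helper below sketches which blob paths fall inside one range.

```shell
# Sketch: succeed if a container/blob path lies in [start, end),
# comparing byte-wise (C locale) as an assumed lexicographic order.
in_blob_range() {
  local name="$1" start="$2" end="$3"
  # Exclusive end: a name equal to the end bound is outside the range.
  [ "$name" != "$end" ] || return 1
  # start <= name: the two lines are already in sorted order.
  printf '%s\n%s\n' "$start" "$name" | LC_ALL=C sort -C || return 1
  # name <= end (equality was ruled out above).
  printf '%s\n%s\n' "$name" "$end" | LC_ALL=C sort -C
}
```

Under these assumptions, container1/text10 falls inside the first range because the comparison is lexicographic rather than numeric, while container1/text4 itself is excluded.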

Note: The --point-in-time value is interpreted as UTC.
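Because the point in time is read as UTC, local times need converting first. A sketch assuming GNU date; to_utc_point_in_time is a hypothetical helper, and it emits whole seconds only, unlike the fractional-second examples above.

```shell
# Convert a date string with an explicit UTC offset into the
# UTC ISO 8601 form accepted by --point-in-time (GNU date syntax).
to_utc_point_in_time() {
  date -u -d "$1" +%Y-%m-%dT%H:%M:%SZ
}
```

For example, to_utc_point_in_time '2021-06-02 20:53:44 +0200' prints 2021-06-02T18:53:44Z.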

Trigger the restore

The following command triggers the restore operation:

az dataprotection backup-instance restore trigger -g <rgname> --vault-name <backupvaultname> --backup-instances <backup instance id retrieved from previous step> --restore-request-object restore.json
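A small guard avoids triggering a restore with a missing or empty request file, for instance when an initialize command failed. This is a sketch; require_nonempty_file and trigger_restore are hypothetical, and --backup-instances mirrors the flag used in the command above.

```shell
# Fail (with a message on stderr) if the file is missing or empty.
require_nonempty_file() {
  if [ ! -s "$1" ]; then
    echo "missing or empty restore request: $1" >&2
    return 1
  fi
}

# Hypothetical wrapper: check the request file, then trigger the restore.
trigger_restore() {
  local rg="$1" vault="$2" instance="$3" request="${4:-restore.json}"
  require_nonempty_file "$request" || return 1
  az dataprotection backup-instance restore trigger \
    -g "$rg" --vault-name "$vault" \
    --backup-instances "$instance" \
    --restore-request-object "$request"
}
```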

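Track jobs

- Track jobs by using the az dataprotection job list command, which lists the jobs in a vault, or the az dataprotection job list-from-resourcegraph command, which queries Azure Resource Graph; az dataprotection job show returns the details of a particular job. The following example lists the restore jobs for Azure Blobs.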

az dataprotection job list-from-resourcegraph --datasource-type AzureBlob --operation Restore

Additional Resources

- https://docs.microsoft.com/en-us/azure/backup/blob-backup-overview

- https://docs.microsoft.com/en-us/azure/backup/backup-blobs-storage-account-cli

- https://docs.microsoft.com/en-us/azure/backup/restore-blobs-storage-account-cli

- https://docs.microsoft.com/en-us/azure/backup/blob-backup-support-matrix
