Stream Data to Your Data Lake Using Azure Data Explorer

Summary

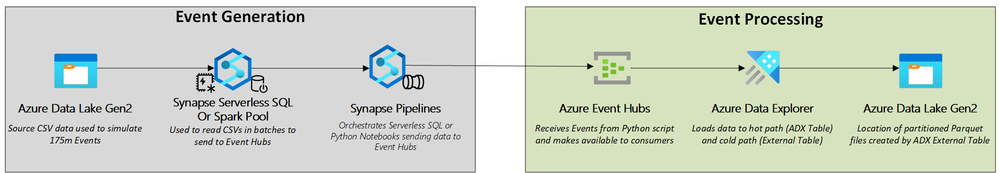

Microsoft Azure Data Explorer is well suited to ingesting and processing streaming data. Azure Data Lake Storage is well suited to storing large amounts of data. The end-to-end conceptual architecture contained in the GitHub Repository accompanying this blog deploys an environment that plays to the strengths of both services, using Azure Synapse Analytics to simulate streaming data to them. In this architecture, the core strength of Azure Data Explorer is performant real-time storage and analytics. The core strength of Azure Data Lake Storage is cost-effective and efficient long-term storage.

Target Audience

The architecture shown above targets Data Engineering and Analytics teams that need to support both real-time analytics (hot path) and longer-running batch analytics (cool path). Longer-running batch analytics could include Machine Learning training, Enterprise Data Warehouse loads, or querying data in place while enriching it with data from batch sources. This architecture can support concepts like Lambda, Kappa, or Lakehouse.

Why Azure Data Explorer?

Performance in the Hot Path

Azure Data Explorer is extremely performant with telemetry and log data per this benchmark. To take advantage of this performance, streaming data must be ingested into Azure Data Explorer Tables so that the benefits of column store, text indexing, and sharding can be reaped. Data residing in the Azure Data Explorer Tables can be consumed by popular analytics services such as Spark, Power BI, or other services that can consume ODBC sources.

Performance and Control in the Cool Path

Azure Data Explorer offers the ability to control data going into the Cool Path/Data Lake at a granular level using Continuous Export and External Tables. External Tables allow you to define a location and format to store data in the lake or use a location that already exists. Continuous Export allows you to define the interval at which data is exported to the lake. Combining these two features allows you to create Parquet files that are partitioned and optimally sized for analytical queries while avoiding the small file problem, provided you can accept the latency that comes with batching the data for larger writes.
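The trade-off between export interval and file size can be made concrete with some rough arithmetic. The sketch below is only an illustration: the function name `estimate_export`, the ingestion rate of 600 MB per hour, and the assumption that each export run writes exactly one file per partition are all hypothetical, and the estimate ignores Parquet compression. Actual file counts and sizes depend on how the service splits its output.

```python
from dataclasses import dataclass


@dataclass
class ExportEstimate:
    files_per_day: float
    mb_per_file: float


def estimate_export(ingest_mb_per_hour: float,
                    interval_minutes: float,
                    partitions_per_run: int = 1) -> ExportEstimate:
    """Estimate file count and size for a given export interval.

    Simplifying assumption (not taken from the article): every export run
    writes one file per partition, and data is spread evenly across them.
    """
    if interval_minutes <= 0 or partitions_per_run < 1:
        raise ValueError("interval must be positive and partitions >= 1")
    runs_per_day = 24 * 60 / interval_minutes
    mb_per_run = ingest_mb_per_hour * interval_minutes / 60
    return ExportEstimate(
        files_per_day=runs_per_day * partitions_per_run,
        mb_per_file=mb_per_run / partitions_per_run,
    )


if __name__ == "__main__":
    # Hypothetical workload: 600 MB of raw data ingested per hour.
    for minutes in (1, 15, 60):
        est = estimate_export(600, minutes)
        print(f"{minutes:>2} min interval: "
              f"{est.files_per_day:.0f} files/day, "
              f"{est.mb_per_file:.0f} MB per file")
```

Under these assumptions, a one-minute interval produces many small files while an hourly interval produces a few large ones, at the cost of data reaching the lake up to an hour later.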

Easy Ingestion from Azure Event Hubs

Azure Event Hubs often serves as the starting place for big data streaming, and Azure Data Explorer integrates very well with it. In the GitHub repository tied to this architecture, you'll find a guide to ingesting data into Azure Data Explorer from Event Hubs through an easy-to-use wizard. Even if a higher degree of configuration is needed up front, there's an efficient way of creating this integration in the Azure Portal.

Flexibility for Other Use Cases

Azure Data Explorer has a dizzying number of features beyond what is demonstrated here. Other documented use-case-based solution architectures include Big Data Analytics, IoT Analytics, Geospatial Processing and Analytics, and more at this link. The Kusto query language employed by Azure Data Explorer has a lot of useful functions out of the box where other analytics tools might require a greater degree of customization. Some examples are geospatial clustering, time-series analysis, and JSON parsing/querying. Finally, Azure Data Explorer also has a useful built-in visualization layer that can display real-time dashboards, shortening time to value when building out analytics.

What's in the Repo?

The repository contains all artifacts needed to create the conceptual architecture detailed in this post. Furthermore, the repository is friendly to Azure newcomers and gives detailed walkthroughs on the concepts below (and more!).

- Infrastructure As Code (IaC) Deployment via Bicep templates - The Bicep language is one of the best ways to use code to deploy resources in Azure. All resources for the architecture deployment and some of the configuration of the integration between those resources are contained in Bicep templates in the repository.

- Managed Identities to secure communications between resources - Managed Identities can be used to simplify the handling of credentials that Azure resources need to communicate with one another. The repository walks through the use of these identities so that Azure Data Explorer can read from Event Hubs, write to storage, and support other use cases.

- Introduction to Streaming Consumption in Azure - Azure Data Explorer and Event Hubs work together in this architecture to consume the events generated by Azure Synapse Analytics. The repository shows how to connect Event Hubs to other services, observe events, and use consumer groups. As previously covered, for Azure Data Explorer the repository shows how to consume Event Hub events, persist them to Azure Data Explorer tables, and persist them to Parquet files residing in Azure Data Lake Storage.

- Introduction to Concepts in Azure Synapse Analytics - Azure Synapse Analytics is used to generate events in this architecture. Although this is not the normal workload for Synapse, the repository walks through several higher-level concepts. Concepts introduced include data processing orchestration in Synapse Pipelines, querying Azure Data Lake Storage using Synapse Serverless SQL, and deploying Python code in Synapse Spark.
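To give a feel for the kind of simulated event stream the bullets above describe, here is a minimal sketch of a synthetic telemetry generator using only the Python standard library. It is not the repository's actual generator: the function names and the event fields (`deviceId`, `timestamp`, `temperature`) are hypothetical, and the step of sending the events to Event Hubs is deliberately left out.

```python
import json
import random
from datetime import datetime, timedelta, timezone


def generate_events(count, start, seed=0, device_count=3):
    """Yield `count` synthetic telemetry events, one per simulated second.

    The schema here is an assumption made for illustration only.
    """
    rng = random.Random(seed)  # seeded so repeated runs give the same data
    for i in range(count):
        yield {
            "deviceId": f"device-{rng.randrange(device_count)}",
            "timestamp": (start + timedelta(seconds=i)).isoformat(),
            "temperature": round(rng.uniform(15.0, 30.0), 2),
        }


def to_json_lines(events):
    """Serialize events as newline-delimited JSON, one event per line."""
    return "\n".join(json.dumps(event) for event in events)


if __name__ == "__main__":
    start = datetime(2024, 1, 1, tzinfo=timezone.utc)
    print(to_json_lines(generate_events(5, start)))
```

Each line of the output is one JSON event. In a real deployment the equivalent payloads would be published to an event hub rather than printed.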

Conclusion

Azure Data Explorer and Azure Data Lake Storage work well together to deliver a flexible streaming analytics environment. The Azure Data Explorer blog and Azure Storage blog are great places to monitor upcoming features and learn about detailed use cases. Try out the architecture in the GitHub repository today to learn how Azure Data Explorer can make your streaming analytics easier!
