A Reference Architecture for Siemens and Microsoft Customers in the Industrial AI Space

This article presents a reference architecture to enhance the compatibility of Siemens Industrial Artificial Intelligence (Industrial AI) products with Azure.

The reference architecture routes data from Siemens edge devices into Azure, so that Industrial AI edge applications and machine learning models can be monitored from one central location. It is designed to simplify the integration process and improve the overall user experience for Siemens and Microsoft customers.

Context

AI-driven shop floor applications must adhere to rigorous industrial operational requirements to prevent unnoticed failures that could disrupt production lines.

The reference architecture addresses these operational aspects of running machine learning (ML) models in shop floor environments. Observability data, such as machine learning inference logs and metrics, can be securely uploaded to the Azure Monitor platform, where it is used to build operational dashboards and to trigger notifications when thresholds are breached.
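
Threshold notifications of this kind typically aggregate a metric over a look-back window and fire when the aggregate crosses a limit. The following is a minimal plain-Python sketch of that evaluation logic, assuming a hypothetical inference-latency metric and illustrative window and threshold values. It is not the Azure Monitor alert-rule API; in Azure, alert rules are configured in Azure Monitor rather than written as code like this.

```python
from dataclasses import dataclass
from statistics import mean
from typing import Callable, Iterable


@dataclass
class Sample:
    timestamp: float  # seconds since epoch
    value: float      # hypothetical metric, e.g. inference latency in ms


def breaches_threshold(
    samples: Iterable[Sample],
    now: float,
    window_s: float,
    threshold: float,
    aggregate: Callable[[list], float] = mean,
) -> bool:
    """Return True if the aggregate of samples in the window (now - window_s, now]
    is greater than the threshold, mirroring the shape of a metric alert rule."""
    in_window = [s.value for s in samples if now - window_s < s.timestamp <= now]
    if not in_window:
        return False  # no data in the window: report "not breached"
    return aggregate(in_window) > threshold


if __name__ == "__main__":
    samples = [Sample(0, 40.0), Sample(60, 55.0), Sample(120, 180.0), Sample(180, 210.0)]
    # Samples at t=120 and t=180 fall in (60, 180]; their mean is 195 ms.
    print(breaches_threshold(samples, now=180, window_s=120, threshold=150.0))  # True
```

Swapping `aggregate` for `max` or a percentile function changes the rule from "average latency too high" to "any/most requests too slow" without touching the windowing logic.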

Customers who use the Azure cloud for developing, training, evaluating, registering, and packaging machine learning models can now securely and automatically deploy these models from the cloud to their factory sites, integrating them with the Siemens Industrial Edge ecosystem. This allows them to take advantage of automated pipelines for training machine learning models within Azure and deploying them to Siemens Industrial Edge, along with Industrial AI applications.

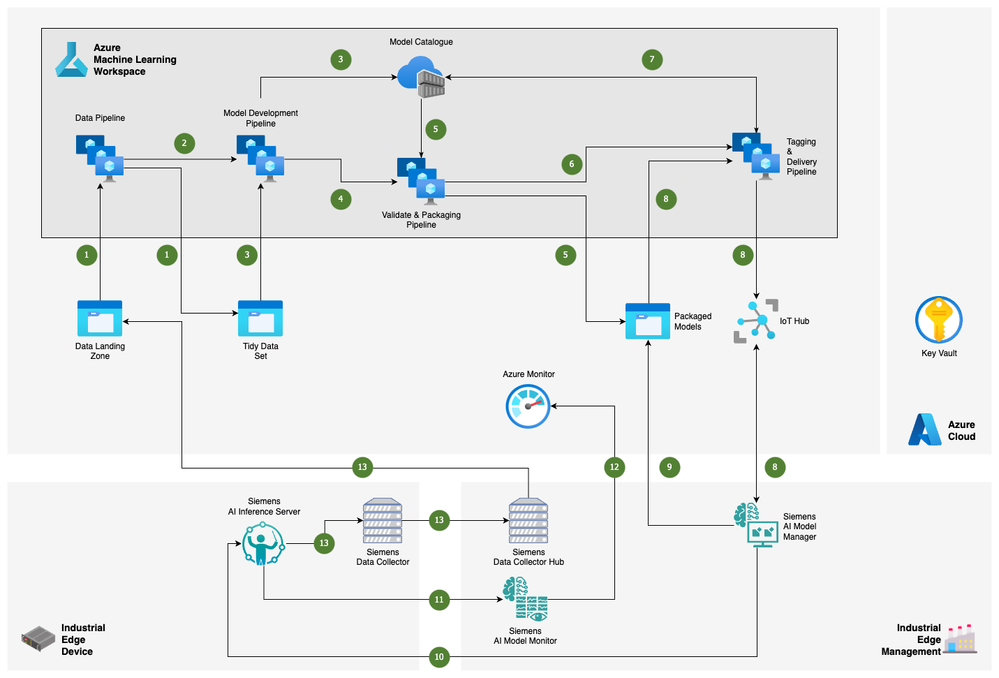

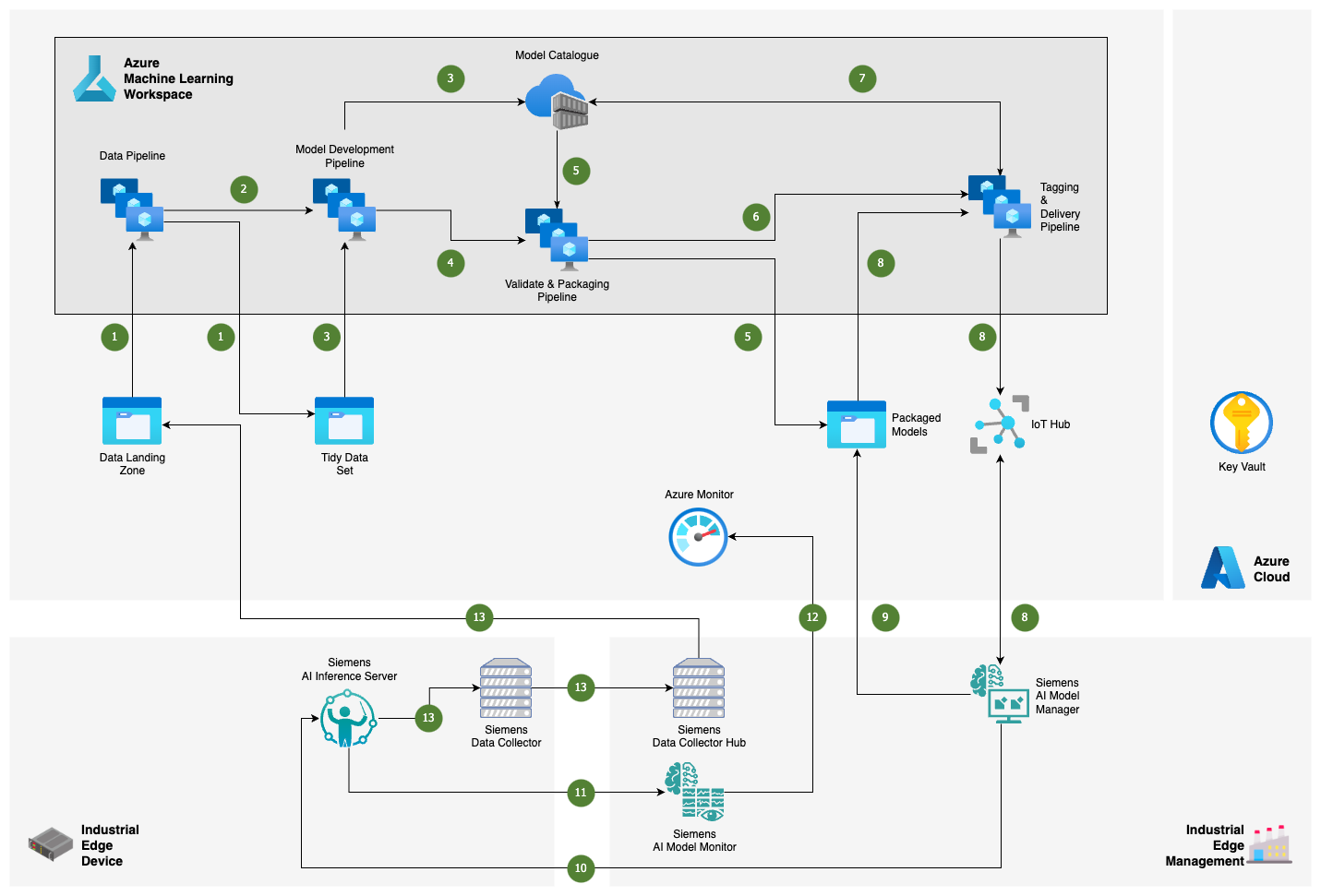

Architecture

Workflow

- When executed, the Data Pipeline:

  - Loads raw training data from a dedicated Data Landing Zone Storage Account.

  - Processes the raw training data into the format required for model training.

  - Saves the processed data into the Tidy Data Set Storage Account.

- After the raw data has been processed, the Model Development Pipeline is executed. It:

  - Loads the processed training data from the Tidy Data Set Storage Account.

  - Trains the model.

  - Saves the resulting model into the Model Catalogue.

- After the model has been trained, the Validate & Packaging Pipeline is executed. It:

  - Loads the model from the Model Catalogue.

  - Validates the model.

  - If the model passes validation, packages it into the format used by Siemens AI Model Manager (AIMM) and saves the package into the Packaged Models Storage Account.

- After the packaged model has been saved, the Tagging & Delivery Pipeline is executed. It loads the trained model from the Model Catalogue, tags it, and saves it back.

- The delivery process starts a communication loop through IoT Hub, in which the Azure Machine Learning pipeline and Siemens AI Model Manager exchange a sequence of messages via Event Hub to control the state of the delivery:

  - The Delivery Pipeline informs Siemens AIMM that a new model is ready for deployment.

  - Siemens AIMM analyses the state of the edge and, when ready, replies through IoT Hub with a request for the model.

  - The Delivery Pipeline generates a SAS (shared access signature) link for the package in the Packaged Models Storage Account and sends it back to Siemens AIMM.

  - On receiving the SAS link, Siemens AIMM downloads the latest packaged model from the Packaged Models Storage Account.

- Siemens AIMM runs an internal process to deploy the latest packaged model to the Siemens AI Inference Server (AIIS).

- Telemetry from Siemens AIIS is sent to a dedicated monitoring service, Siemens AI Model Monitor.

- Siemens AI Model Monitor aggregates the logs and metrics from edge devices and services and sends them to Azure Monitor through the OpenTelemetry Collector.

- Inference data produced by the model running in Siemens AI Inference Server is gathered by the Data Collector and passed through the Data Collector Hub for upload to the Data Landing Zone Storage Account, where it is used to retrain the model.
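
The delivery handshake in the workflow above can be pictured as a small state machine on the cloud side. The sketch below is a hypothetical model of it: the message types (`model_ready`, `model_request`, `package_link`) and the `make_sas_link` callable are illustrative assumptions, not the actual AIMM message schema. In a real pipeline, `make_sas_link` would produce a short-lived, read-only SAS URL for the package blob (for example with `generate_blob_sas` from the `azure-storage-blob` package), and the messages collected in `outbox` would be sent to AIMM as cloud-to-device messages through IoT Hub.

```python
from dataclasses import dataclass, field
from enum import Enum, auto
from typing import Callable, Dict, List


class DeliveryState(Enum):
    IDLE = auto()       # nothing announced yet
    ANNOUNCED = auto()  # "model ready" sent to AIMM, waiting for its request
    LINK_SENT = auto()  # SAS link for the package sent to AIMM


@dataclass
class DeliverySession:
    model_id: str
    state: DeliveryState = DeliveryState.IDLE
    # Messages that would be sent to AIMM as cloud-to-device messages
    outbox: List[Dict[str, str]] = field(default_factory=list)

    def announce(self) -> None:
        """Tell AIMM that a new packaged model is ready for deployment."""
        if self.state is not DeliveryState.IDLE:
            raise RuntimeError(f"cannot announce from state {self.state.name}")
        self.outbox.append({"type": "model_ready", "model_id": self.model_id})
        self.state = DeliveryState.ANNOUNCED

    def on_device_message(
        self, message: Dict[str, str], make_sas_link: Callable[[str], str]
    ) -> None:
        """Handle a message from AIMM; answer a matching model request with a SAS link."""
        if message.get("type") != "model_request" or message.get("model_id") != self.model_id:
            return  # unrelated device-to-cloud traffic
        if self.state is not DeliveryState.ANNOUNCED:
            return  # duplicate or out-of-order request: ignored in this sketch
        url = make_sas_link(self.model_id)
        self.outbox.append({"type": "package_link", "model_id": self.model_id, "url": url})
        self.state = DeliveryState.LINK_SENT


if __name__ == "__main__":
    session = DeliverySession(model_id="model-42")
    session.announce()
    session.on_device_message(
        {"type": "model_request", "model_id": "model-42"},
        make_sas_link=lambda mid: f"https://example.invalid/packages/{mid}.zip?sig=REDACTED",
    )
    print(session.state.name, [m["type"] for m in session.outbox])
```

Generating the SAS link only after AIMM asks for the model keeps the link's validity window aligned with the moment the edge is actually ready to download.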

Components

Siemens Industrial Edge Management Components:

- AI Model Monitor: Collects telemetry, logs, and metrics on model performance and edge devices.

- AI Model Manager (AIMM): Orchestrates edge devices and distributes models to them.

- AI Inference Server: An application that runs on Siemens Industrial Edge devices and executes deployed AI models for inference using its built-in Python interpreter.

- Data Collector*: Collects inference data from AI Inference Server for further processing.

- Data Collector Hub*: Collects inference data from all Data Collectors to pass to the cloud for further processing.

* Descriptive names, subject to change.

Azure Cloud Components:

- IoT Hub: Model delivery communication between cloud and edge goes through IoT Hub. AI Model Manager is registered in IoT Hub as an "edge device", which lets the cloud service send it C2D (cloud-to-device) messages.

- Azure Machine Learning Workspace: The central place where teams create, organise, and host machine learning artifacts and tasks, including jobs, pipelines, data assets, models, and endpoints.

- Azure Monitor: Provides insight into the performance and health of applications and resources, with tools to collect, analyse, and act on telemetry data.

- Storage Accounts: Azure Storage accounts provide scalable cloud storage for data such as blobs, files, queues, and tables. In this architecture they hold the landing-zone data, the tidy data set, and the packaged models.

- Azure Key Vault: A cloud service that securely stores and manages sensitive information such as secrets, encryption keys, and certificates, with centralised access control and audit trails.

Scenario Details

Siemens' current Industrial AI portfolio does not integrate seamlessly with Azure services. Siemens aims to use Azure for enterprise cloud workloads to enhance its product offerings to customers.

Siemens wanted to offer edge products that customers can connect to their own Azure infrastructure. The objective of this reference architecture is to integrate edge and cloud systems while implementing a cloud reference model to enhance compatibility.

The reference architecture is built to address the following main challenges:

- Machine learning models that run on Siemens Industrial AI apps are not visible in Azure. The goal is to design a reference architecture that delivers trained models from the cloud to the edge, and to build an MLOps pipeline in the cloud.

- The goal is to design a solution that ingests edge logs and metrics into the cloud.

This example architecture is divided into two operational areas:

- Model deployment from Azure to Industrial Edge: Deploy models trained with Azure Machine Learning to the on-premises AI Model Manager, enabling model delivery from the cloud to the factory. Implement Machine Learning Operations (MLOps) pipelines in Azure for automated, repeatable processes such as model training, evaluation, and registration. Automate the secure deployment of model packages from the cloud to the factory, including an approval workflow.

- Telemetry (logs and metrics) upload from edge to cloud (observability): Push inference logs and metrics to the cloud so that customers can monitor their edge applications centrally in Azure instead of logging in to each edge device.
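
The chained MLOps pipelines, with a validation gate and the approval workflow mentioned above, can be sketched as a single function that composes the stages. Each stage is passed in as a callable, and placing the approval check between packaging and delivery is an assumption made for illustration; in Azure Machine Learning these stages would be separate pipeline jobs rather than Python functions.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class ChainResult:
    status: str                        # "delivered", "rejected_validation" or "awaiting_approval"
    package_uri: Optional[str] = None  # location of the packaged model, if one was produced


def run_mlops_chain(
    prepare_data: Callable[[], str],   # Data Pipeline: returns tidy data set location
    train: Callable[[str], str],       # Model Development Pipeline: returns model id
    validate: Callable[[str], bool],   # validation gate
    package: Callable[[str], str],     # AIMM packaging: returns package location
    approved: Callable[[str], bool],   # approval workflow (assumed placement)
    deliver: Callable[[str], None],    # Tagging & Delivery Pipeline
) -> ChainResult:
    tidy_data = prepare_data()
    model_id = train(tidy_data)
    if not validate(model_id):
        # A model that fails validation is never packaged or delivered
        return ChainResult("rejected_validation")
    package_uri = package(model_id)
    if not approved(model_id):
        # The package exists but waits for a human decision before reaching the edge
        return ChainResult("awaiting_approval", package_uri)
    deliver(package_uri)
    return ChainResult("delivered", package_uri)
```

Passing stub callables makes the gating logic easy to test independently of Azure, for example `run_mlops_chain(lambda: "tidy", lambda d: "model-1", lambda m: True, lambda m: "pkg/" + m, lambda m: True, print)`.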

Observability Architecture

To transfer logs and metrics reliably from edge to cloud, the architecture uses the Azure Monitor exporter of the OpenTelemetry Collector together with the AAD Authentication Proxy. Both components are deployed as part of AI Model Monitor. This setup exports logs and metrics to Application Insights (and thus to its underlying Log Analytics workspace), with an Entra ID (formerly Azure AD) identity as the authentication mechanism.

Telemetry flows from the OT layer to an OpenTelemetry Collector instance in the IT layer, which exports it to Application Insights using a service principal identity obtained through the AAD Authentication Proxy. This replaces authentication based on a shared secret (the instrumentation key) with a certificate-backed Entra ID identity. Generating and distributing the certificates, creating the service principals, and assigning the relevant roles and permissions were automated with an Azure DevOps pipeline. The reference architecture follows Well-Architected Framework principles such as Security, Reliability, and Cost Optimisation.
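
Conceptually, the authentication proxy obtains an Entra ID access token for the service principal and attaches it to each export request, so the exporter no longer depends on the instrumentation key alone. The sketch below shows that token-caching pattern only; it does not reproduce the AAD Authentication Proxy's actual implementation. The token provider (which would wrap a certificate-based client-credentials flow) is a hypothetical injected callable, and the five-minute refresh margin is an illustrative choice.

```python
import time
from typing import Callable, Dict, Optional, Tuple

# Hypothetical: returns (access_token, expiry as epoch seconds), e.g. by wrapping a
# certificate-based client-credentials flow for the service principal.
TokenProvider = Callable[[], Tuple[str, float]]


class BearerTokenInjector:
    """Attach a cached Entra ID access token to outgoing telemetry export requests."""

    def __init__(
        self,
        provider: TokenProvider,
        refresh_margin_s: float = 300.0,
        clock: Callable[[], float] = time.time,
    ) -> None:
        self._provider = provider
        self._margin = refresh_margin_s  # refresh this long before expiry
        self._clock = clock
        self._token: Optional[str] = None
        self._expires_at = 0.0

    def _current_token(self) -> str:
        # Fetch a new token if none is cached or the cached one is about to expire
        if self._token is None or self._clock() >= self._expires_at - self._margin:
            self._token, self._expires_at = self._provider()
        return self._token

    def prepare_headers(self, headers: Dict[str, str]) -> Dict[str, str]:
        """Return a copy of the request headers with an Authorization header added."""
        out = dict(headers)
        out["Authorization"] = f"Bearer {self._current_token()}"
        return out
```

Caching the token and refreshing it slightly before expiry avoids a token request per telemetry batch while ensuring exports never go out with an expired credential.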

Considerations

These considerations implement the pillars of the Azure Well-Architected Framework, which is a set of guiding tenets that you can use to improve the quality of a workload. For more information, see Microsoft Azure Well-Architected Framework.

1. Reliability

Reliability ensures your application can meet the commitments you make to your customers. For more information, see Overview of the reliability pillar.

In your solution, consider using Azure availability zones, which are unique physical locations within the same Azure region.

Plan for disaster recovery and account failover.

2. Security

Security provides assurances against deliberate attacks and the abuse of your valuable data and systems. For more information, see Overview of the security pillar.

- Use Microsoft Entra ID for identity and access control.

- Perform threat modelling in the planning phase of the solution to secure the system and reduce risk. For more information on this technique, see Microsoft Security Development Lifecycle Threat Modelling.

- Consider using Azure Key Vault to manage sensitive information such as connection strings, passwords, and keys. The key vault configuration can be further hardened through its soft delete, logging, and access control settings.

- Regulate access and permissions to resources by applying the principle of least privilege with Azure role-based access control (RBAC). For more information on how to manage access and permissions in Azure, see What is Azure role-based access control (Azure RBAC)? | Microsoft Learn.

- Use virtual networks and private endpoints to secure cloud services and cloud-to-edge communication.

3. Cost optimisation

Cost optimisation explores ways to reduce unnecessary expenses and improve operational efficiencies. For more information, see Overview of the cost optimisation pillar.

The cost of this architecture depends on several factors:

- The cost of ingesting and storing observability data in Log Analytics. This depends on how much data is ingested and stored, on retention periods, and on archive policies. Ingestion is billed by volume, and ingested data is retained at no additional charge for the first 31 days, after which a monthly retention charge is incurred. For pricing details on Log Analytics, see Azure Monitor Pricing.

- Azure Machine Learning deploys a set of dependent resources that incur additional costs: Azure Key Vault, Application Insights, Azure Container Registry, and a Storage account. More information on Azure Machine Learning can be found at Azure Machine Learning as a data product for cloud-scale analytics. For pricing details, see Pricing - Azure Machine Learning | Microsoft Azure.

- This architecture uses the standard tier of IoT Hub because cloud-to-device messaging is not available in the basic tier. The total IoT Hub cost depends on the estimated number of messages sent per day. Pricing information for Azure IoT Hub can be found at Pricing - IoT Hub | Microsoft Azure.
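
As a rough way to reason about the Log Analytics factor, the sketch below estimates a steady-state monthly cost from daily ingestion volume and retention period. All prices and the free retention period are inputs to fill in from the Azure Monitor pricing page; a month is approximated as 30 days, and the model ignores commitment tiers, table plans, and archive pricing, so treat the result as an order-of-magnitude estimate only.

```python
def estimate_monthly_log_cost(
    daily_ingest_gb: float,
    retention_days: int,
    free_retention_days: int,
    ingest_price_per_gb: float,
    retention_price_per_gb_month: float,
) -> float:
    """Rough steady-state monthly cost of ingesting and retaining log data.

    Assumes a constant daily volume and a 30-day month. Prices are inputs:
    take them from the current Azure Monitor pricing page for your region.
    """
    ingest_cost = daily_ingest_gb * 30 * ingest_price_per_gb
    # In steady state, the data held past the free period is the data from the
    # last (retention_days - free_retention_days) days of ingestion.
    billable_days = max(0, retention_days - free_retention_days)
    retention_cost = daily_ingest_gb * billable_days * retention_price_per_gb_month
    return ingest_cost + retention_cost
```

For example, 2 GB per day kept for 91 days with 31 free days means about 120 GB sitting in paid retention at any time, on top of the 60 GB ingested each month.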

Estimate the costs of using the Azure services for your specific workloads using the Azure Pricing Calculator. See the costs for every Azure product at Pricing overview – How Azure pricing works | Microsoft Azure.

4. Operational Excellence

Operational excellence puts standardised processes in place to ensure workload quality and improve team cohesion. For more information, see Overview of the operational excellence pillar.

We recommend that you:

- Implement the infrastructure using Infrastructure as Code tools such as Bicep, Terraform or Azure Resource Manager templates (ARM templates).

- Consider applying DevOps security practices early in the life cycle of your application to minimise the impact of vulnerabilities and to bring security closer to the development team.

- Deploy the infrastructure using CI/CD processes. Consider using a solution such as GitHub Actions or Azure DevOps Pipelines.

- Consider introducing secret detection tools into the CI pipelines to ensure that secrets are not committed to the repository.
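
To illustrate what a secret-detection step looks for, here is a minimal regex-based scanner for a few Azure credential formats: storage account keys and shared access keys in connection strings, and SAS signatures in URLs. The patterns are simplified assumptions written for this example; a dedicated tool with maintained rule sets is the better choice in a real CI pipeline.

```python
import re
from typing import Dict, Iterable, List, Pattern, Tuple

# Simplified, illustrative patterns; real scanners ship larger, tested rule sets.
SECRET_PATTERNS: Dict[str, Pattern[str]] = {
    "storage_account_key": re.compile(r"AccountKey=[A-Za-z0-9+/=]{20,}"),
    "shared_access_key": re.compile(r"SharedAccessKey=[A-Za-z0-9+/=]{20,}"),
    "sas_signature": re.compile(r"[?&]sig=[A-Za-z0-9%+/=]{10,}"),
}


def scan_lines(lines: Iterable[str]) -> List[Tuple[int, str]]:
    """Return (line_number, rule_name) for every line that matches a rule."""
    findings = []
    for lineno, line in enumerate(lines, start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((lineno, name))
    return findings


def main(paths: List[str]) -> int:
    """Scan the given files; return 1 if anything was reported, else 0."""
    exit_code = 0
    for path in paths:
        with open(path, encoding="utf-8", errors="replace") as fh:
            for lineno, rule in scan_lines(fh):
                print(f"{path}:{lineno}: possible secret ({rule})")
                exit_code = 1
    return exit_code
```

A CI step could call `main()` with the changed files and fail the job when it returns 1.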

5. Performance Efficiency

Performance efficiency is the ability of your workload to scale to meet user demands. For more information, see Overview of the performance efficiency pillar.

- Select the IoT Hub SKU based on the expected number of messages exchanged per day.

- For production, use compute clusters rather than compute instances in Azure Machine Learning. Compute clusters can scale their compute resources to match demand.

- To scale Azure Machine Learning in line with business requirements, monitor the following KPIs:

  - The number of compute instances and compute cluster nodes in use.

  - The number of experiments run per day or per week.

  - The number of models deployed.
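
Sizing the IoT Hub tier comes down to arithmetic on the expected daily message volume. The sketch below uses the per-unit daily message quotas documented for the standard tiers (S1: 400,000; S2: 6,000,000; S3: 300,000,000) and the 4 KB metering block used by the paid tiers; verify both against the current IoT Hub documentation before relying on them, and note that the sketch assumes every message has the same size.

```python
import math
from typing import Dict

# Daily message quota per unit for IoT Hub standard tiers (check current docs).
STANDARD_TIER_DAILY_QUOTA: Dict[str, int] = {"S1": 400_000, "S2": 6_000_000, "S3": 300_000_000}
METER_BLOCK_BYTES = 4 * 1024  # paid tiers meter messages in 4 KB blocks


def metered_messages(count: int, size_bytes: int) -> int:
    """Metered message count for `count` messages of `size_bytes` each."""
    return count * max(1, math.ceil(size_bytes / METER_BLOCK_BYTES))


def units_needed(messages_per_day: int, message_size_bytes: int) -> Dict[str, int]:
    """Units required in each standard tier to stay within the daily quota."""
    metered = metered_messages(messages_per_day, message_size_bytes)
    return {
        tier: max(1, math.ceil(metered / quota))
        for tier, quota in STANDARD_TIER_DAILY_QUOTA.items()
    }


if __name__ == "__main__":
    print(units_needed(1_000_000, 1024))  # {'S1': 3, 'S2': 1, 'S3': 1}
```

Comparing the unit counts per tier against the per-unit prices shows whether several S1 units or a single S2 unit is cheaper for a given volume.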
