Managing SQL Server 2022 T-SQL snapshot backup with Azure NetApp Files snapshots

Table of Contents

SQL server backup and recovery

Common SQL server BU/R challenges

Azure NetApp Files storage-based snapshot technology

SQL server 2022 snapshot technology

SQL server snapshot backup and recovery workflow

Restoring and cloning SQL databases

Links to additional information

Abstract

This article provides guidance on how to use T-SQL snapshots to backup Microsoft SQL Server 2022 leveraging Azure NetApp Files snapshots on Azure virtual machines.

Co-author(s): Pat Sinthusan, NetApp

Intended audience

This blog provides an overview of the configuration of an Azure environment to run SQL Server with SMB file share storage using Azure NetApp Files with the highest levels of resiliency.

This article is intended for Azure NetApp Files and/or SQL Server database administrators who are responsible for deploying and protecting SQL Server in Azure. It is assumed that the reader is familiar with the various components of the solution.

Overview

Managing a very large database (VLDB) is a demanding task for a database (DB) administrator. Azure NetApp Files dramatically simplifies the management of SQL Server VLDBs in Azure environments. SQL Server has supported Server Message Block (SMB) since version 2012. Azure NetApp Files offers access to storage through the multichannel-enabled SMB protocol, providing low-latency file storage to SQL Server combined with snapshot capabilities.

Businesses have been increasingly migrating on-premises workloads to Azure for a number of reasons, including datacenter consolidation and cost effectiveness. For VLDB Migrate and Modernize scenarios with DB sizes in the double-digit TB range, virtual machine (VM)-based, Infrastructure as a Service (IaaS) architectures are a viable option. Memory-optimized Azure VMs like the E-series and M-series offer excellent compute capabilities for demanding DB workloads like SQL VLDBs or SAP HANA. Azure NetApp Files is an Azure-native NAS storage solution for running high performance SQL workloads in combination with Azure VMs.

Some SQL Server installations with DB sizes of over 50TB up to around 200TB are becoming more and more commonplace. Although there is no precise definition for a VLDB, a multi-terabyte DB – especially when growing into the 10s of TiBs – can add additional complexity for high availability and backup and restore operations.

SQL server backup and recovery

Common SQL server BU/R challenges

The following summarizes the SQL server backup and recovery challenges:

- Performance impact on production SQL databases. Conventional backups typically lead to a significant performance impact on the production database. That is because there is a heavy load on the database server, the storage system, and the storage network during traditional copy-based backups.

- Shrinking backup windows. Conventional backups can be taken only during times when little dialog or batch activities take place on the database. The scheduling of backups becomes more and more difficult to define when the databases are in use 24/7.

- Rapid data growth. Rapid data growth, together with shrinking backup windows, results in ongoing investments into the backup infrastructure. Incremental or differential backups can address these issues, but this option results in a very slow, cumbersome, and complex restoration process that is harder to verify. The option also usually leads to increased or elongated recovery time objective (RTO) or recovery point objective (RPO) times that are not acceptable to the business.

- Increasing cost of downtime. Unplanned downtime of a SQL database always has a (financial) impact on the business. A significant part of the unplanned downtime is the time that is needed to restore and recover the database after a failure. The backup and recovery architecture must be designed based on an acceptable RTO.

- Backup and recovery time included in SQL database upgrade projects. The project plan for a SQL database upgrade always includes at least three backups of the database. The time needed to perform these backups dramatically cuts down the total available time for the upgrade process. The go/no-go decision is generally based on the amount of time required to restore and recover the database from the backup that was previously created. The option to restore very quickly allows more time to solve problems that might occur during the upgrade process rather than just restore the system back to its previous state.

Azure NetApp Files storage-based snapshot technology

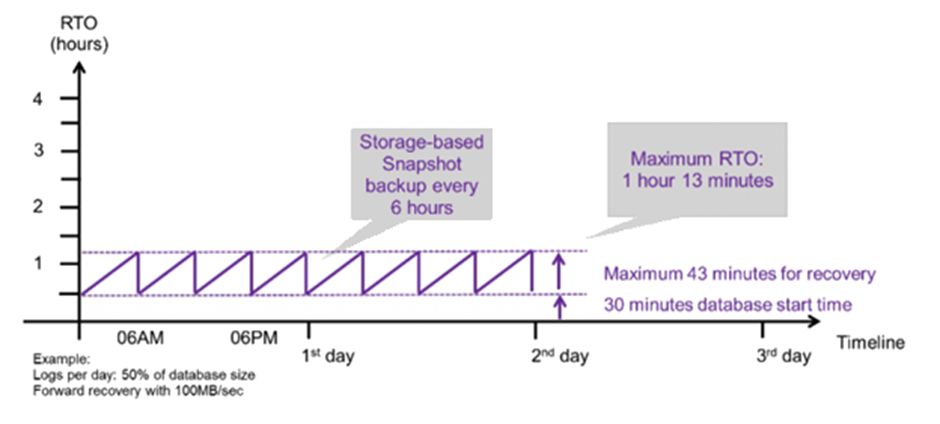

Azure NetApp Files Snapshot technology can be used to create online database backups within minutes. Because a snapshot does not move any physical data blocks on the storage platform, the time needed to create a snapshot is independent of the size of the database. The use of snapshot technology also has no performance impact on the live database. That is because the Azure NetApp Files snapshots do not move or copy data blocks when the snapshot is created or when data in the active file system is changed. Therefore, the creation of snapshots can be scheduled without having to consider peak dialog or batch activity periods. SQL customers leveraging Azure NetApp Files typically schedule multiple online snapshots during the day; for example, scheduling snapshots every four or six hours is common. These snapshots are typically kept for three to five days. For long-term retention older snapshots are then typically vaulted using Azure NetApp Files backup to Azure storage account.

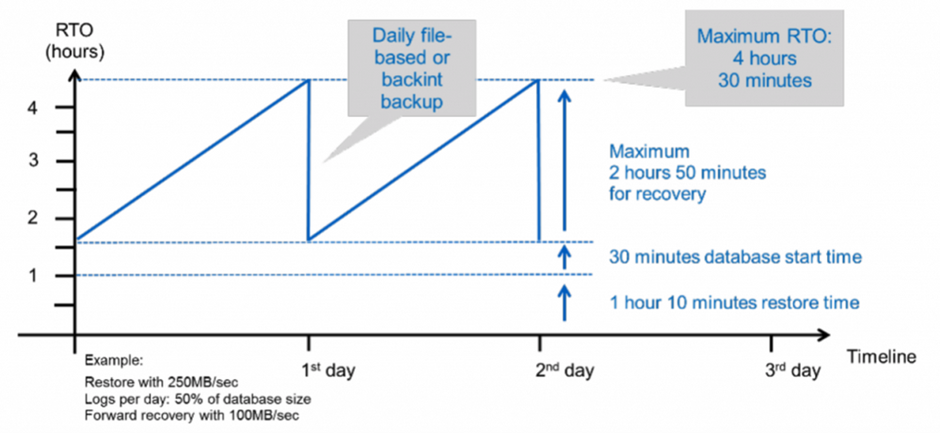

Snapshots also provide key advantages for the restore and recovery operation. Azure NetApp Files ‘Revert Volume’ functionality allows restoration of the entire database to any point in time based on the available snapshots. This restore process is performed near-instantaneously, independent of the size of the database. Because several online snapshots are created during the day, the actual time needed for the recovery process is dramatically reduced, as opposed to a traditional backup approach. A restore operation can be performed using a snapshot that is only a few hours old (rather than up to 24 hours old); therefore, fewer transaction logs need to be applied. As a result, the mean time to recover or RTO, which is the time needed for restore and recovery operations, is reduced to just several minutes compared to multiple hours with conventional single-cycle tape backups.

Azure NetApp Files snapshots are stored on the same volume as the active online data. Therefore, it is recommended to use Azure NetApp Files backup or Azure NetApp Files cross region replication (CRR) to a secondary Azure NetApp Files region to safeguard the data from accidental deletions.

SQL server 2022 snapshot technology

This article describes how to backup and restore a SQL Server 2022 database when using Azure NetApp Files using T-SQL snapshots to protect the database.

DB-consistent storage snapshots offer great advantages over the traditional built-in SQL Server backup functionality in terms of speed and added business value. Snapshots can, for example, be utilized for quickly syncing dev/test-systems or a fast recovery from failed operations or DB corruptions. Transact SQL (T-SQL) snapshot backup is now available with SQL Server 2022. However, the backup itself happens at the storage level, this is not a SQL Server-only solution. SQL Server must first prepare the data and log files for the snapshot so that the files are guaranteed to be in a state that can later be restored. Once this is done, I/O is frozen on SQL Server and control is handed over to the backup application to complete the snapshot. Once the snapshot has successfully completed, the application must return control back to SQL Server where I/O is then resumed.

With T-SQL snapshot backups, the SQL Server side of the orchestration can be handled with a series of T-SQL commands. This allows users to create their own simple backup applications that can run on either Windows or Linux, or even scripted solutions as the underlying storage supports a scripting interface to initiate a snapshot.

SQL server snapshot backup and recovery workflow

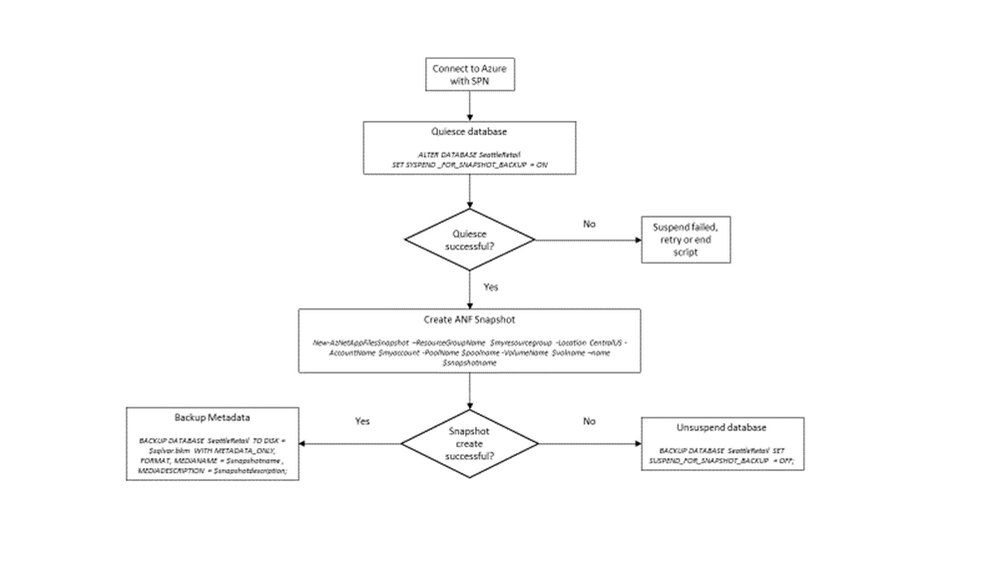

The following summarizes the backup workflow:

- Freeze a database with ALTER command – providing an opportunity to perform a consistent snapshot on the underlying storage. After this you can thaw the database and record the snapshot with BACKUP command.

- Perform snapshots of multiple databases simultaneously with the new BACKUP GROUP and BACKUP SERVER commands. This enables snapshots to be performed at the snapshot granularity of the underlying storage and eliminates the need to perform a snapshot of the storage volume(s) multiple times.

- Perform FULL backups as well as COPY_ONLY FULL backups. These backups are recorded in msdb as well.

- Perform point-in-time recovery using log backups taken with the normal streaming approach after the snapshot FULL backup. Streaming differential backups are also supported if desired.

Since SQL Server use UNC path on Azure NetApp Files volumes (SMB protocol) it is easy to point to the database files and log location by using UNC path. There is no need to mount or remount the volumes.

The following is a sample PowerShell script to create SQL Server snapshot backup with Azure NetApp Files snapshots. This PowerShell use service principal name (SPN) to login to Azure. For more information on SPN, please visit Use the portal to create an Azure AD application and service principal that can access resources.

Backup command sequence

In this scenario, Contoso’s ‘SeattleRetail’ database has 2 database files with 1 TiB each residing on Azure NetApp Files volumes:

- Prerequisite. Azure PowerShell and Azure NetApp Files PowerShell must be installed by using the following command:

Set-ExecutionPolicy -ExecutionPolicy RemoteSigned -Scope CurrentUser

Install-Module -Name Az -Scope CurrentUser -Repository PSGallery -Force

Install-Module -Name Az.NetAppFiles -AllowClobber -Force

- Define and create all variables:

#Azure variable

$azvar = @{

plainPassword = '<Application Secret>'

ApplicationId = '<Your Application ID>'

TenantId = '<Your Tanent ID>'

resourcegroup = '<Your resource group>'

location = '<Your region>'

}

$SecuredPassword = $azvar.plainPassword | ConvertTo-SecureString -AsPlainText -Force

#ANF variable

$anfvar = @{

accountname = 'ntap-acct-contoso-central'

poolname = 'cap-pool-contoso'

vols = @('SQL2022Prod-data1', 'SQL2022Prod-data2', 'SQL2022Prod-log')

ss = "SQL2022Prod-ss"

}

#SQL Server variable

$sqlvar = @{

SQLServer = 'SQL2022Prod'

db = "[SeattleRetail]"

bkm = "'\\contoso-900e.contoso.io\snapinfo\SeattleRetail.bkm'"

}

$SQLConn = New-Object System.Data.SQLClient.SQLConnection

$Command = New-Object System.Data.SQLClient.SQLCommand

$SQLConn.ConnectionString = "server='"+ $sqlvar.SQLServer +"';database='master';Integrated Security=True;"

$Suspenddb = "ALTER DATABASE " + $sqlvar.db + " SET SUSPEND_FOR_SNAPSHOT_BACKUP = ON;"

#Unsuspenddb

$Suspenddb = "ALTER DATABASE " + $sqlvar.db + " SET SUSPEND_FOR_SNAPSHOT_BACKUP = OFF;"

$Backupmetadata = "BACKUP DATABASE " + $sqlvar.db + " TO DISK = " + $sqlvar.bkm + " WITH METADATA_ONLY, FORMAT, MEDIANAME = '" + $anfvar.ss + "' , MEDIADESCRIPTION = '" + $anfvar.ss + "';"

#For restore the database back to the same server

$InplaceRestore = "RESTORE DATABASE " + $sqlvar.db + " FROM DISK = " + $sqlvar.bkm + " WITH METADATA_ONLY;"

#For creating a clone database to the same or different server

$Clonedb = "RESTORE DATABASE [SeattleRetail-Clone] FROM DISK = " + $sqlvar.bkm + " WITH METADATA_ONLY `, MOVE 'SeattleRetail1' TO '\\contoso-900e.contoso.io\SQL2022Prod-data1-clone\SeattleRetail1.mdf' `, MOVE 'SeattleRetail2' TO '\\contoso-900e.contoso.io\SQL2022Prod-data2-clone\SeattleRetail2.mdf' `, MOVE 'SeattleRetail_log' TO '\\contoso-900e.contoso.io\SQL2022Prod-log-clone\SeattleRetail_log.ldf'"

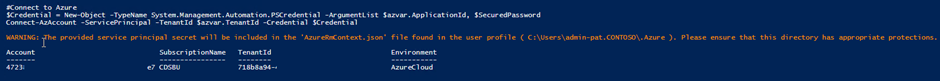

- Connect to Azure with SPN:

#Connect to Azure

$Credential = New-Object -TypeName System.Management.Automation.PSCredential -ArgumentList $azvar.ApplicationId, $SecuredPassword

Connect-AzAccount -ServicePrincipal -TenantId $azvar.TenantId -Credential $Credential

The result of the above command should display as follows:

- Suspend SQL Server with the following command:

#Connect to SQL Server

$SQLConn.Open()

$Command.Connection = $sqlConn

#Suspend DB

$Command.CommandText = $suspenddb

$Result = $Command.ExecuteNonQuery()

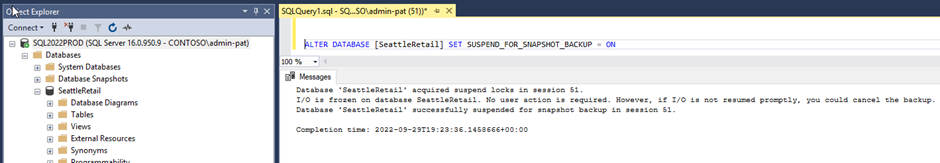

This is similar to executing the T-SQL command in SQL Server Management Studio:

- Create Azure NetApp Files snapshot(s) with the following command:

#Create Snapshot

foreach ($vol in $anfvar.vols){

New-AzNetAppFilesSnapshot -ResourceGroupName ($azvar.resourcegroup) -Location ($azvar.location) -AccountName ($anfvar.accountname) -PoolName ($anfvar.poolname) -VolumeName $vol -name ($anfvar.ss)

}

This command is similar to using Azure Portal:

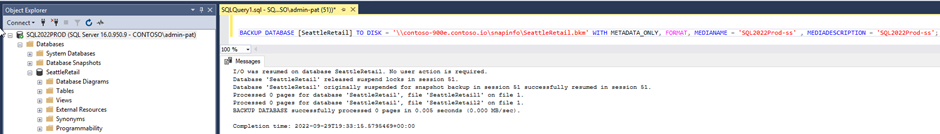

- Backup Metadata with the following command:

$Command.CommandText = $Backupmetadata

$Result = $Command.ExecuteNonQuery()

This is similar to execute T-SQL with SQL Server Manage Studio as follow:

Restoring and cloning SQL databases

- Restore database from Azure NetApp Files snapshot(s) with the following command:

Foreach ($vol in $anfvar.vols) {

$volobj = Get-AzNetAppFilesVolume -ResourceGroupName $azvar.resourcegroup -AccountName $anfvar.accountname -PoolName $anfvar.poolname -Name $vol

$ssid = Get-AzNetAppFilesSnapshot -ResourceGroupName $azvar.resourcegroup -AccountName $anfvar.accountname -PoolName $anfvar.poolname -VolumeName $vol -Name $anfvar.ss | select -ExpandProperty SnapshotId

Restore-AzNetAppFilesVolume -ResourceGroupName $volobj.ResourceGroupName -AccountName $anfvar.accountname -PoolName $anfvar.poolname -VolumeName $vol -SnapshotId $ssid

}

$Command.CommandText = $InplaceRestore

$Result = $Command.ExecuteNonQuery()

- Cloning database using Azure NetApp Files snapshot(s) by follow step 1 through 6 and use the following command to create new volumes from snapshot(s):

#Create Clone Volume

foreach ($vol in $anfvar.vols){

$volobj = Get-AzNetAppFilesVolume -ResourceGroupName $azvar.resourcegroup -AccountName $anfvar.accountname -PoolName $anfvar.poolname -Name $vol

$volclone = $vol + '-clone'

$ssid = Get-AzNetAppFilesSnapshot -ResourceGroupName $azvar.resourcegroup -AccountName $anfvar.accountname -PoolName $anfvar.poolname -VolumeName $vol -Name $anfvar.ss | select -ExpandProperty SnapshotId

New-AzNetAppFilesVolume -ResourceGroupName $azvar.resourcegroup -Location $azvar.location -AccountName $anfvar.accountname -PoolName $anfvar.poolname -VolumeName ` $volclone -SnapshotId $ssid -UsageThreshold $volobj.UsageThreshold -SubnetId $volobj.SubnetId ` -CreationToken $volclone -ServiceLevel $volobj.ServiceLevel -SecurityStyle ntfs -ProtocolType CIFS

}

$Command.CommandText = $Clonedb

$Result = $Command.ExecuteNonQuery()

For more details information on T-SQL snapshot backup, please refer to Create a Transact-SQL snapshot backup.

Summary

Using SQL server 2022 T-SQL snapshot backup in conjunction with Azure NetApp Files provides unique data management abilities for SQL customers only found in Azure. The combination of SQL server snapshots with Azure NetApp Files storage snapshots give teams the insurance of an application consistent backup of the SQL database without the time and load on the system. The orchestration of this workflow can be handled by scripting.

Links to additional information

- https://learn.microsoft.com/azure/architecture/example-scenario/file-storage/sql-server-azure-netapp-files

- https://learn.microsoft.com/azure/azure-netapp-files/azure-netapp-files-introduction

- https://techcommunity.microsoft.com/t5/azure-architecture-blog/deploying-sql-server-on-azure-using-azure-netapp-files/ba-p/3023143

- https://learn.microsoft.com/azure/azure-netapp-files/snapshots-introduction

- https://learn.microsoft.com/sql/relational-databases/backup-restore/create-a-transact-sql-snapshot-backup

Published on:

Learn moreRelated posts

Video: Copilot Studio – Connect Azure SQL As Knowledge

SQL is where the worlds data is stored. And in this video I’m going to ... The post Video: Copilot Studio – Connect Azure SQL As Knowled...

Azure Backup Threat Detection

One Azure, Many Logins: How Users Access Microsoft’s Cloud Safely

Users can access Microsoft Azure through several flexible and secure methods, depending on their role, device, and workload needs. The most co...

Unlocking New Possibilities: Microsoft Azure Hyperscale AI Computing with H200 GPUs Accelerates Secure AI Innovation in Azure for U.S. Government Secret and Top Secret

As artificial intelligence continues to reshape industries and redefine the boundaries of innovation, Microsoft is proud to announce a leap fo...

Tata Neu delivers personalized shopping experiences for millions of users with Azure DocumentDB

With Azure DocumentDB, Tata Neu delivers seamless authentication for millions of users, accelerates credit card onboarding across partners, un...