Deploy Multi-Region HPC clusters in Azure with CycleCloud

CycleCloud Multi-Region Cluster Overview

Overview

High Performance Computing (HPC) clusters in Azure are almost exclusively deployed per Azure Region (e.g. East US, South Central US, West Europe). Data gravity usually drives this, as your data should be as close to the compute as possible to reduce latency. If a need arises to use a different Region, the default answer is to create a new, separate cluster in that Region and manage multiple clusters. This additional management overhead isn't always desirable, and many customers ask how to create a single cluster that can span multiple Azure Regions. This blog provides an example of how to create a Multi-Region Slurm cluster using Azure CycleCloud (CC).

What are some drivers for creating a Multi-Region HPC cluster?

- Capacity. Sometimes a single Azure Region cannot accommodate the quantity of compute cores needed. In this case, a loosely coupled workload can be split across multiple Regions to get access to all the compute cores requested.

- Specialty Compute (e.g. GPU, FPGA, InfiniBand). Your organization created an Azure environment in a specific Region (e.g. East US 2) and later requires specialty compute VMs that are not available in that Region. Examples include high-end GPU VMs (e.g. NDv4), FPGA VMs (e.g. NP) and HPC VMs (e.g. HB and HC).

- Public Datasets. Azure hosts numerous Public Datasets, but they are generally specific to a Region (e.g. West US 2). You may have an HPC cluster and Data Lake configured in South Central US but need a compute queue/partition in West US 2 to make optimal use of a Public Dataset (e.g. the Genomics Data Lake).

REQUIREMENTS

- The Azure CC UI has filters that restrict resource configuration to a single Region. Creating a Multi-Region cluster with CC therefore requires all "Parameters" typically configured in the UI to be hardcoded in the cluster template file or in a parameters file. The configured template file and parameters are then imported into CC as a cluster instance using the CC CLI.

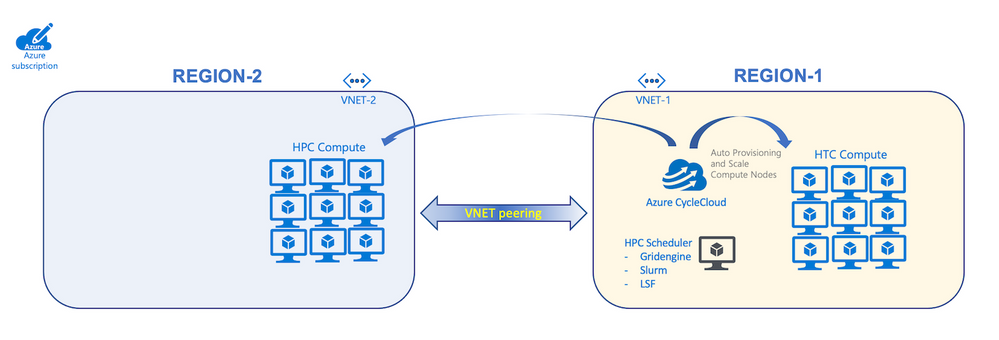

- Network connectivity must exist between the head node (aka "scheduler") in Region1 and the compute nodes in Region2. The easiest way to accomplish this is with VNET Peering between VNET-2 in Region-2 and VNET-1 in Region-1 (refer to the drawing above). The cluster parameters file will need to specify both the Region and the VNET for each node/nodearray definition (NOTE: these are typically defined once in the template [[node defaults]] section). The peering can be created with the Azure CLI command `az network vnet peering create`, run once from each side.

- Name resolution is another key requirement for a Multi-Region cluster. Traditionally, CC provides name resolution by managing the /etc/hosts file on each cluster node, pre-populated with hostnames in the format ip-0A0A0004, which is the hexadecimal encoding of the node IP address (e.g. 0A0A0004 > 10.10.0.4). This has been updated in CC v8.2.1 and Slurm Project version 2.5.x to use Azure DNS instead, which allows custom hostnames (for Nodes/VMs) and prefixes (for NodeArrays/VMSS). For a Multi-Region cluster this must be taken a step further by using an Azure Private DNS Zone linked to both VNET-1 and VNET-2. For example, the zone can be created with `az network private-dns zone create` and linked to each VNET with `az network private-dns link vnet create`.
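The peering and Private DNS requirements above can be scripted with the Azure CLI. The following is a minimal sketch, not the author's exact procedure: the resource group names, VNET names and zone name are hypothetical placeholders, keeping the zone in Region-1's resource group is an assumption, and enabling auto-registration on both VNET links is an assumption about how you want node hostnames published. Peering is created in both directions because Azure VNET peering is configured per side. By default the script only prints the commands; set `DRY_RUN=0` to run them.

```bash
#!/usr/bin/env bash
# Minimal sketch: peer VNET-1 (Region-1) and VNET-2 (Region-2) and link a
# Private DNS Zone to both. All names below are hypothetical placeholders.
# Commands are only printed unless DRY_RUN=0.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"
RG1="rg-region1"                     # hypothetical resource group of VNET-1
VNET1="vnet-1"                       # hypothetical VNET-1 (scheduler, Region-1)
RG2="rg-region2"                     # hypothetical resource group of VNET-2
VNET2="vnet-2"                       # hypothetical VNET-2 (compute, Region-2)
DNS_RG="$RG1"                        # assumption: zone lives in Region-1's group
ZONE="${ZONE:-private.example.net}"  # hypothetical Private DNS Zone name

# Print a command, and execute it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "$DRY_RUN" = "0" ]; then
    "$@"
  fi
}

# Full resource ID of a VNET (required when the peer is in another resource
# group). In dry-run mode a placeholder is returned instead of calling az.
vnet_id() {
  if [ "$DRY_RUN" = "0" ]; then
    az network vnet show --resource-group "$1" --name "$2" --query id --output tsv
  else
    echo "<id-of-$2>"
  fi
}

VNET1_ID="$(vnet_id "$RG1" "$VNET1")"
VNET2_ID="$(vnet_id "$RG2" "$VNET2")"

# VNET peering is configured per side, so create it in both directions.
run az network vnet peering create --resource-group "$RG1" --vnet-name "$VNET1" \
  --name "${VNET1}-to-${VNET2}" --remote-vnet "$VNET2_ID" --allow-vnet-access
run az network vnet peering create --resource-group "$RG2" --vnet-name "$VNET2" \
  --name "${VNET2}-to-${VNET1}" --remote-vnet "$VNET1_ID" --allow-vnet-access

# Create the Private DNS Zone and link it to both VNETs. Auto-registration
# (--registration-enabled true) is an assumption; note that a VNET can
# auto-register into only one Private DNS Zone.
run az network private-dns zone create --resource-group "$DNS_RG" --name "$ZONE"
run az network private-dns link vnet create --resource-group "$DNS_RG" \
  --zone-name "$ZONE" --name "${VNET1}-link" --virtual-network "$VNET1_ID" \
  --registration-enabled true
run az network private-dns link vnet create --resource-group "$DNS_RG" \
  --zone-name "$ZONE" --name "${VNET2}-link" --virtual-network "$VNET2_ID" \
  --registration-enabled true
```

After replacing the placeholder names, a run such as `DRY_RUN=0 ZONE=private.contoso.net bash network-setup.sh` (file name and zone hypothetical) would apply the changes. The zone name you choose here is the one that replaces `private.ccmr.net` in the template.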

IMPLEMENT

- The remaining steps assume you have a working CC environment set up, with the CC CLI installed and VM quota in both Regions

- Acquire the sample template from the GitHub repo. There is no need to clone the entire repo; just download the Slurm Multi-Region template and the accompanying Parameters file

- Edit the parameters file (`slurm-multiregion-params-min.json`) in your editor of choice (e.g. Visual Studio Code, vim)
  - `Credentials` is the common name of the CC credential in your environment. This can be found in your CC GUI or with the CC CLI command `cyclecloud account list`
  - `Primary*` represents the scheduler and HTC partition, whereas `Secondary*` represents the HPC partition
  - Update `PrimarySubnet`, `PrimaryRegion`, `SecondarySubnet` & `SecondaryRegion`
    - `*Subnet` is of the format `resource-group-name/vnet-name/subnet-name` (the template has a placeholder name)
    - `*Region` name can be found with the azure-cli command `az account list-locations -o table`
  - Update `HPCMachineType`, `MaxHPCExecuteCoreCount`, `HTCMachineType` & `MaxHTCExecuteCoreCount` as necessary
  - Save your updates and exit

- Edit the template file (`slurm-multiregion-git.txt`) and replace `private.ccmr.net` with your specific Private DNS Zone name

- Upload your modified template file to your CC server as follows:

```bash
cyclecloud import_cluster slurm-multiregion-cluster -c Slurm -f slurm-multiregion-git.txt -p slurm-multiregion-params-min.json
```

  - `slurm-multiregion-cluster` = a name for the cluster chosen by you (no spaces)
  - `-c Slurm` = name of the cluster defined in the template file (line 6 of the sample template)
  - `-f slurm-multiregion-git.txt` = file name of the template to upload
  - `-p slurm-multiregion-params-min.json` = file name of the parameters file to upload

- Submit a test job to the Region2 VMs (`sbatch mpi.sh`):

```bash
#!/bin/bash
#SBATCH --job-name=mpiMultiRegion
#SBATCH --partition=hpc
#SBATCH -N 2
# 60 MPI processes per node
#SBATCH -n 120
#SBATCH --chdir /tmp
#SBATCH --exclusive

set -x
source /etc/profile.d/modules.sh
module load mpi/hpcx

echo "SLURM_JOB_NODELIST = " $SLURM_JOB_NODELIST

# Assign the number of processors
NPROCS=$SLURM_NTASKS

# Run the job
mpirun -n $NPROCS --report-bindings echo "hello world!"

# Move the Slurm output file (written to /tmp) to the user home directory
mv "slurm-${SLURM_JOB_ID}.out" "$HOME"
```

NOTE: the default Slurm working directory is the directory from which the job was submitted, typically the user home directory. As the home directory will likely be in Region1, it's important to explicitly set a working directory that is local to Region2. In the above example I set it to the VM-local /tmp (`#SBATCH --chdir /tmp`) and added a line at the end to move the Slurm output file to the user home directory.

- Review the output file in your home directory (e.g. slurm-2.out for JobID 2)
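The template edit and import steps in the list above can also be scripted. This is a minimal sketch under stated assumptions rather than part of the original walkthrough: it assumes the downloaded template and the already-edited parameters file sit in the current directory, uses a hypothetical zone name (`private.example.net`, override with `ZONE=`), and passes the same `import_cluster` arguments shown above. The parameters file still has to be edited by hand. By default the script only prints the commands; with `DRY_RUN=0` it performs the `sed` replacement (keeping a `.bak` copy of the template) and the import.

```bash
#!/usr/bin/env bash
# Minimal sketch: replace the sample DNS zone in the template, then import it.
# Assumes the template and edited parameters file are in the current directory.
# Commands are only printed unless DRY_RUN=0.
set -euo pipefail

DRY_RUN="${DRY_RUN:-1}"
ZONE="${ZONE:-private.example.net}"      # hypothetical Private DNS Zone name
CLUSTER_NAME="slurm-multiregion-cluster" # your choice of cluster name (no spaces)
TEMPLATE="slurm-multiregion-git.txt"
PARAMS="slurm-multiregion-params-min.json"

# Print a command, and execute it only when DRY_RUN=0.
run() {
  echo "+ $*"
  if [ "$DRY_RUN" = "0" ]; then
    "$@"
  fi
}

# Replace the sample zone name ($1 = template file, $2 = your zone).
# The dots are escaped so they match literally; sed keeps a .bak copy.
replace_zone() {
  run sed -i.bak "s/private\.ccmr\.net/$2/g" "$1"
}

# Import template + parameters as a cluster instance. The -c value must match
# the cluster name defined inside the template ("Slurm" in the sample).
import_cluster() {
  run cyclecloud import_cluster "$CLUSTER_NAME" -c Slurm -f "$TEMPLATE" -p "$PARAMS"
}

replace_zone "$TEMPLATE" "$ZONE"
import_cluster
```

Running it first without `DRY_RUN=0` is a cheap way to review the exact commands before anything is modified on disk or in CycleCloud.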

CONCLUSION

With careful planning and implementation, it is possible to create a Multi-Region Slurm cluster with Azure CycleCloud. This blog is not all-inclusive, and additional customization is likely required for a customer-specific environment, such as adding mounts (e.g. datasets) specific to the workflow in Region2.
