Parquet file format – everything you need to know!

If you're dealing with big data, you've probably heard of the Parquet file format. In this blog post, you'll get a comprehensive overview of everything you need to know about this file format.

First, you'll learn about the advantages Parquet offers over other file formats, such as CSV and JSON: it reduces storage costs and allows for faster querying.

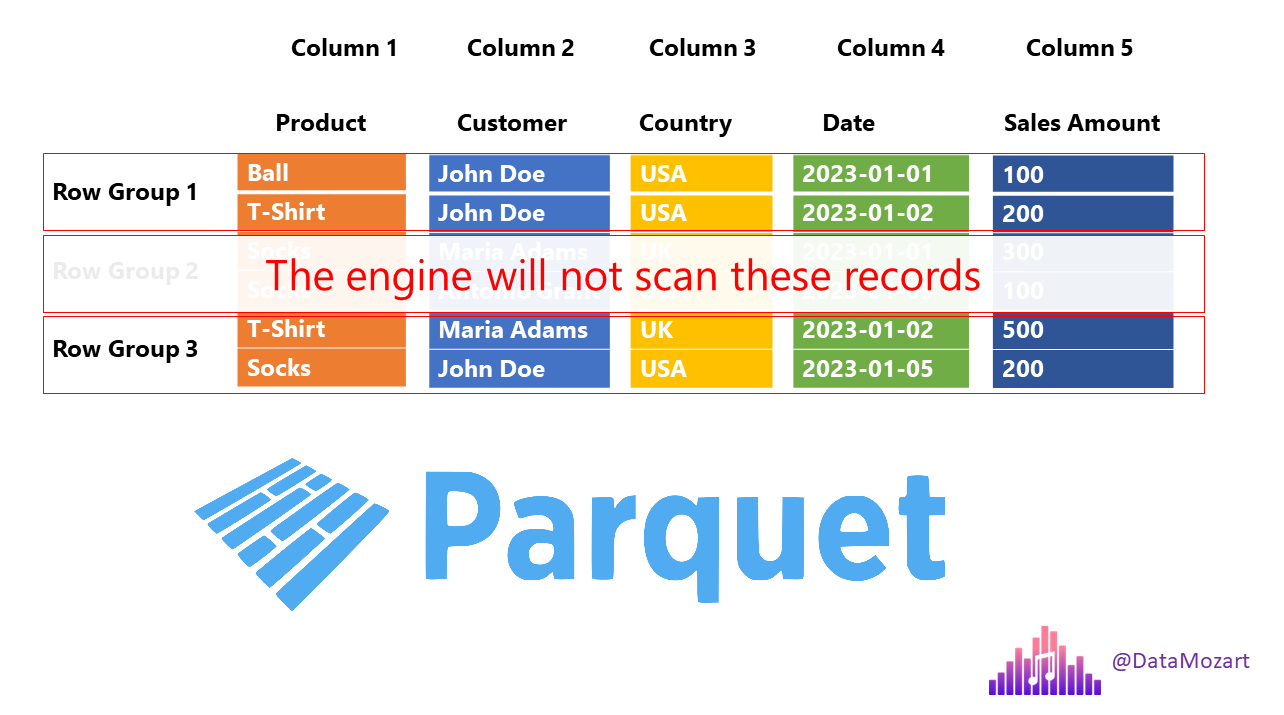

You'll also discover the inner workings of the Parquet file format, including its columnar storage architecture and compression methods, which together allow for more efficient use of disk space and faster data retrieval.
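To make the columnar idea concrete, here is a minimal sketch in plain Python. It is a toy illustration, not the actual Parquet on-disk format or any library's API: the sample data and the helper names (`to_columns`, `run_length_encode`, `run_length_decode`) are assumptions made up for this example. It shows why storing values column by column lets a query read only the columns it needs, and why repeated values within a column compress well with a simple scheme such as run-length encoding.

```python
from itertools import groupby

# Row-oriented layout (CSV/JSON style): each record keeps all its fields together.
# The data below is invented purely for illustration.
rows = [
    {"country": "DE", "year": 2023, "sales": 10.5},
    {"country": "DE", "year": 2023, "sales": 7.25},
    {"country": "DE", "year": 2023, "sales": 3.0},
    {"country": "US", "year": 2023, "sales": 8.0},
    {"country": "US", "year": 2023, "sales": 1.5},
]


def to_columns(records):
    """Pivot a list of records into one list of values per column."""
    return {name: [record[name] for record in records] for name in records[0]}


def run_length_encode(values):
    """Collapse consecutive repeated values into [value, count] pairs."""
    return [[value, len(list(group))] for value, group in groupby(values)]


def run_length_decode(pairs):
    """Expand [value, count] pairs back into the original list."""
    return [value for value, count in pairs for _ in range(count)]


columns = to_columns(rows)

# Column projection: a query that only needs "sales" touches a single list
# and never looks at "country" or "year".
total_sales = sum(columns["sales"])

# Values in one column share a type and often repeat, so they encode compactly.
encoded_country = run_length_encode(columns["country"])
encoded_year = run_length_encode(columns["year"])

print(total_sales)
print(encoded_country)
print(encoded_year)
```

Real Parquet files go further than this sketch: they split data into row groups and pages, combine encodings such as dictionary and run-length encoding, and apply a general-purpose compression codec on top.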

Finally, the post covers how Parquet integrates with popular big data frameworks like Hadoop, Spark, and Hive.

Overall, this post is a solid introduction for anyone looking to work with Parquet files, highlighting both the benefits and the technical details of the format.

The post Parquet file format – everything you need to know! appeared first on Data Mozart.


Related posts

Beyond Storage: Transformative Querying Tactics for Data Warehouses

Data warehousing is a vital aspect of modern-day data analysis, but simply storing raw data isn't enough. To truly leverage the value of data,...

The 4 Main Types of Data Analytics

It's no secret that data analytics is the backbone of any successful operation in today's data-rich world. That being said, did you know that ...

Incrementally loading files from SharePoint to Azure Data Lake using Data Factory

If you're looking to enhance your data platform with useful information stored in files like Excel, MS Access, and CSV that are usually kept i...

Data Analytics Case Study Guide 2023

Data analytics case studies serve as concrete examples of how businesses can harness data to make informed decisions and achieve growth. As a ...

OneLake: Microsoft Fabric’s Ultimate Data Lake

Microsoft Fabric's OneLake is the ultimate solution to revolutionizing how your organization manages and analyzes data. Serving as your OneDri...

Pandas Read Parquet File into DataFrame? Let’s Explain

Parquet files are becoming increasingly popular for data storage owing to their efficient columnar storage format, which enables faster query ...

Convert CSV to Parquet using pySpark in Azure Synapse Analytics

If you're working with CSV files and need to convert them to Parquet format using pySpark in Azure Synapse Analytics, this video tutorial is f...

Data Scientist vs Data Analyst: Key Differences Explained

In the world of data-driven decisions, the roles of data analysts and data scientists have emerged as crucial players in the era of big data. ...

Dealing with ParquetInvalidColumnName error in Azure Data Factory

Azure Data Factory and Integrated Pipelines within the Synapse Analytics suite are powerful tools for orchestrating data extraction. It is a c...